Information is the frontier for the study of life

- Life’s unique use of information may be the key to understanding what makes it different from other physical systems.

- Life is the only physical system that actively uses information.

- We need to find a tractable, operational, and mathematical definition of semantic information that researchers can use to pry open the physics of life.

Life is really weird. From the vantage point of a physicist, it is even stranger. Life is unlike any other phenomenon in physics. Stars, electrons, and black holes are all amazing in their own ways. But only life invents, and the first thing life invents is itself.

Life is creative in a way that no other physical system can be, and its unique use of information may be the key to understanding what makes it different from other physical systems. Now, thanks to a new grant my colleagues and I have received from the Templeton Foundation, we are going to be exploring exactly how information allows life to work its magic. I’m very excited about the project, and this essay is my first report from the frontier as we plunge into terra incognita.

How information defines order

If information is the key to understanding life, the first question is, what kind of information does life use? Answering that question is the focus of our research project. To understand why it is important, we have to go back to the dawn of the information age and its earliest pioneer, Claude Shannon, a researcher at Bell Laboratories during the mid-20th century. In 1948, Shannon wrote a paper titled "A Mathematical Theory of Communication." It was a game changer, and it set the stage for the digital revolution. Shannon’s first step was to define what he meant by information. To do this, he relied on earlier work that looked at the question in terms of probabilities, that is, in terms of how surprising an event is.

Consider watching a string of characters appear on your computer screen one after the other. If the string consisted of nothing but the number 1 repeated over and over, the appearance of another 1 would not be very surprising. But if a 7 suddenly appeared, you would perk up. Something new would have shown up in the string, and for Shannon, that means information has been conveyed. For him, there is a link between the probability of an event and the information it holds: the less probable the event, the more information it carries. Working with this link between information and events as symbols in a string, Shannon defined syntactic information. It was all about syntax, he reasoned: the proper order of characters, words, and phrases. Shannon then worked out a stunningly powerful theory of how to use syntactic information, one that became the basis for modern digital communication and computing.
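To make the link between probability and surprise concrete, here is a minimal Python sketch of the 1s-and-7 example. It uses the standard Shannon measures: the surprisal of a symbol with probability p is -log2(p) bits, and entropy is the average surprisal. The function names are hypothetical, and estimating each symbol's probability from how often it appears in the string is a simplifying assumption made for illustration, not something Shannon's theory requires.

```python
import math
from collections import Counter


def surprisal_bits(p):
    """Information, in bits, carried by an event of probability p."""
    return -math.log2(p)


def symbol_probabilities(stream):
    """Assumption: estimate each symbol's probability from its frequency."""
    counts = Counter(stream)
    total = len(stream)
    return {symbol: count / total for symbol, count in counts.items()}


def entropy_bits(stream):
    """Average surprisal per symbol, using the frequency estimates above."""
    probs = symbol_probabilities(stream)
    return sum(p * surprisal_bits(p) for p in probs.values())


# Fifteen 1s followed by a single, unexpected 7.
stream = "1" * 15 + "7"
probs = symbol_probabilities(stream)

for symbol in sorted(probs):
    p = probs[symbol]
    print(f"symbol {symbol}: p = {p:.4f}, surprisal = {surprisal_bits(p):.4f} bits")

print(f"entropy = {entropy_bits(stream):.4f} bits per symbol")
```

Under these assumptions, each additional 1 carries less than a tenth of a bit, while the lone 7 carries 4 bits. Nothing in the calculation refers to what a 1 or a 7 stands for.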

Sure, but what does it mean?

What Shannon’s definition did not address, however, was meaning. Shannon made it explicit that addressing meaning was never his intent, but meaning is key to the common-sense understanding of what information is. Information conveys something to be known to someone who wants to know it. In other words, information has semantic content, and what Shannon’s syntactic definition had purposely ignored was the role of semantic information.

This is a huge potential problem when it comes to trying to understand the unique role information plays for life. The reason physicists see information as the key to understanding life as a physical system is simple: Life is the only physical system that actively uses it. Sure, I can describe the network of thermonuclear reactions in a star in terms of information. But I also could choose not to describe it that way. After all, the star is not using the information in any way that makes such a description necessary.

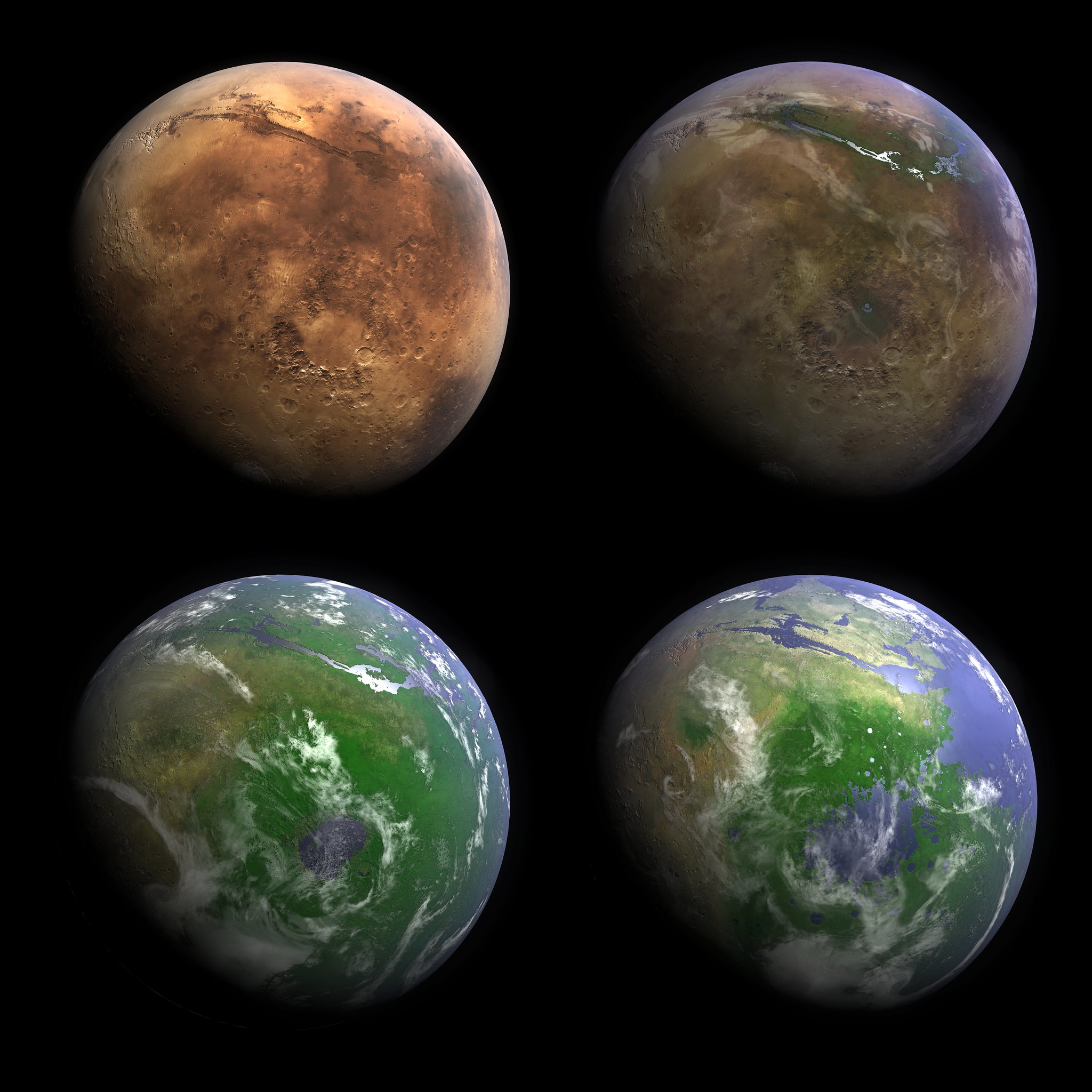

Life, however, is different. Life must be described in terms of its use of information. This is because life’s capacity to create and maintain itself can only occur because it processes information.

But syntactic information alone is not what matters for life. Meaning is also important. The information life uses always carries a valence, a significance to the organism’s continuation. This gives life its agency and autonomy, allowing it to sense and respond to the environment. Even more fundamental is the division it establishes between an organism and its environment: it separates “me” from “not me.”

Our goal, then, is to understand life’s unique use of semantic information. Of course, “meaning” is a slippery philosophical idea, and many books have been written on the subject. But our Templeton project, based on a brilliant 2018 paper by Artemy Kolchinsky and David Wolpert, aims to find a tractable, operational, and mathematical definition of semantic information that researchers can use to pry open the physics of life. (Kolchinsky, by the way, is part of the team.) If the project succeeds, we might be able to understand how a bunch of chemicals can eventually give shape to a cell, or how a bunch of individuals can form a complex technological society.

It is a very exciting possibility, and we’re just getting started. Stay tuned to see how it goes.