The Dangers of Our ‘Inconvenient Mind’

Here’s some bad news for those of you who like to think you can think rationally about risk. You can’t. You know all those thoughtfully considered views you have about nuclear power or genetically modified food or climate change? They are really no more than a jumble of facts, and how you feel about those facts. That’s right. They’re just your opinions. Which is bad news, because no matter how right you feel, you might be wrong. And being wrong about risk is risky, to you AND to others.

Ever-growing mountains of research on human cognition make inescapably clear that your brain is only the organ with which you think you think. It is first and foremost a survival machine, and it uses instinct first and reason second when judging whether something is dangerous. This is generally good for survival, since thinking takes more effort, and time, and a slower response to risk could mean you end up dead. So the human brain has evolved to feel first and reason second.

We are also hardwired to feel more and reason less overall, not just to feel first, because we never have all the facts, or even most of them, when we are called on to decide. We have evolved to use all sorts of instinctive mental shortcuts, and our overall affect – how things feel – to make our way in the world. This Instinct First/Reason Second and Instinct More/Reason Less system may be fine for simpler threats like lions or snakes or a dark alley, but it’s less fine if the possible bogeyman is industrial chemistry or climate change or genetically modified food. Such complex issues are fraught with critical factual details. Understanding them requires expertise most of us don’t have. They demand more careful reflection. The more complicated perils of our post-industrial/technological/information age pose a serious challenge to a brain designed to protect us from simpler hazards.

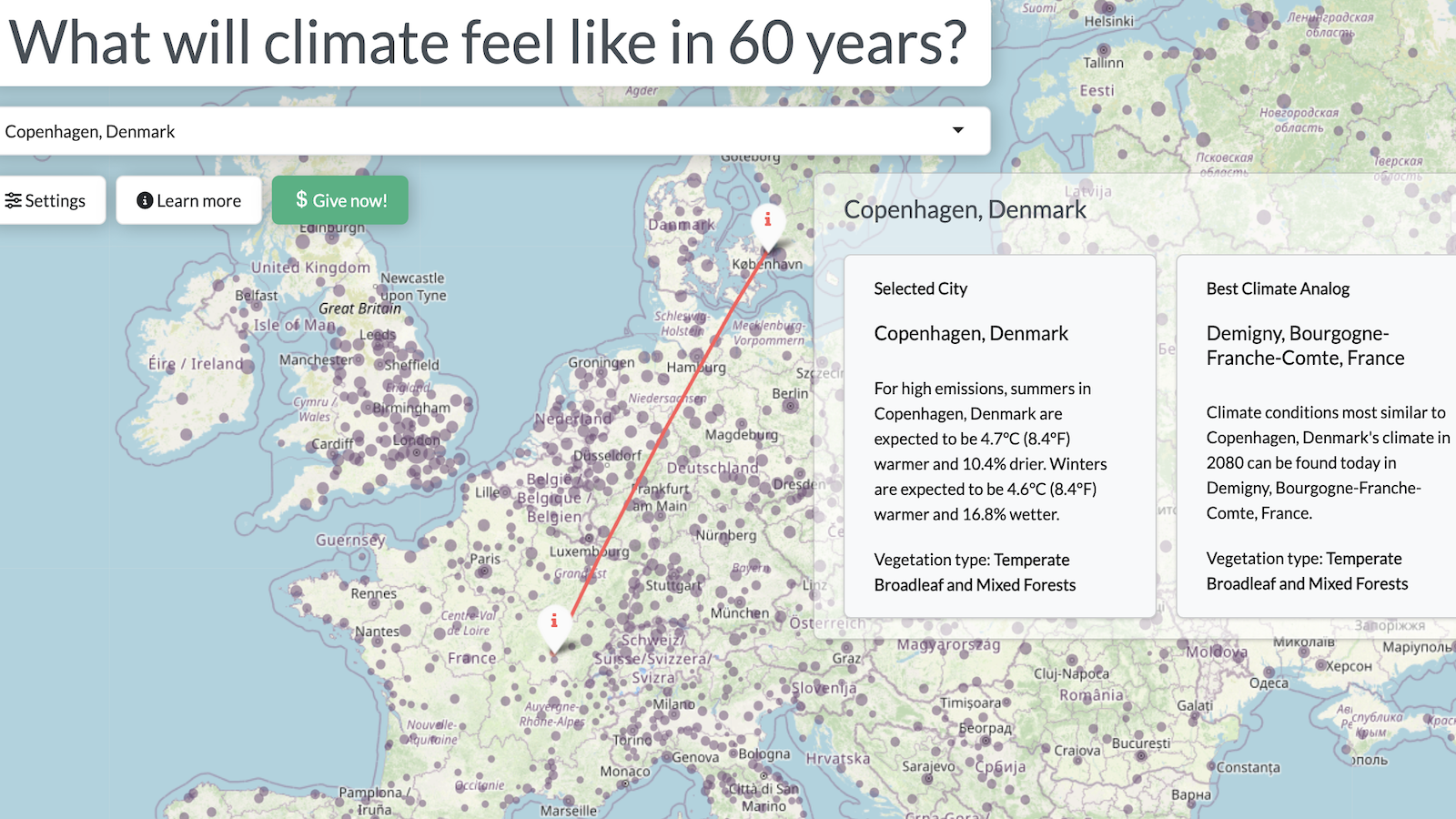

Consider climate change. One of the subconscious tool kits our brain employs to gauge risk is a set of risk perception ‘fear factors’ that help us quickly and instinctively figure out what feels scary. For example, immediate risks are scarier than those which are delayed. Risks that feel personally threatening are scarier than risks that only seem to threaten others, or polar bears. Those are just two of several psychological characteristics that make the immense threat of a rapidly changing climate feel, well, less immense. As a result, we’re not doing as much about it as we need to…and that is making the risk worse!

In addition to those ‘fear factors’, our opinions are also powerfully influenced by the opinions of others around us. We want our views to agree with those of the tribe(s) with which we most closely identify (this is known as Cultural Cognition), because for social animals like us, being a member of the tribe in good standing is important for survival. And the tribal view is usually based on what the tribe’s thought leaders say; think of Rush Limbaugh’s proud-to-profess-we’re-not-using-our-own-brain-to-figure-things-out “Ditto Heads.” Our brain is comfortable defaulting to these views because it takes less time and energy, literally fewer calories, than looking everything up and thinking it through ourselves.

Consider how this has affected the climate change issue. When it surfaced into broader public consciousness in the late 1980s, climate change was not the polarized issue it has become. But some people, companies, and politicians with vested interests that would suffer from climate change mitigation (think the fossil fuel industry) purposefully reframed the issue in terms of government interference in the free market and doubt about the science (hey, it worked for cigarettes), and members of the conservative or libertarian tribes adopted those views. That delayed progress, and that is making the risk worse.

Climate change is just one example of how dangerous our subjective risk perception system can be. Tribal thinking locks us into closed-minded ideological warfare, which impedes compromise and progress on any issue. (Exhibit One: the U.S. Congress.) And those risk perception factors cause us to worry more than we need to about some risks (mercury, nuclear power) and not enough about others (coal burning, climate change). As a result, we end up pushing for government protection from the threats we worry about most rather than from the ones that threaten us most… which makes the overall risk worse.

So what do we do about this innately “Inconvenient Mind,” as Andy Revkin has called it? Daniel Kahneman and Paul Slovic, pioneers in research on this sort of subjective decision-making, are pessimistic that simply recognizing that we think this way can help us think more carefully. That may be true at a personal level. But at a societal level, we can jointly recognize that this feature of human cognition is a risk all by itself. In my book (http://www.amazon.com/How-Risky-Really-Fears-Always/dp/0071629696/ref=sr_1_1?ie=UTF8&s=books&qid=1266244724&sr=1-1), I call it The Perception Gap. It causes people to refuse vaccination for their kids, to text while they drive, or to fight against adaptation to rising sea levels, because adapting would acknowledge that the sea is rising, and that would mean defeat in the tribal battle over climate change.

We can…we need to…recognize that these behaviors may feel right, but they put us all at greater risk. Once we recognize that The Perception Gap is a huge risk to society in and of itself, we can start to analyze and manage its dangers: declining vaccination rates; opposition to nuclear power that produces energy policy favoring coal (the particulate air pollution from which kills thousands of Americans a year); and delay in curbing the buildup of greenhouse gases that will warm the climate for hundreds or thousands of years. Once we accept that The Perception Gap is a risk, we can apply the same tools we already use to manage other risks, from risk communication to laws to economic incentives or disincentives, to address behaviors that feel right but create danger, for you and me, and for society.

Lots of research explains how our subconscious risk perception works. The really smart thing to do would be to heed the warning implicit in that knowledge: our risk perceptions can get us into trouble. That’s the first critical step toward more careful, objective, and healthier thinking about risk. To do any less would be really risky.