How generative AI language models are unlocking the secrets of DNA

- DNA language models can easily identify statistical patterns in DNA sequences.

- Applications range from predicting what different parts of the genome do to how genes interact with each other.

- The hallucinatory tendencies of generative AI can be repurposed to design new proteins from scratch.

Large language models (LLMs) are trained on large amounts of text, from which they learn statistical associations between letters and words in order to predict what comes next in a sentence. For instance, GPT-4, the LLM underlying the popular generative AI app ChatGPT, is trained on several petabytes (several million gigabytes) of text.

Biologists are leveraging the capability of these LLMs to shed new light on genetics by identifying statistical patterns in DNA sequences. DNA language models (also called genomic or nucleotide language models) are similarly trained on large numbers of DNA sequences.

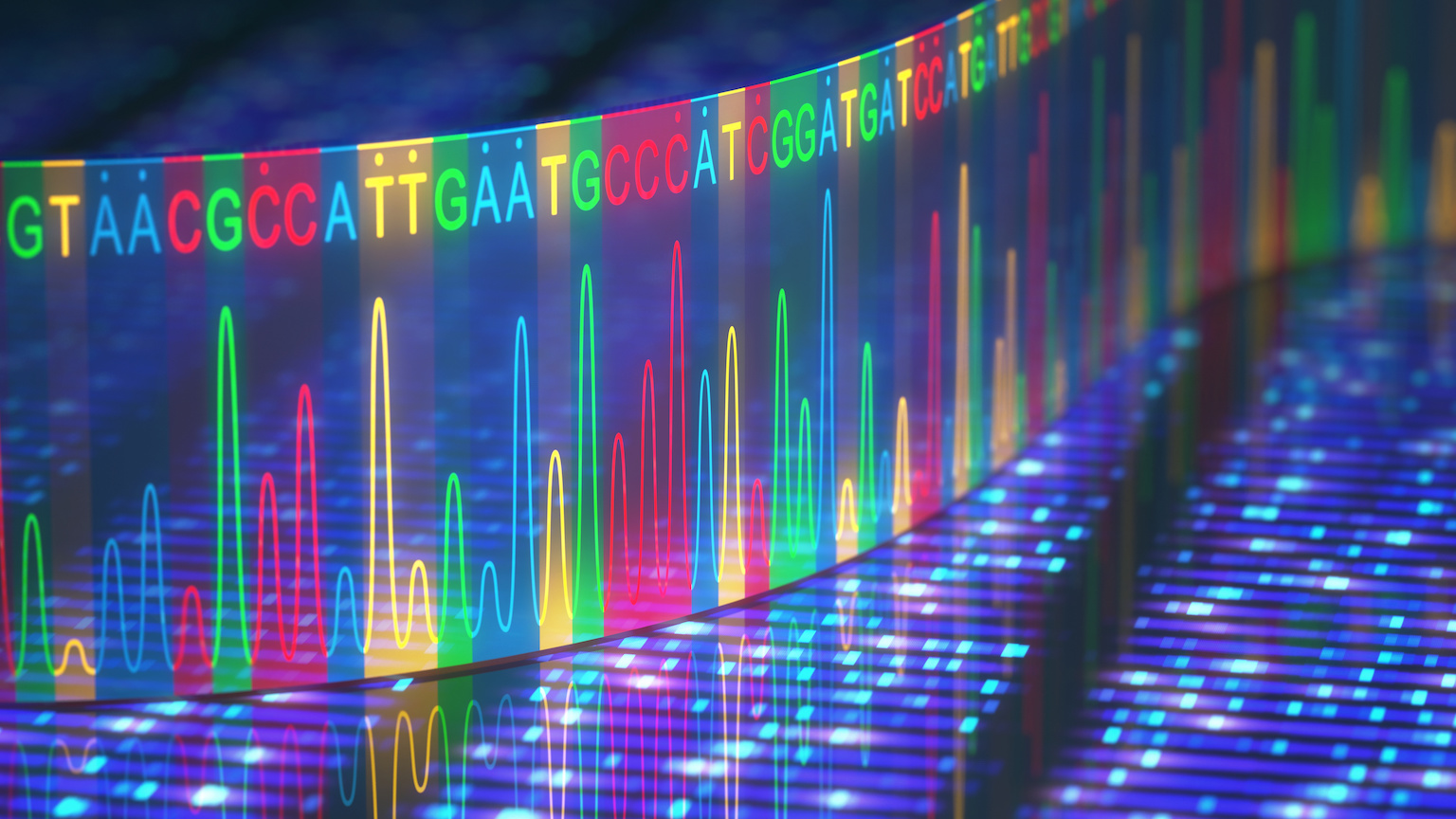

DNA as “the language of life” is an oft-repeated cliché. A genome is the entire set of DNA sequences that make up the genetic recipe for any organism. Unlike written languages, DNA has only four letters: A, C, G, and T (representing the compounds adenine, cytosine, guanine, and thymine). As simple as this genomic language might seem, we are far from uncovering its syntax. DNA language models can improve our understanding of genomic grammar one rule at a time.
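To make the idea of "statistical patterns" concrete, here is a minimal Python sketch of next-letter prediction on DNA. It is a toy stand-in, not how any real DNA language model is built: it simply counts which base tends to follow each three-letter context. The function names, the toy sequences, and the choice of a three-letter context are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

def train_next_base_counts(sequences, k=3):
    """Count which base follows each k-letter context in the training sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for i in range(len(seq) - k):
            context = seq[i:i + k]
            next_base = seq[i + k]
            counts[context][next_base] += 1
    return counts

def predict_next_base(counts, context, alphabet="ACGT", pseudocount=1.0):
    """Return a smoothed probability for each base given the preceding context."""
    seen = counts.get(context, Counter())
    total = sum(seen.values()) + pseudocount * len(alphabet)
    return {base: (seen[base] + pseudocount) / total for base in alphabet}

# Hypothetical toy "genome" fragments, invented for this example.
toy_sequences = ["ATGCGATGCGATGC", "GATGCGATGCGA"]
model = train_next_base_counts(toy_sequences, k=3)

# Which base is most likely to follow "ATG" in the toy data?
probs = predict_next_base(model, "ATG")
most_likely = max(probs, key=probs.get)
print(most_likely, probs)
```

Real DNA language models replace the counting table with a neural network that can take much longer contexts into account, but the task of predicting a letter from its surroundings is the same in spirit.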

Predictive versatility

What makes ChatGPT incredibly powerful is its adaptability to a wide range of tasks, from generating poems to copy editing an essay. DNA language models are versatile too. Their applications range from predicting what different parts of the genome do to predicting how different genes interact with each other. By learning genome features from DNA sequences, without the need for “reference genomes,” language models could also potentially open up new methods of analysis.

A model trained on the human genome, for example, was able to predict sites on RNA where proteins are likely to bind. This binding is important in “gene expression,” the process by which the information in DNA is copied into RNA and then used to make proteins. Specific proteins bind to RNA, limiting how much of it is then translated into proteins. In this way, these proteins are said to mediate gene expression. To be able to predict these interactions, the model needed to intuit not just where in the genome these interactions will take place but also how the RNA will fold, as its shape is critical to such interactions.

The generative capabilities of DNA language models also allow researchers to predict how new mutations may arise in genome sequences. For example, scientists developed a genome-scale language model to predict and reconstruct the evolution of the SARS-CoV-2 virus.

Genomic action at a distance

In recent years, biologists have realized that parts of the genome previously termed junk DNA interact with other parts of the genome in surprising ways. DNA language models offer a shortcut to learn more about these hidden interactions. With their ability to identify patterns across long stretches of DNA sequences, language models can also identify interactions between genes located on distant parts of the genome.

In a new preprint hosted on bioRxiv, scientists from the University of California, Berkeley present a DNA language model with the ability to learn genome-wide variant effects. These variants are single-letter changes to the genome that can lead to diseases or other physiological outcomes, and discovering them generally requires expensive experiments (known as genome-wide association studies).
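One common way to turn a sequence model into a variant-effect score is to hide the letter at a given position, ask the model how likely each base is there, and compare the alternate letter with the reference letter as a log-likelihood ratio. The Python sketch below illustrates that idea with a toy counting model standing in for a neural network. It is not the preprint's implementation, and every function name, the two-letter flanking window, and the toy sequences are assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict

def train_context_counts(sequences, flank=2):
    """Count which base sits between each (left, right) pair of flanking letters."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for i in range(flank, len(seq) - flank):
            context = (seq[i - flank:i], seq[i + 1:i + 1 + flank])
            counts[context][seq[i]] += 1
    return counts

def base_probabilities(counts, left, right, alphabet="ACGT", pseudocount=1.0):
    """Smoothed probability of each base at a hidden position, given its flanks."""
    seen = counts.get((left, right), Counter())
    total = sum(seen.values()) + pseudocount * len(alphabet)
    return {b: (seen[b] + pseudocount) / total for b in alphabet}

def variant_score(counts, sequence, position, alt_base, flank=2):
    """Return log(P(alt) / P(ref)) at the hidden position.

    Negative scores mean the alternate base is less expected than the
    reference base. Assumes flank <= position < len(sequence) - flank.
    """
    ref_base = sequence[position]
    left = sequence[position - flank:position]
    right = sequence[position + 1:position + 1 + flank]
    probs = base_probabilities(counts, left, right)
    return math.log(probs[alt_base] / probs[ref_base])

# Hypothetical toy training data and reference sequence.
training = ["ACGTACGTACGT", "TTACGTACGTAA"]
counts = train_context_counts(training)
reference = "ACGTACGT"

# Score a G-to-T change at position 2 (0-based) of the reference.
score = variant_score(counts, reference, position=2, alt_base="T")
print(round(score, 3))
```

In this toy setting, a strongly negative score flags a change that breaks a pattern the model has seen repeatedly, which is the intuition behind using such scores to prioritize variants without running a new experiment for each one.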

Named the Genomic Pre-trained Network (GPN), it was trained on the genomes of seven species of plants from the mustard family. Not only can GPN correctly label the different parts of these mustard genomes, it can also be adapted to identify genome variants for any species.

In another study published in Nature Machine Intelligence, scientists developed a DNA language model that could identify gene-gene interactions from single-cell data. Being able to study how genes interact with each other at single-cell resolution will reveal new insights into diseases that involve complex mechanisms. This is because it allows biologists to pin variations between individual cells to genetic factors that lead to disease development.

Hallucination becomes creativity

Language models can have problems with “hallucination” whereby an output sounds sensible but is not rooted in truth. ChatGPT, for example, could hallucinate health advice that is essentially misinformation. However, for protein design, this “creativity” makes language models a useful tool for designing completely new proteins from scratch.

Scientists are also applying language models to protein datasets in an effort to build on the success of deep learning models like AlphaFold in predicting how proteins fold. Folding is a complex process that enables a protein, which starts off as a chain of amino acids, to adopt a functional shape. Because protein sequences are derived from DNA sequences, a gene's sequence ultimately determines how its protein folds, raising the possibility that we may be able to discover everything about protein structure and function from gene sequences alone.
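The link between a DNA sequence and a protein sequence can be shown in a few lines of Python. This sketch assumes the standard genetic code, a coding-strand DNA sequence, and a reading frame that starts at the first letter; the function name and the example sequence are made up for illustration. It covers only the step from DNA letters to amino acids, not folding itself.

```python
from itertools import product

# Standard genetic code, with codons ordered by base in the order T, C, A, G.
# "*" marks a stop codon.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): amino_acid
    for codon, amino_acid in zip(product(BASES, repeat=3), AMINO_ACIDS)
}

def translate(dna):
    """Translate a coding-strand DNA sequence into one-letter amino acid codes.

    Reads three letters at a time from the start of the sequence and stops
    at the first stop codon or when fewer than three letters remain.
    """
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = CODON_TABLE[dna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)

# Hypothetical nine-letter gene fragment: start codon, one codon, stop codon.
peptide = translate("ATGGCCTAA")
print(peptide)
```

Going from the amino acid chain to a folded three-dimensional shape is the much harder problem that models like AlphaFold and protein language models try to address.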

Meanwhile, biologists will continue to use DNA language models to extract more and better insights from the large amounts of genome data available to us, across the full range and diversity of life on Earth.