Fear of AI is this century’s overpopulation scare

- Doom prophets tend to dominate the news. Paul Ehrlich became famous by claiming that Earth was overpopulated and humans would suffer mass starvations. They never happened.

- Today, we are witnessing similar hyperbolic predictions of doom by those who fear artificial intelligence.

- All doom prophets should be required to answer this question: "At what specific date in the future, if the apocalypse hasn’t happened, will you finally admit to being wrong?"

“Sometime in the next 15 years, the end will come.”

Reading this in 2023, you could be forgiven for thinking these words came from an artificial intelligence (AI) alarmist like Eliezer Yudkowsky. Yudkowsky is a self-taught researcher whose predictions about intelligent machines veer into the apocalyptic. “The most likely result of building a superhumanly smart AI… is that literally everyone on Earth will die”, Yudkowsky wrote earlier this week in an article for Time. “We are not prepared. We are not on course to be prepared in any reasonable time window. There is no plan.”

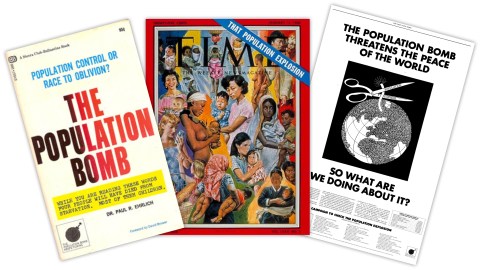

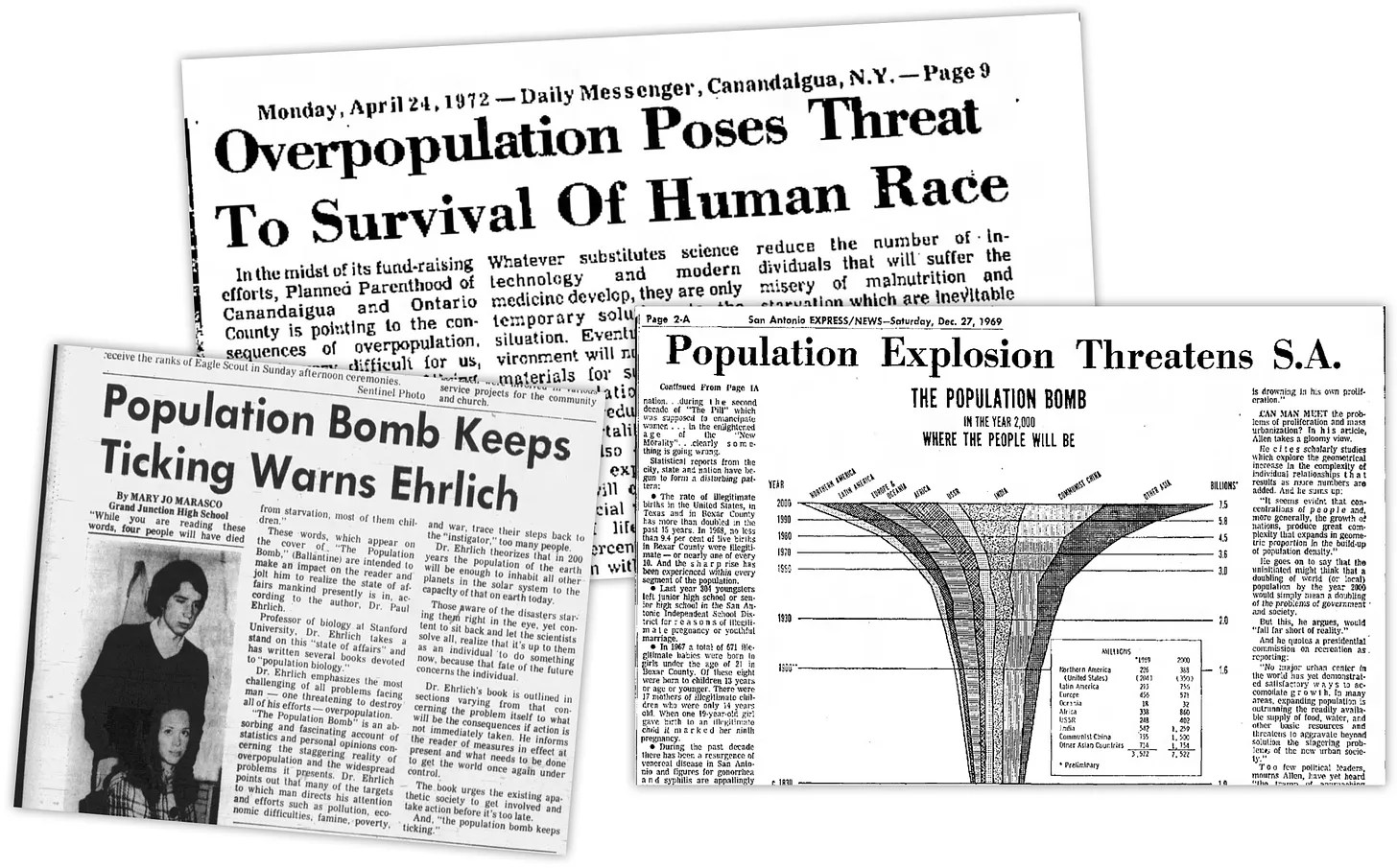

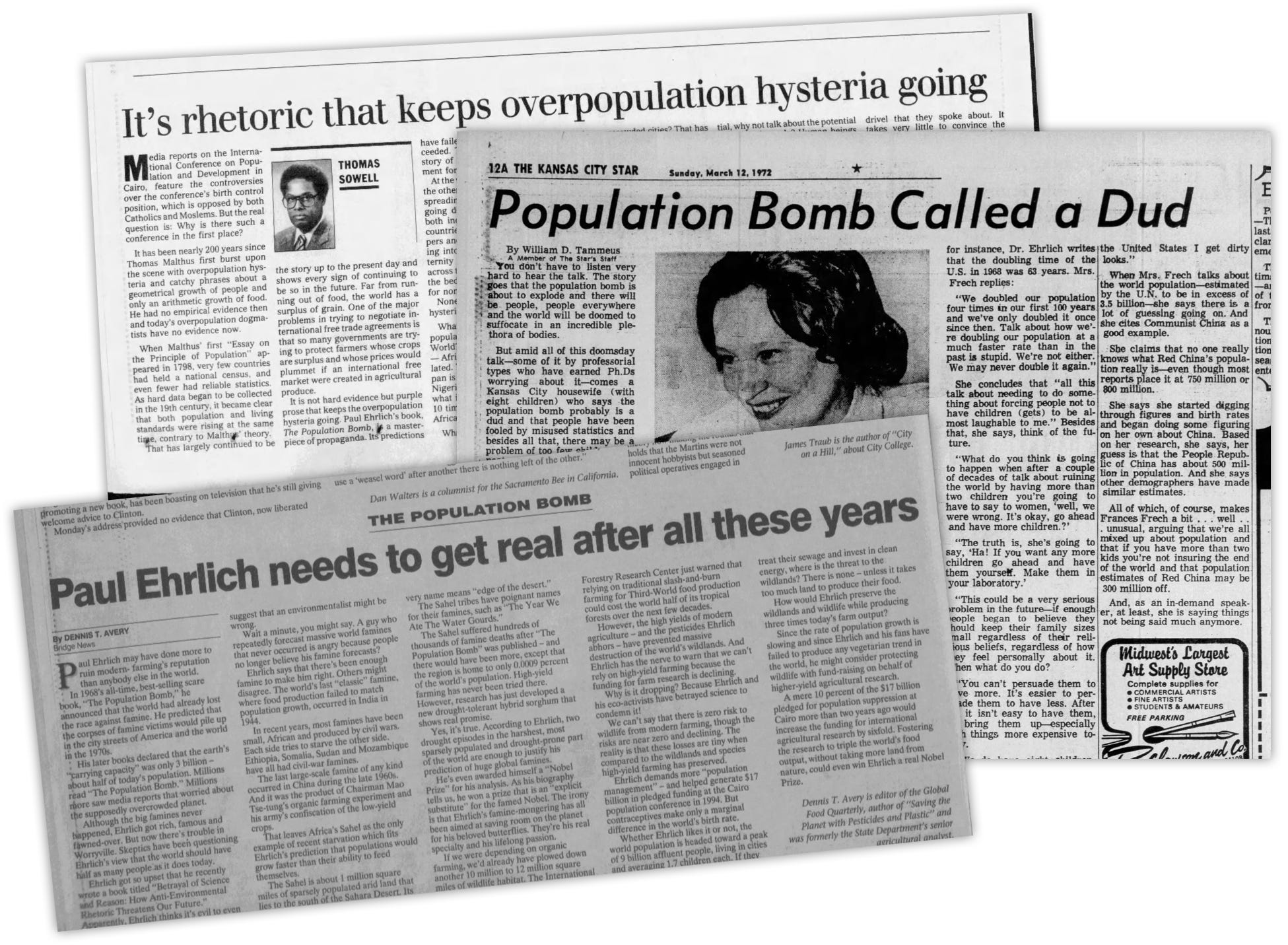

But the words “sometime in the next 15 years, the end will come” are not Yudkowsky’s. They are from a similarly gloomy prognosticator over 50 years ago: Paul Ehrlich. Ehrlich, a biologist at Stanford, authored The Population Bomb, which argued that Earth’s booming population would spell doom for humanity. (The idea that there are too many people, proven wrong decades ago, is still fashionable in some circles.)

In 1968, when Ehrlich wrote The Population Bomb, there were 3.5 billion humans. Now, there are over eight billion of us. The famines that Ehrlich predicted never happened: while world population more than doubled, agricultural efficiency tripled. People are not burdens; we can innovate out of resource scarcity.

“Most of the people who are going to die in the greatest cataclysm in the history of man have already been born.” The words are Ehrlich’s, but they easily could have come from Yudkowsky, who once declared that children conceived in 2022 have only “a fair chance” of “living to see kindergarten.”

We’ve seen this movie before

Ehrlich and Yudkowsky are both fatalists. Their prophecies are those of panic and helplessness: the forces of modernity, or capitalism, or natural selection have already spiraled out of control, and there's nothing you can do but wait for death. (Notoriously, the first sentence of The Population Bomb was, "The battle to feed all of humanity is over.") Yudkowsky reckons that for humanity to survive, he would have to be jaw-droppingly wrong. As he puts it, "I basically don't see on-model hopeful outcomes at this point."

Luckily for humanity, history will prove Yudkowsky just as wrong as Ehrlich. Nevertheless, his misplaced faith that we're all going to die has deep roots. Millions of years of evolution have primed humans to see impending doom, even when it isn't there. That's true whether the imagined end is a religious end of days, an illusory famine, or runaway AI. Yudkowsky's anxieties aren't even original: evil machines have been a plot point in sci-fi for over a century.

Apocalypse Later

Judgment Day isn’t coming; Yudkowsky is systematically wrong about the technology. Take his tried-and-true metaphor for superintelligence, chess computers. Computers, famously, have been better at chess than humans for a quarter-century. So, Yudkowsky claims, humans fighting a rogue AI system would be like “a 10-year-old trying to play chess against Stockfish 15 [a powerful chess engine]”.

Chess, however, is a bounded, asynchronous, symmetric, and easily simulated game; an AI system can get better at it simply by playing against itself millions of times. The real world is, to put it simply, not like that. (This sets aside other objections, such as Yudkowsky's assumption that there are no diminishing returns on intelligence as a system gets smarter, or his assumption that such a system is anywhere close to being built.)

AI fearmongering has consequences. Already, luminaries like Elon Musk, Steve Wozniak, and Andrew Yang have signed an open letter that pressures researchers to halt the training of powerful AI systems for six months. ("Should we risk loss of control of our civilization?" it asks, rather melodramatically.) Suffocating AI won't save humanity from doom, but it could doom people whose deaths might have been prevented by AI-assisted discovery of new drugs. Yudkowsky, though, thinks the open letter doesn't go far enough: he wants governments to "track all GPUs sold" and "destroy… rogue datacenter[s] by airstrike."

There are grim echoes here. An agonizing number of well-intentioned, logical people take Yudkowsky’s ideas seriously, at least enough to sign the open letter. Fifty years ago, a comparably agonizing number adopted Paul Ehrlich’s ideas about overpopulation, including the governments of the two largest countries on Earth. The result was forced sterilization in India and the one-child policy in China, with backing from international institutions like the World Bank.

Serious people, unserious conversation

Yudkowsky and Ehrlich are people you want to take seriously. They associate with academics, philosophers, and research scientists. Certainly, they believe themselves to be rational, scientific people. But their words are unserious. They are fearmongering, and they are now too reputationally invested in their publicly stated position to question their own beliefs.

Paul Ehrlich, now 90, continues to believe his only error was assigning the wrong dates to his predictions. Hopefully, Yudkowsky will learn from Ehrlich's blunder rather than follow in his footsteps.

"How many years do you have to not have the world end" to realize that "maybe it didn't end because that reason was wrong?" asks Stewart Brand, a former supporter of Ehrlich, as quoted in the New York Times. Anyone predicting catastrophe or collapse should have to answer this question: at what specific date in the future, if the apocalypse hasn't happened, will the doom prophet finally admit to being wrong?