What Do a Robot’s Dreams Look Like? Google Found Out

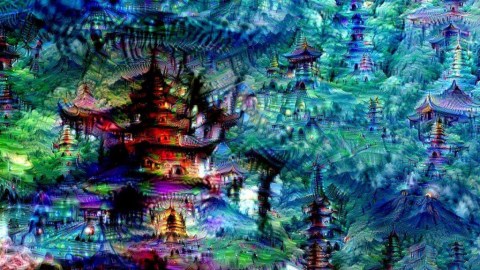

When Google asked its neural network to dream, the machine began generating some pretty wild images. They may look odd, but it’s all part of Google’s plan to solve a huge issue in machine learning: recognizing objects in images.

To be clear, Google’s software engineers didn’t literally ask a computer to dream. They asked a neural network to alter photos they fed into it, enhancing whatever patterns its layers detected. This was all part of their Deep Dream program.

The purpose was to make the network better at finding patterns, a task computers have traditionally struggled with. So, engineers started by “teaching” the neural network to recognize certain objects by giving it 1.2 million images, complete with object classifications the computer could understand.

These classifications allowed Google’s AI to learn to detect the distinguishing qualities of certain objects in an image, like a dog or a fork. But Google’s engineers wanted to go one step further, which is where Deep Dream comes in: it allowed the neural network to add those hallucinatory qualities to images.

Google wanted to make its neural network so good at detection that it could pick out objects an image doesn’t actually contain (think of seeing the outline of a dog in the clouds). Deep Dream let the computer change the images themselves, which in turn allowed Google’s AI to recognize objects the images didn’t necessarily contain. So, a photo might show a foot, but when the network examined a few pixels of it, it may have seen the outline of what looked like a dog’s nose.
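For the curious, the basic trick of changing an image so that a detector responds to it more strongly can be sketched in a few lines of Python. This is a toy illustration only, not Google’s code: the four-pixel “image,” the single hand-made `detector`, and the function names `response`, `dream_step`, and `dream` are all assumptions made up for this example. A real network has many layers and learns its detectors from training images.

```python
# Toy sketch only: a hypothetical one-layer "detector", not Google's network.

def response(detector, image):
    """How strongly the detector fires on the image (a simple dot product)."""
    return sum(w * p for w, p in zip(detector, image))


def dream_step(detector, image, step_size=0.1):
    """Nudge every pixel in the direction that raises the detector's response.

    For a dot product, the gradient of the response with respect to a pixel
    is just the matching detector weight. Pixels are clamped to [0, 1].
    """
    return [min(1.0, max(0.0, p + step_size * w))
            for w, p in zip(detector, image)]


def dream(detector, image, steps=10, step_size=0.1):
    """Repeat the nudge several times, exaggerating what the detector 'sees'."""
    for _ in range(steps):
        image = dream_step(detector, image, step_size)
    return image


# Hypothetical detector that likes bright outer pixels and dark inner ones.
detector = [1.0, -1.0, -1.0, 1.0]
# A flat gray "image" that contains no such pattern at all.
image = [0.5, 0.5, 0.5, 0.5]

before = response(detector, image)
dreamed = dream(detector, image)
after = response(detector, dreamed)

print("response before:", before)
print("dreamed image:", dreamed)
print("response after:", after)
```

The flat gray image starts with nothing for the detector to find, yet after a few nudges the pattern the detector prefers has been painted into the pixels. That is the dog-in-the-clouds effect in miniature.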

So, when researchers asked the neural network what other objects it might be able to see in an image of a mountain, tree, or plant, it came up with these interpretations:

(Photo Credit: Michael Tyka/Google)

“The techniques presented here help us understand and visualize how neural networks are able to carry out difficult classification tasks, improve network architecture, and check what the network has learned during training,” software engineers Alexander Mordvintsev and Mike Tyka, and intern Christopher Olah, wrote in a post about Deep Dream. “It also makes us wonder whether neural networks could become a tool for artists—a new way to remix visual concepts—or perhaps even shed a little light on the roots of the creative process in general.”

Just for fun, Google has opened up the tool to the public, and you can generate your own Deep Dream art here: deepdreamgenerator.com