Can AI Develop Empathy?

While we stand at the precipice of the robot revolution, luminaries in tech and science are weighing in on what impact it will likely have on the human race. Most foresee two ultimate scenarios: the robot apocalypse made palpable by the Terminator movies, or robots becoming our servants and companions, much like the Asimovian world of I, Robot. Stephen Hawking publicly warned of the first scenario, and with good reason. Without a moral backbone, AI could go horribly wrong.

Microsoft got a glimpse of this recently when its tweeting AI program, presented as a 19-year-old girl named Tay, quickly turned into something of a Nazi and a sexpot. Since Tay was programmed to learn by interacting with others on Twitter, the tech giant claims that it was quickly engaged by a cluster of internet trolls who ultimately turned the program in the wrong direction. The problem of AI going haywire has become so worrisome that a division of Google known as DeepMind is now working with researchers at Oxford to develop a “kill switch,” lest AI become a serious threat.

Basically, a computer with human-level intelligence, acting solely on its own optimization, may find that humans are in the way, either by trying to shut it down or by keeping desired resources from it. AI theorist Eliezer Yudkowsky once wrote, “The AI neither hates you nor loves you, but you are made out of atoms that it can use for something else.”

It’s not just Hollywood but some of the world’s most brilliant minds who fear a robot apocalypse.

One way to make sure robots and AI stay on the helpful side is to imbue them with empathy. That, at least, is the view of Murray Shanahan, a professor of cognitive robotics at Imperial College London. But is it actually possible to develop a feeling robot? Not only is it possible, Shanahan thinks it’s necessary.

That’s why he, Elon Musk, and Stephen Hawking together penned an open letter to the Centre for the Study of Existential Risk (CSER), promoting more research in AI to discover “potential pitfalls.” In the letter, Musk says that AI could become more dangerous than nuclear weapons, while Hawking says it could spell the end of the human race. Shanahan suggests creating artificial general intelligence (AGI) with a human-like psychological framework, or even modeling AI after our own neurological makeup.

There is time to consider direction. Experts believe AI will reach human-like intelligence anywhere from 15 to 100 years from now. 2100 seems like a good year to hang your hat on, at least according to Shanahan. Besides a dangerous AI with no moral capacity, there is fear that the current economic, social, and political climate may lead to developing AGI that is purposely dangerous. In fact, unbridled capitalism has been implicated outright. “Capitalist forces will drive incentive to produce ruthless maximization processes. With this there is the temptation to develop risky things,” Shanahan said. Governments or companies could use AGI to rig markets or elections, even develop military tech head and shoulders above anything currently being developed. Other governments would have to respond, creating an AI arms race.

AI could bring a whole new dimension to military capability.

Since there is no data to suggest AI would go bad, Shanahan and other experts say it isn’t necessary to ban this technology, but rather to put the necessary safeguards in place to make sure it stays friendly and peaceful. To do so, Shanahan says there are certain capabilities the machine will need to have, such as the ability to form relationships, to recognize and understand the emotions of others, and even to feel empathy itself. One way is to mimic the human brain artificially. Since we haven’t mapped it entirely, that doesn’t seem likely in the short term. Scientists at the Human Connectome Project (HCP) are working on mapping the brain. Some say that it may be possible to create algorithms and computational structures that act like a human brain, at least in theory.

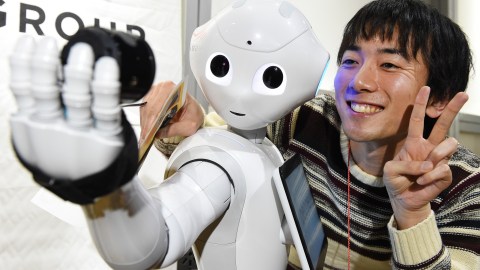

Robots that can recognize and respond to human emotion are already on the market. Nao is one. This robot, just two feet tall, is made by Aldebaran Robotics. Nao is equipped with facial recognition software. It can make eye contact and responds when you talk to it. So far, Nao has been successfully used in classrooms to help autistic children. A newer model named Pepper, from the same Japanese firm, can recognize not only words but also facial expressions and body language, and responds in kind. But here, the robot itself isn’t feeling. For that, it would need self-awareness, which requires the ability to feel what others are feeling and to think about those feelings.

We are getting close to making the outside look human. But the inside? That’s a lot more complicated.

To have real empathy is to recognize the emotions in others that you yourself have felt. For such emotions to take root, robots will have to have experiences like growing up, and succeeding and failing. They will need to feel emotions like attachment, desire, accomplishment, love, anger, worry, fear, perhaps even jealousy. Robots will need to take part in meta-cognition too, which is thinking about one’s own thinking and emotions. Science fiction writer Philip K. Dick foresaw this. In his novels, robots were simply imbued with artificial memories. A recent film, Ex Machina, raises another question: if a robot were able to show authentic emotion, would you believe it? It could be difficult to distinguish a robot that is merely very good at responding appropriately from one that actually holds the same feelings as you.

And then suppose all of these problems are solved and the questions favorably answered. Will robots be our equals or subordinates? And should they have their emotions controlled by humans? Or is this a form of organic slavery, meaning they should be able to think and feel how they wish? There are a lot of difficult issues to be worked out. At this point, our social, economic, and political structures may not be ready for such a disruptive change. Even so, Shanahan believes we have time now to begin wading through these thorny matters, putting some safeguards in place, and setting aside resources to be sure that robots become humanity’s closest friends, instead of our ultimate adversaries.
