Some of the most intelligent people at the most highly funded companies in the world can’t seem to answer this simple question: what is the danger in creating something smarter than you? Through “deep learning,” they’ve created AI so smart that it’s outsmarting the people who made it.

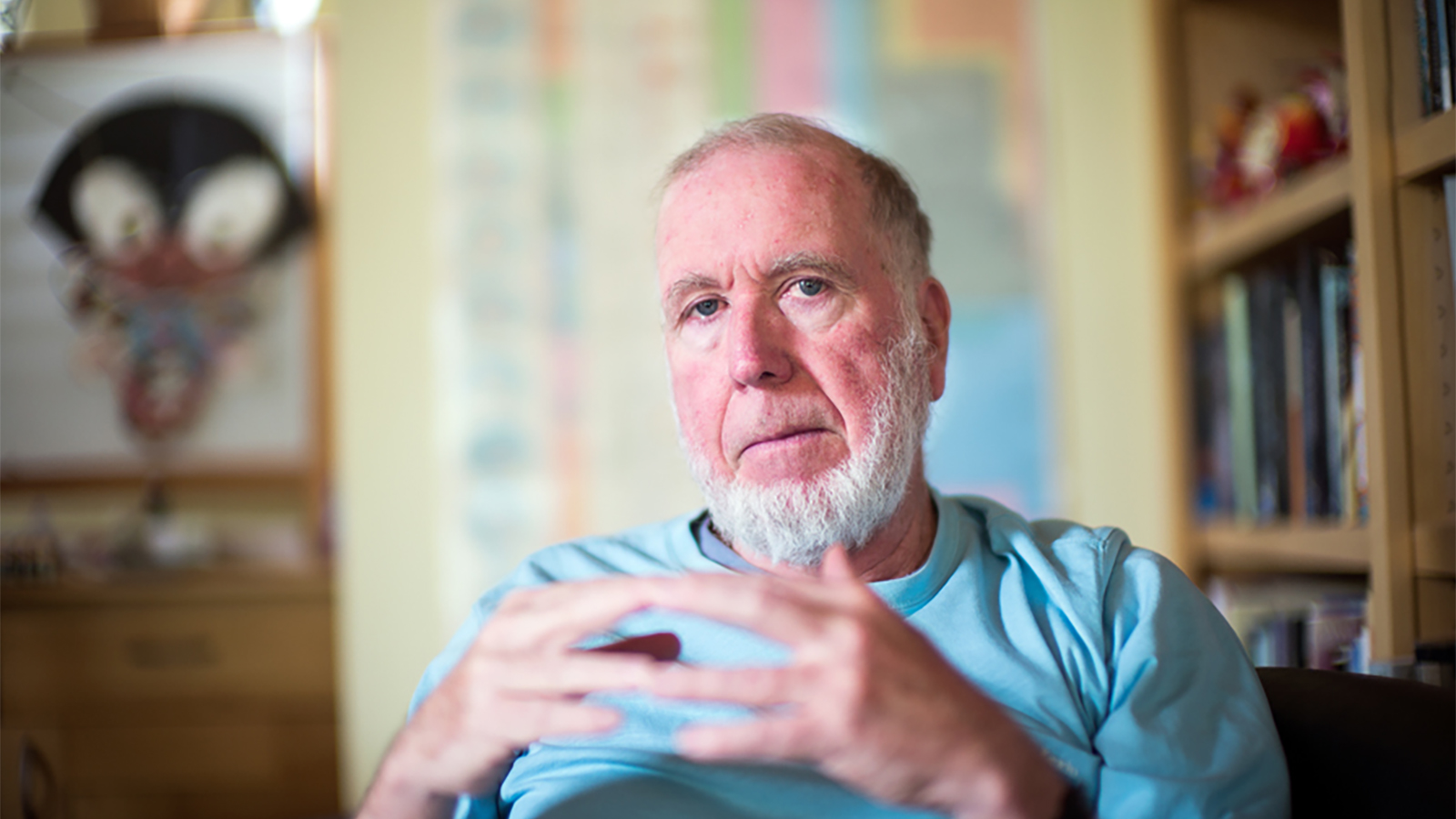

The reason is the “black box” nature of the code that AI is based on: it’s built solely to become smarter, and we have no way to regulate that knowledge. That might not seem like a terrible thing if you want to build superintelligence. But we’ve all experienced a minor glitch or bug in our everyday electronics. Now imagine that same kind of bug in a Robojudge that can sentence you to 10 years in prison with no explanation beyond “I’ve been fed data and this is what I compute”… or in the AI running a busy airport. We need regulation now, before we create something we can’t control.

Max Tegmark’s book *Life 3.0: Being Human in the Age of Artificial Intelligence* is being heralded as one of the best books on AI, period, and is a must-read if you’re interested in the subject.

Watch the video: