How does the brain process speech? We now know the answer, and it’s fascinating

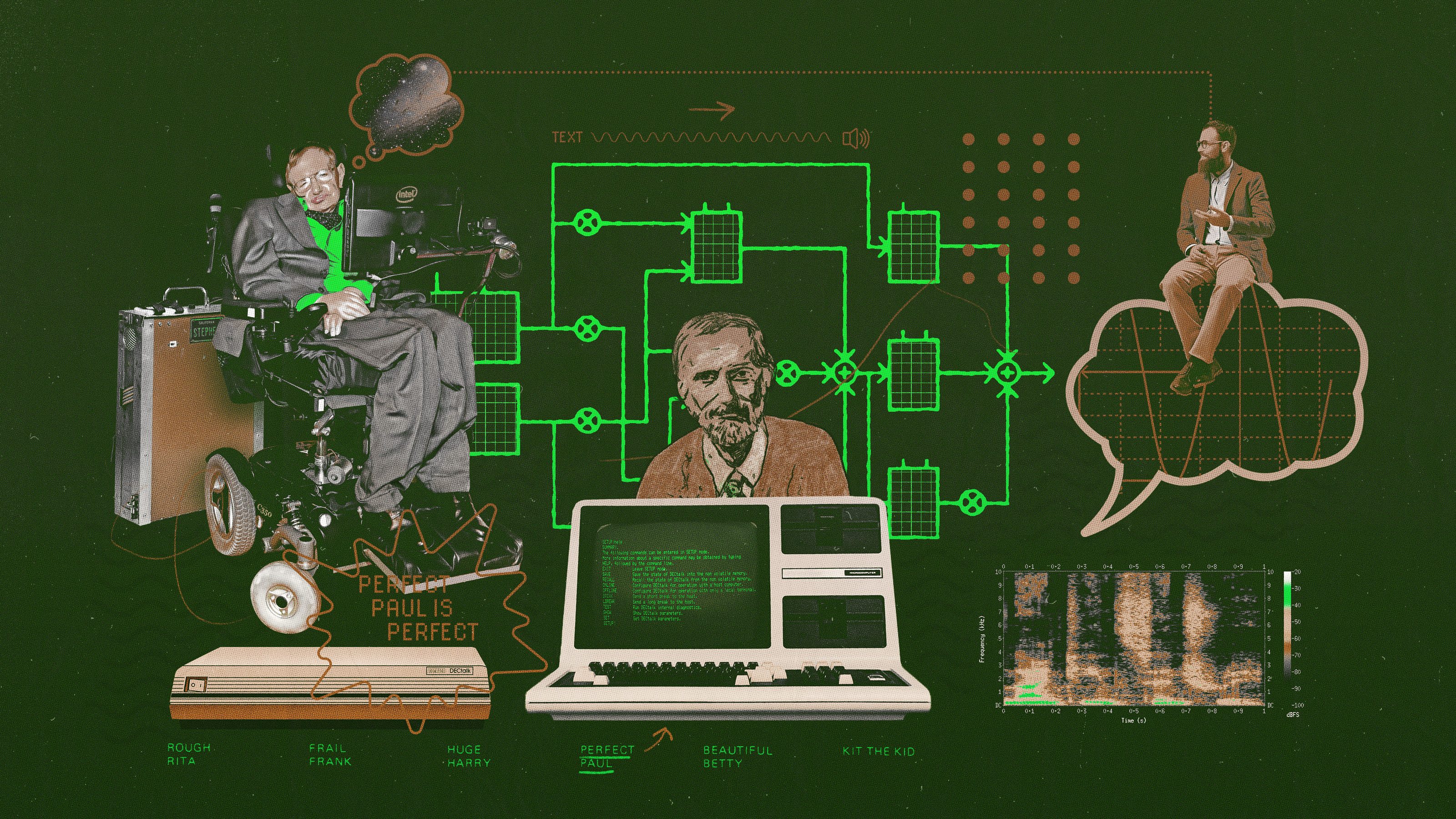

Neuroscientists have known for some time that speech is processed in the auditory cortex, along with some curious activity within the motor cortex. How this last cortex is involved, though, has been something of a mystery, until now. A new study by two NYU scientists sheds light on one of the last holdouts in a process of discovery that started over a century and a half ago. In 1861, French neurologist Pierre Paul Broca identified what would come to be known as “Broca’s area,” a region in the posterior inferior frontal gyrus.

This area is chiefly associated with producing speech, though it also plays a role in processing and comprehending it. Interestingly, a fellow scientist whom Broca had to operate on was left missing Broca’s area entirely after surgery. Yet he was still able to speak. At first he couldn’t form complex sentences, but in time he regained all of his speaking abilities. This meant another region had taken over, a sign of the brain’s neuroplasticity.

In 1874, German neurologist Carl Wernicke identified another area, this one responsible for processing heard speech, in the posterior superior temporal lobe. It’s now called Wernicke’s area. In 1965, the eminent behavioral neurologist Norman Geschwind updated the model, and the revised map of the brain is known as the Wernicke-Geschwind model.

Wernicke and Broca gained their knowledge by studying patients with damage to certain parts of the brain. In the 20th century, electrical brain stimulation began to give us an even greater understanding of the brain’s inner workings. In the mid-20th century, patients undergoing brain surgery were given weak electrical stimulation of the brain. The current allowed surgeons to avoid damaging critically important areas, but it also gave them insight into which areas controlled which functions.

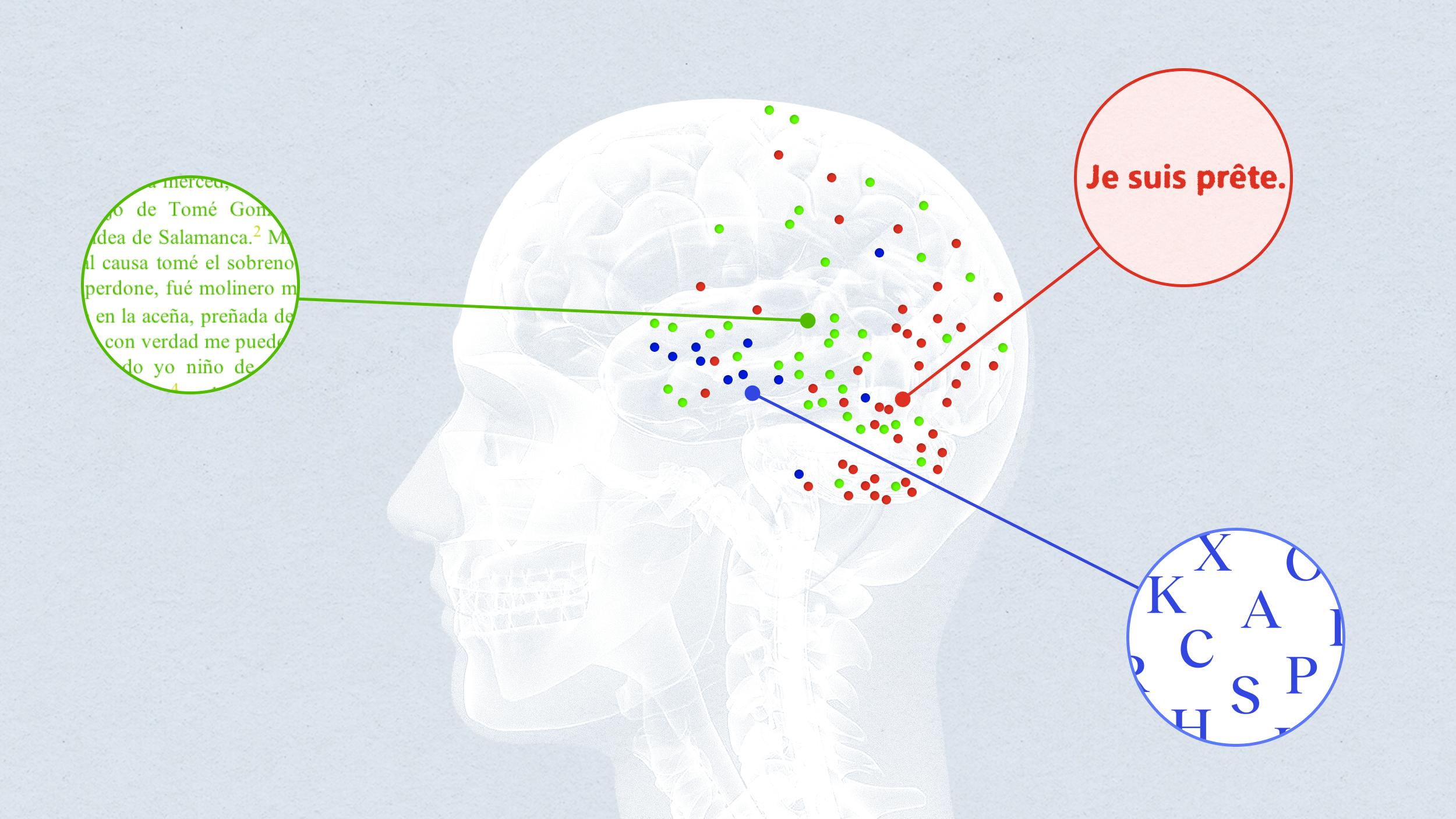

With the advent of fMRI and other scanning technologies, we could watch activity in different regions of the brain and see how language travels across them. We now know that impulses associated with language pass between Broca’s and Wernicke’s areas. Communication between the two helps us understand grammar, how words sound, and what they mean. Another region, the fusiform gyrus, helps us classify words.

People with damage to this region have trouble reading. It also allows us to pick up on metaphor and meter, as in poetry. It turns out that language processing involves far more brain regions than previously thought; every major lobe is involved. According to David Poeppel, Professor of Psychology and Neural Science at New York University, neuroscience research, after giving us so much, has grown too myopic. How perception leads to action, Poeppel says, is still unknown.

In his view, neuroscience needs an overarching theme and should borrow from other disciplines. Now, in a study recently published in the journal Science Advances, Poeppel and postdoctoral researcher M. Florencia Assaneo tackle one of the last open questions about how the brain processes language: why is the motor cortex involved? Classically, this area controls the planning and execution of movement. So what does it have to do with language?

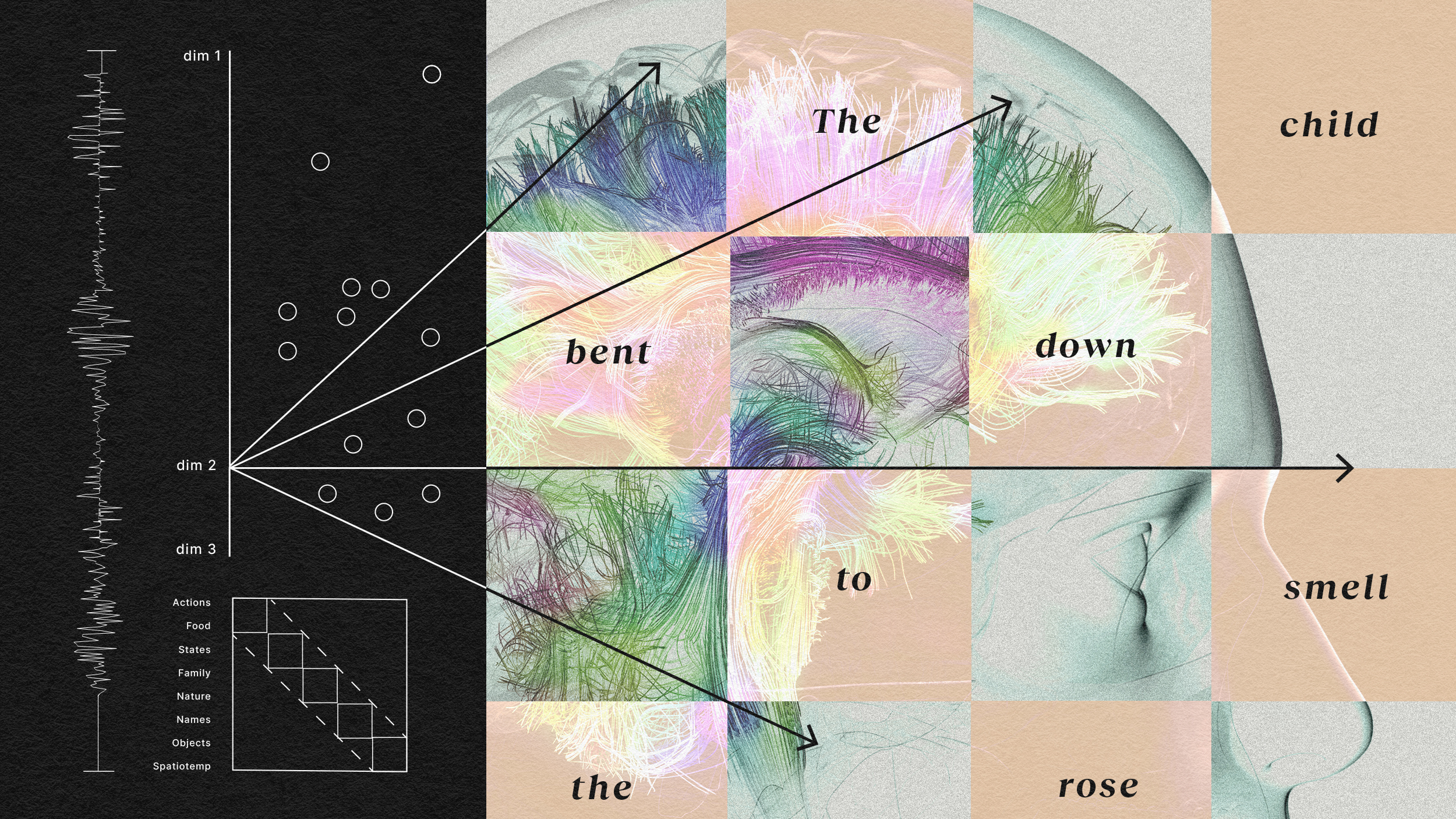

When you listen to someone talk, your ears take in sound waves and turn them into electrical impulses that travel along your nerves to various parts of the brain. According to Poeppel, “The brain waves surf on the sound waves.” The first stop is the auditory cortex, which tracks the speech “envelope,” the slow rise and fall in loudness that marks out syllables. The brain’s rhythms lock onto this envelope, effectively cutting the stream into chunks; this synchronized activity is known as an entrained signal. What has stymied researchers is that some of this signal ends up in the motor cortex.
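
To make the “envelope” idea concrete, here is a minimal Python sketch. It does not model the brain. It assumes a toy signal, a 100 Hz tone whose loudness swells and fades 4.5 times per second as a stand-in for syllables, sampled 1,000 times per second. The envelope is recovered by rectifying the signal and smoothing it over one tone cycle, and a simple Fourier scan then reports the envelope’s strongest rhythm. The function names and all parameter values are hypothetical choices for illustration.

```python
import math

FS = 1000  # samples per second (assumed for this toy example)


def syllable_like_signal(rate_hz=4.5, carrier_hz=100.0, seconds=4.0, fs=FS):
    """A tone whose loudness swells and fades rate_hz times per second."""
    n = int(seconds * fs)
    return [
        (1.0 + 0.8 * math.sin(2 * math.pi * rate_hz * i / fs))  # loudness envelope
        * math.sin(2 * math.pi * carrier_hz * i / fs)            # fast carrier tone
        for i in range(n)
    ]


def envelope(signal, window):
    """Rectify, then smooth with a circular moving average of `window` samples."""
    rectified = [abs(x) for x in signal]
    n = len(rectified)
    return [sum(rectified[(i - k) % n] for k in range(window)) / window
            for i in range(n)]


def dominant_rate(env, fs=FS, candidates=None):
    """Return the candidate frequency (Hz) with the largest spectral magnitude."""
    if candidates is None:
        candidates = [0.5 * k for k in range(2, 21)]  # 1.0 to 10.0 Hz
    mean = sum(env) / len(env)
    centered = [x - mean for x in env]  # drop the constant (0 Hz) part

    def magnitude(f):
        re = sum(x * math.cos(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        return math.hypot(re, im)

    return max(candidates, key=magnitude)


sig = syllable_like_signal()
env = envelope(sig, window=FS // 100)  # one 100 Hz carrier cycle = 10 samples
print(dominant_rate(env))  # prints 4.5
```

Real speech is far less regular than this tone, so the sketch only illustrates what “tracking the envelope” means, not how the auditory cortex actually does it.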

Of course, you move your mouth when you talk, along with many other parts of your face, so the motor cortex is essentially responsible for the physical mechanics of speech. But why does it need to be involved in interpretation? According to Assaneo, it’s almost as if the brain needs to speak the words silently to itself in order to decipher what’s been said. Such interpretations, however, are controversial. The entrained signal doesn’t always end up in the motor cortex. So do these signals start in the auditory cortex or somewhere else?

Assaneo and Poeppel started from a well-known fact: entrained signals in the auditory cortex usually run at about 4.5 hertz (cycles per second). From linguistics, they noted that this also happens to be the average rate at which syllables are spoken in almost every language on Earth. Could there be a neurophysiological link? Assaneo recruited volunteers and had them listen to strings of syllables forming nonsense words, played at rates between 2 and 7 hertz, that is, 2 to 7 syllables per second. If entrained signals simply traveled from the auditory to the motor cortex, the entrained signal should show up in the motor cortex at every rate tested.

Poeppel and Assaneo found that the entrained signal did travel from the auditory to the motor cortex, and that the two stayed synchronized at rates up to about 5 hertz. Any faster and the synchronization dropped off. A computer model indicated that the motor cortex oscillates internally at 4 to 5 hertz, the same rate at which syllables are spoken in almost every language. Poeppel credits a multidisciplinary approach to neuroscience for this discovery. Future studies will continue to examine the rhythms of the brain and how synchrony between regions allows us to decode and formulate speech.
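
The general idea of an oscillator that follows an outside rhythm only when that rhythm is close to its own can be sketched with the Adler phase equation, a textbook model of entrainment. This is a swapped-in toy model, not the computer model used in the study. It assumes a natural rate of 4.5 hertz (the figure from the article) and an arbitrary, hypothetical locking half-width of 0.75 hertz. The function returns how many cycles the phase difference advanced during the second half of the run; a value near zero means the oscillator stayed locked to the driving rhythm.

```python
import math


def cycles_slipped(f_drive_hz, f_natural_hz=4.5, lock_halfwidth_hz=0.75,
                   seconds=20.0, dt=0.001):
    """Euler-integrate the Adler equation for the phase difference psi
    (drive phase minus oscillator phase):

        dpsi/dt = 2*pi*(f_drive - f_natural) - K*sin(psi)

    with K = 2*pi*lock_halfwidth_hz, a hypothetical coupling strength.
    The oscillator phase-locks when |f_drive - f_natural| <= lock_halfwidth_hz.
    Returns how many cycles psi advanced over the second half of the run
    (positive when the drive is faster), after start-up transients fade."""
    detuning = 2 * math.pi * (f_drive_hz - f_natural_hz)
    coupling = 2 * math.pi * lock_halfwidth_hz
    steps = int(seconds / dt)
    psi = 0.0
    psi_halfway = 0.0
    for step in range(steps):
        psi += dt * (detuning - coupling * math.sin(psi))
        if step == steps // 2:
            psi_halfway = psi  # remember the phase difference at the midpoint
    return (psi - psi_halfway) / (2 * math.pi)


for rate in (4.0, 5.0, 7.0):
    print(rate, round(cycles_slipped(rate), 2))
```

In this symmetric toy, rhythms well below the natural rate slip as well, so it illustrates only why rhythms much faster than an oscillator’s own stop being followed, not the study’s full result.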
