Here’s What We Know about Tesla’s First Fatal Crash

Tesla announced that its semi-autonomous Autopilot system was involved in a deadly crash on May 7, 2016. It is the first known fatality involving a car driving under a semi-autonomous system. However, this tragedy should not hinder progress.

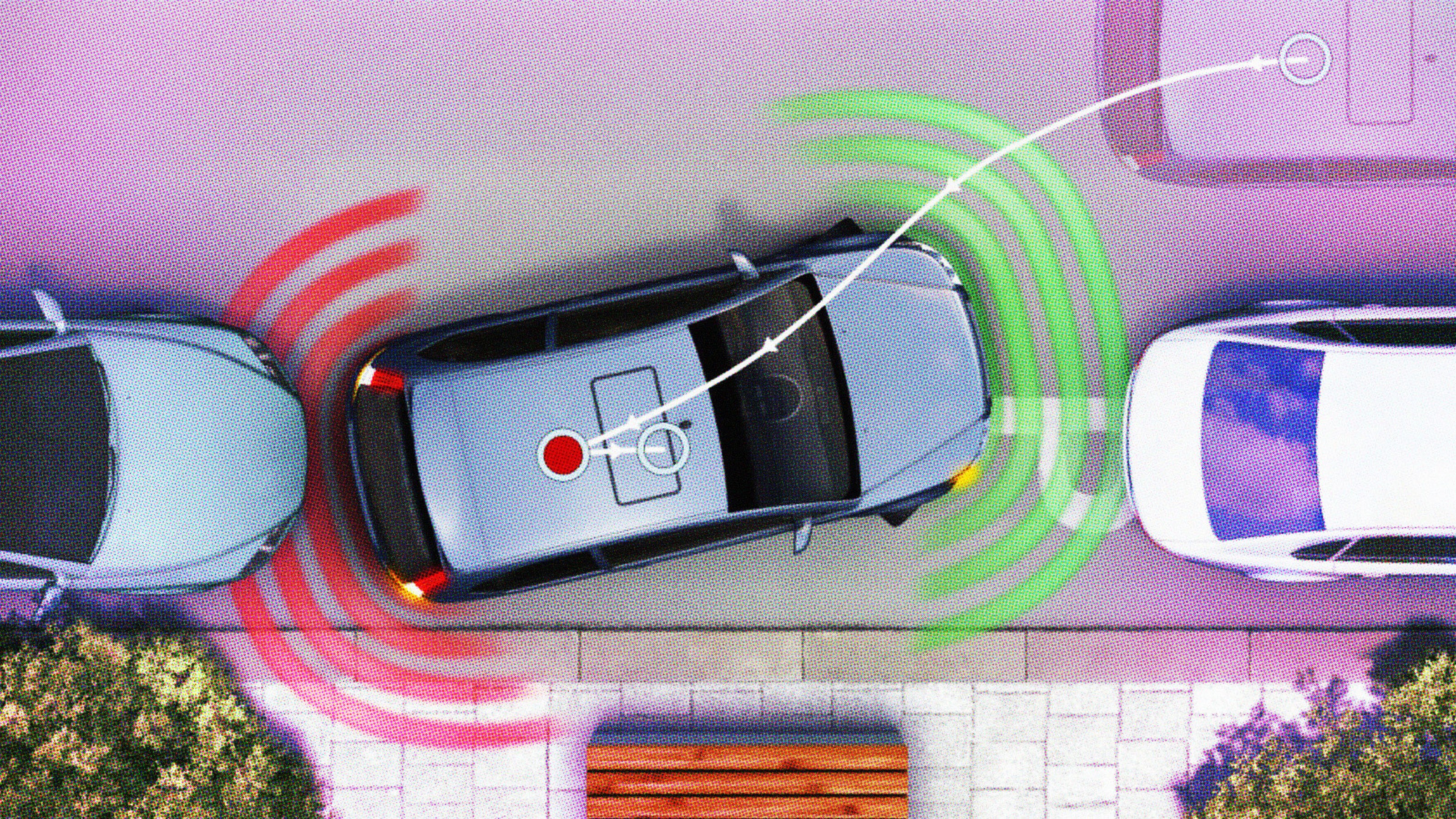

What everyone wants to know is how and why this happened. Here’s what we know. Put simply, a Tesla Model S failed to see an oncoming threat. The car was driving down a highway with Autopilot engaged while a tractor-trailer was crossing the highway, perpendicular to the Model S. Against the bright sky, the Model S’s camera could not see the white side of the tractor-trailer.

“The MobilEye is the vision sensor used by the Tesla to power the autopilot, and the failure to detect the truck in this situation is a not-unexpected result for the sensor,” Brad Templeton, who has been a consultant on Google’s team designing a driverless car, explained in a post about the incident. “It is also worth noting that the camera they use sees only red and gray intensity, it does not see all the colors, making it have an even harder time with the white truck and bright sky. The sun was not a factor, it was up high in the sky.”

Templeton also points out that had the car been equipped with LIDAR rather than a camera, the Autopilot system would have had no trouble detecting the truck in this scenario.

It is also presumed that the driver, Joshua Brown, who died in the crash, was not paying attention. Frank Baressi, the man driving the tractor-trailer, told the AP he heard a Harry Potter movie playing from Brown’s car at the time of the crash.

“The high ride height of the trailer combined with its positioning across the road and the extremely rare circumstances of the impact caused the Model S to pass under the trailer, with the bottom of the trailer impacting the windshield of the Model S,” Tesla wrote in a blog post.

Although the disclaimer shown before Autopilot is enabled describes it as an “assist feature that requires you to keep your hands on the steering wheel at all times,” many people have used the system recklessly, treating it as a substitute for driving.

Tesla is learning what Google learned years ago. Early in its testing, Google found that even with a disclaimer, people still became inattentive drivers. That is one of the reasons Google says it won’t release a semi-autonomous beta car to the public; its car has to be fully autonomous.

Joshua Brown’s death is a tragedy, and there are going to be more days like May 7. When Google’s autonomous car was involved in its own accident earlier this year, Chris Urmson, head of Google’s self-driving car project, told an audience at SXSW, “We’re going to have another day like our Valentine’s Day [accident], and we’re going to have worse days than that. I know that the net benefit will be better for society.” One bump every 1.4 million miles in a Google car is certainly better than 38,000 deaths from car accidents every year. Likewise, according to Tesla, its Autopilot system drove 130 million miles before its May fatality, while human drivers in the USA have a car fatality every 94 million miles.

The two crashes had different causes, though. Google’s crash happened because its software made an incorrect assumption; Tesla’s crash occurred because its hardware wasn’t capable of detecting the threat ahead.

Still, there are many recorded occasions in which drivers avoided a potentially fatal accident because of Tesla’s Autopilot system.

So, what happens now?

The National Highway Traffic Safety Administration (NHTSA) is conducting a preliminary evaluation to determine whether the system was working as expected when the Model S crashed. Tesla admits its Autopilot system isn’t perfect, which is why it requires the driver to remain alert: the system is an advanced form of cruise control, not an autonomous car.

This incident raises questions of fault that have not been considered before. You can’t punish a machine, yet it was the Model S’s camera that failed to detect the truck ahead. As Jerry Kaplan, who teaches a course on the impact of artificial intelligence in the Computer Science Department at Stanford University, points out, once autonomous vehicles do take over, “We’re going to need new kinds of laws that deal with the consequences of well-intentioned autonomous actions that robots take.”

***

Photo Credit: Justin Sullivan / Getty Staff