Why do people rate AI-generated faces as more trustworthy?

- We each form first impressions within seconds of seeing someone's face. We base them on various facial features and on how closely those features resemble the faces we know best.

- New research shows that not only can we no longer tell AI-generated faces from real ones, but we actually find the AI-generated faces more trustworthy.

- It's research that shows again how the borders between the digital and real worlds are porous and fading. It's possible that the difference between "artificial" and "real" will one day mean little.

In 1938, Coco Chanel wrote, “Nature gives you the face you have at twenty; life models the face you have at thirty; but the face you have at fifty, is the one you deserve.” The idea is that our faces, after enduring enough of life, will reveal something fundamental about who we are — that the lines and creases we each wear are a map to our soul.

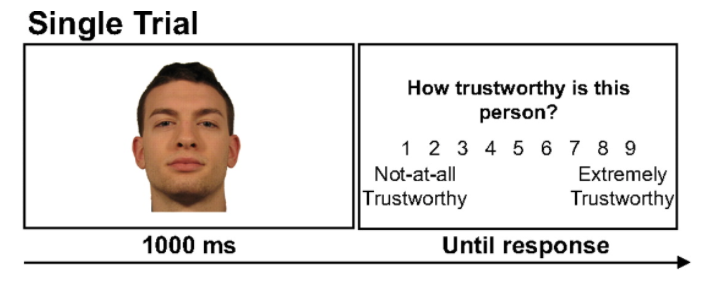

Knowingly or not, we all judge people by their faces. You form your first opinion of someone within seconds of meeting them (possibly even sooner), and research suggests that 69 percent of Americans form their first impression of someone before that person even speaks. Our faces, and how we judge them, matter, both for interpersonal relationships and for how we form mutual trust and cooperation in society.

That’s why new research suggesting that people tend to think AI-generated faces are more trustworthy than real faces is concerning. But understanding why that is, and how imaging technology might be abused in the future, requires understanding why we deem certain faces trustworthy.

A trustworthy face

So, what exactly is it that we’re judging when we look at a face? In those split seconds it takes, what features or cues does our brain take in and deem trustworthy or not?

One paper from 2013 tells us that men tend to be seen as more trustworthy when they have “a bigger mouth, a broader chin, a bigger nose, and more prominent eyebrows positioned closer to each other,” while the trustworthy “female faces tended to have a more prominent chin, a mouth with upward-pointing corners, and a shorter distance between the eyes.” Brown eyes are often seen as more trustworthy — not necessarily because of the color but rather the features (above) that are associated with brown eyes.

Predictably, our unconscious biases play a role here too. We are much more likely to call people trustworthy if they look or behave like the people we are familiar with. We warm to those who act like us. As one 2011 study found, though ethnicity features in this analysis, the reason has more to do with the fact that we trust those “of shared experiences.” In other words, if our social group is widely multiethnic, ethnicity will hardly figure at all as a marker of trustworthiness.

Unnaturally good

The research into what makes a “good” or “trustworthy” face now has very real-world implications. We live in an age of AI-generated faces. Some are entirely benign, such as in computer games or with social avatars. Others, though, are much more nefarious, as in digitally superimposed “revenge porn” or fraud. The ability to create a convincing fake face is becoming big money.

And where there’s money there’s quick progress. According to new research published in PNAS, we are at the stage now where “synthetically generated faces are not just highly photorealistic, they are nearly indistinguishable from real faces.” What study authors Nightingale and Farid found is that we now live in a world where we cannot meaningfully tell the difference between real and AI-generated faces, meaning we are steadily losing reasons to trust the veracity of video and photographic evidence or documentation. It’s a world where timestamps, edit records, and fine digital inspections will have to do the work our lumbering, fooled senses can no longer do.

I rather like my robot overlord

The other fascinating observation from the Lancaster University study was that not only can we not tell fake from real faces, but we actually trust the AI faces more. As the team noted, “…synthetically generated faces have emerged on the other side of the uncanny valley.” AI faces are no longer weird or creepy — they’re preferable to the ones we see in coffee shops and on the school run. It’s not entirely clear why this is. The Lancaster University team cite a separate paper that suggests it “may be because synthesized faces tend to look more like average faces which themselves are deemed more trustworthy.”

Regardless of the reason, what’s clear is that Nightingale and Farid’s research provides one more piece in what’s becoming a definite pattern: The artificial or digital world is no longer some clearly delineated “other space.” Rather, the borders between real and fake are becoming porous and blurred. The metaverse, and alternative realities in all their guises, are encroaching more and more into our lived experiences.

It’s no longer so easy to tell AI from human. But, as this research shows, we might actually like that better.

Jonny Thomson teaches philosophy in Oxford. He runs a popular Instagram account called Mini Philosophy (@philosophyminis). His first book is Mini Philosophy: A Small Book of Big Ideas.