Are Physicists Too Dismissive When Experiments Give Unexpected Results?

Scientific surprises are often how science advances. But more often than not, they’re just bad science.

When you’re a scientist, getting an unexpected result can be a double-edged sword. The best prevailing theories of the day can tell you what you ought to expect, but only by confronting your predictions with real-world scientific inquiry — involving experiments, measurements, and observations — can you put those theories to the test. Most commonly, your results agree with what the leading theories predict; that’s why they became the leading theories in the first place.

But every once in a while, you get a result that conflicts with your theoretical predictions. When this happens in physics, most people default to the most skeptical explanation: that there’s a problem with the experiment. Perhaps there’s an unintentional mistake, a delusional self-deception, or an outright case of deliberate fraud. But it’s also possible that something quite fantastic is afoot: we’re seeing the first signs of something new in the Universe. It’s important to remain both skeptical and open-minded at the same time, as five examples from history clearly illustrate.

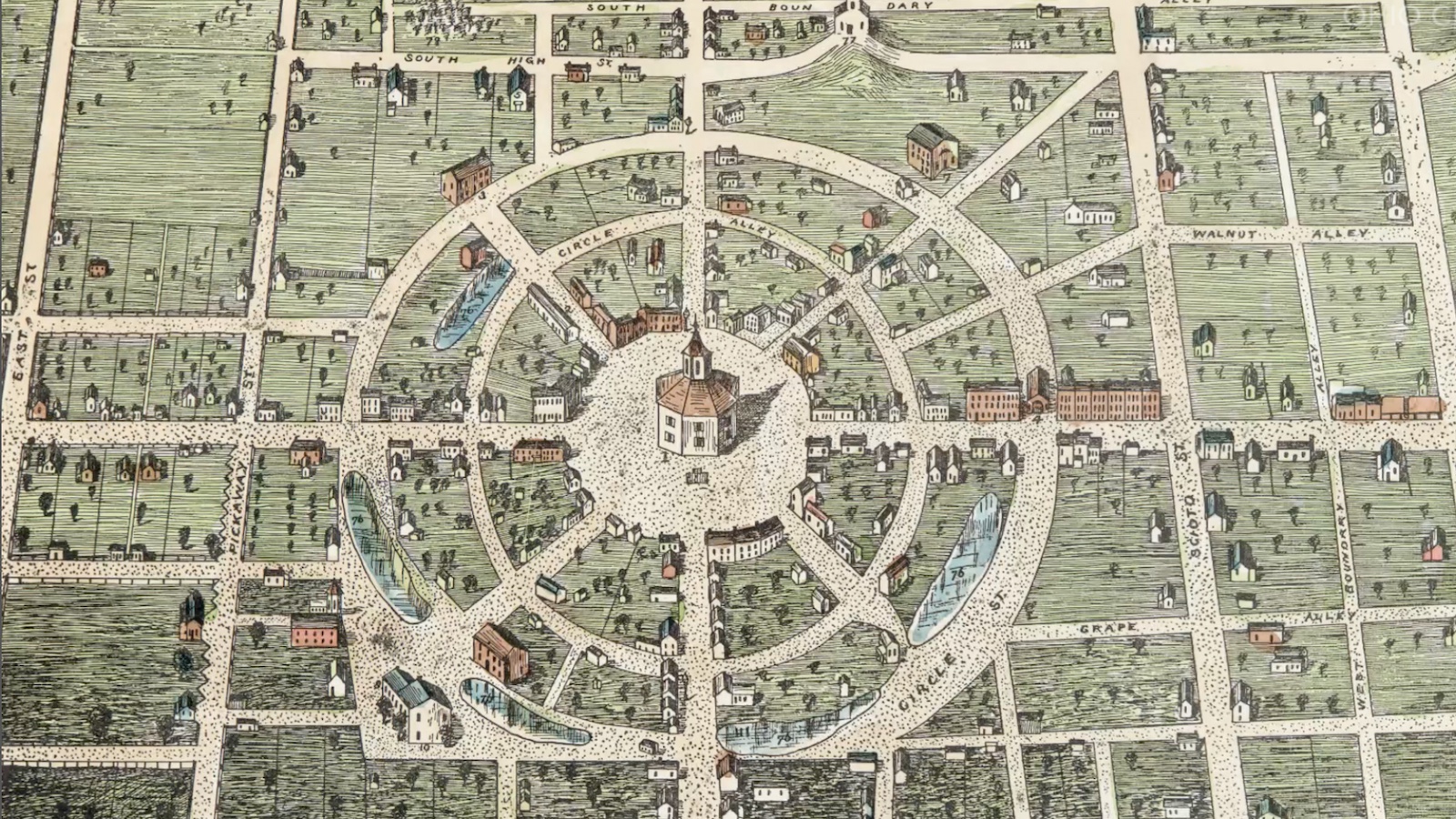

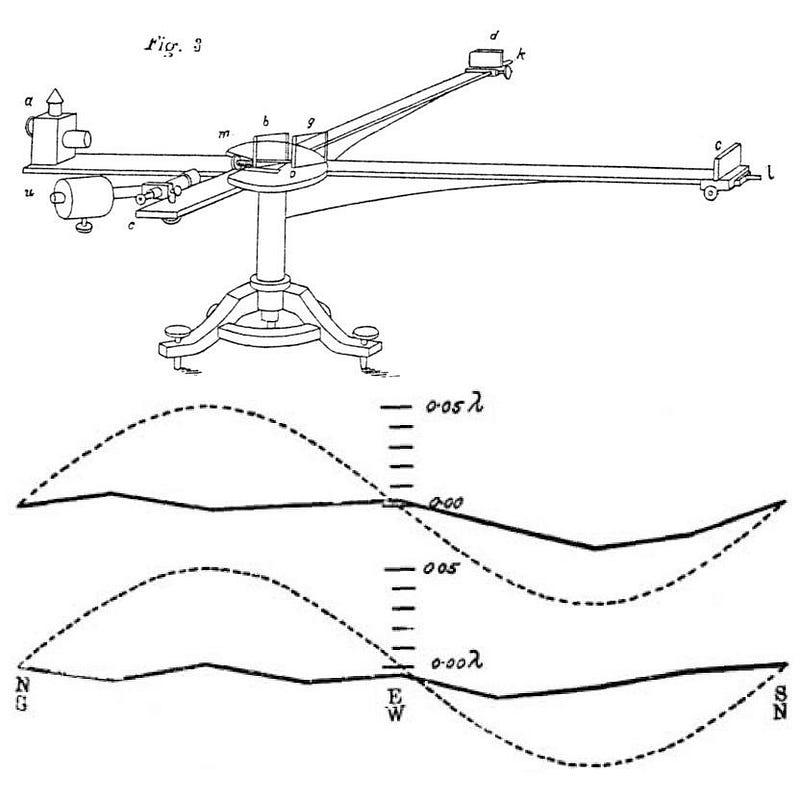

Story 1: It’s the 1880s, and scientists have measured the speed of light to very good precision: roughly 299,800 km/s, with an uncertainty of about 0.005%. That’s precise enough that, if light travels through a fixed medium that fills space, we should be able to tell whether it moves with, against, or at an angle to Earth’s motion (at 30 km/s) around the Sun.

The Michelson-Morley experiment was designed to test exactly this, anticipating that light would travel through this medium (then known as the aether) at different speeds depending on the orientation of the apparatus relative to Earth’s motion. Yet whenever the experiment was performed, it found no measurable difference, regardless of how the apparatus was oriented or at what point in Earth’s orbit the measurement was made. This unexpected result flew in the face of the leading theory of the day.
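Why could such a small speed (30 km/s is about one ten-thousandth of the speed of light) be detectable at all? The apparatus compared the travel times of light along two perpendicular arms interferometrically, so the expected signal scales as (v/c)², roughly 10⁻⁸, and shows up as a shift in interference fringes. The Python sketch below estimates that shift under the aether hypothesis; the effective arm length (~11 m) and wavelength (~550 nm) are assumed round values commonly quoted for the 1887 apparatus, not figures from this article.

```python
# Expected Michelson-Morley fringe shift under the aether hypothesis.
# Assumed, approximate inputs: effective arm length ~11 m, wavelength ~550 nm,
# Earth's orbital speed ~30 km/s.

C = 299_792_458.0      # speed of light, m/s
V_EARTH = 30_000.0     # Earth's orbital speed around the Sun, m/s
ARM_LENGTH = 11.0      # effective optical path per arm, m (assumed)
WAVELENGTH = 550e-9    # wavelength of the light used, m (assumed)


def expected_fringe_shift(arm_length: float, wavelength: float, v: float, c: float = C) -> float:
    """Fringe shift expected when the interferometer is rotated by 90 degrees.

    To lowest order in v/c, the round-trip time difference between the arms is
    (L / c) * (v / c)**2. Rotating the apparatus swaps the roles of the arms,
    doubling the effect: N = 2 * L * v**2 / (wavelength * c**2).
    """
    return 2.0 * arm_length * v**2 / (wavelength * c**2)


beta_sq = (V_EARTH / C) ** 2
shift = expected_fringe_shift(ARM_LENGTH, WAVELENGTH, V_EARTH)
print(f"(v/c)^2 = {beta_sq:.2e}")
print(f"expected shift = {shift:.2f} fringe")
```

With these assumptions the expected shift is about 0.4 of a fringe; the shift Michelson and Morley actually observed was far smaller than that.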

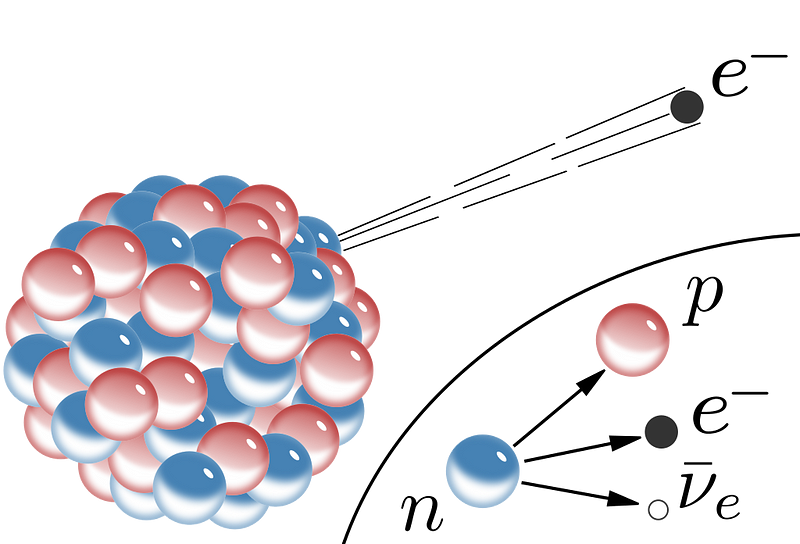

Story 2: It’s the late 1920s, and scientists have discovered three types of radioactive decay: alpha, beta, and gamma. In alpha decay, an unstable atomic nucleus emits an alpha particle (a helium-4 nucleus), and the total energy and momentum of the two “daughter” particles match those of the “parent” nucleus. In gamma decay, a gamma particle (a photon) is emitted, again conserving both energy and momentum from the initial to the final state.

But in beta decay, where a beta particle (an electron) is emitted, the daughter particles have less total energy than the parent, and momentum is not conserved. Energy and momentum are expected to be conserved in every particle interaction, so a reaction in which energy vanishes and net momentum appears out of nowhere violates two rules that had never been seen to fail in any other particle reaction, collision, or decay.
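Why the beta-decay result looked so alarming can be made concrete. If a nucleus at rest splits into exactly two bodies, energy and momentum conservation fix the emitted electron’s energy to a single value; experiments instead found electrons emerging with a continuous spread of energies. The Python sketch below uses free-neutron decay purely as a convenient illustration (it was not observed until well after this era), with rounded masses in MeV/c²; the function names are hypothetical.

```python
# Illustrative masses in MeV/c^2 (free-neutron decay is used only as an example).
M_NEUTRON = 939.565
M_PROTON = 938.272
M_ELECTRON = 0.511


def two_body_energy(parent: float, daughter: float, emitted: float) -> float:
    """Total energy of `emitted` when `parent` at rest decays into exactly two bodies.

    Energy and momentum conservation leave no freedom:
    E = (M^2 + m_emitted^2 - m_daughter^2) / (2 M).
    """
    return (parent**2 + emitted**2 - daughter**2) / (2.0 * parent)


def three_body_max_energy(parent: float, recoil: float, emitted: float, invisible: float) -> float:
    """Maximum energy of `emitted` in a three-body decay.

    The maximum occurs when the other two bodies move together as a single
    object of mass (recoil + invisible), reducing to the two-body formula.
    """
    return two_body_energy(parent, recoil + invisible, emitted)


e_two_body = two_body_energy(M_NEUTRON, M_PROTON, M_ELECTRON)
t_two_body = e_two_body - M_ELECTRON  # kinetic energy of the electron
t_max_three_body = three_body_max_energy(M_NEUTRON, M_PROTON, M_ELECTRON, 0.0) - M_ELECTRON

print(f"two-body: every electron has T = {t_two_body:.3f} MeV")
print(f"three-body (massless extra particle): T ranges from 0 up to {t_max_three_body:.3f} MeV")
```

With a third, unseen particle sharing the energy, the electron’s kinetic energy can take any value up to an endpoint, and that endpoint coincides with the two-body value when the extra particle is massless.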

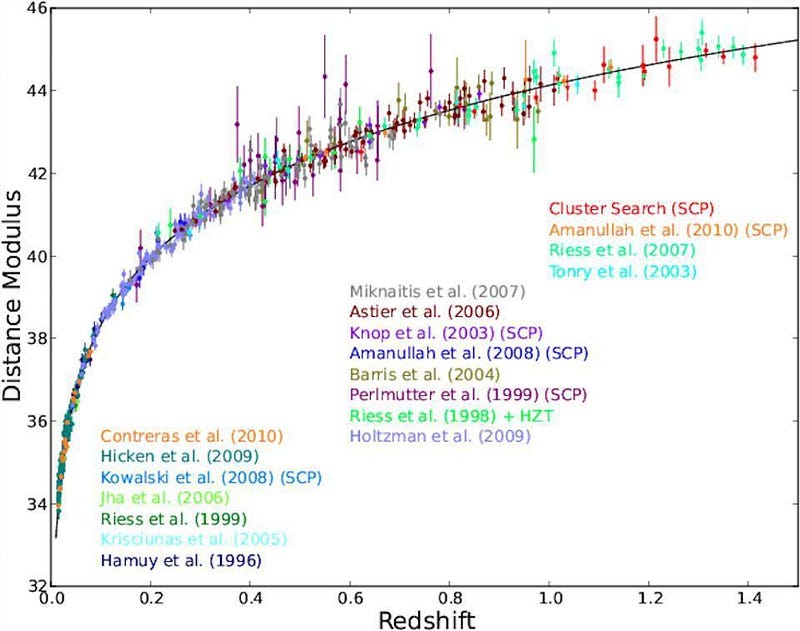

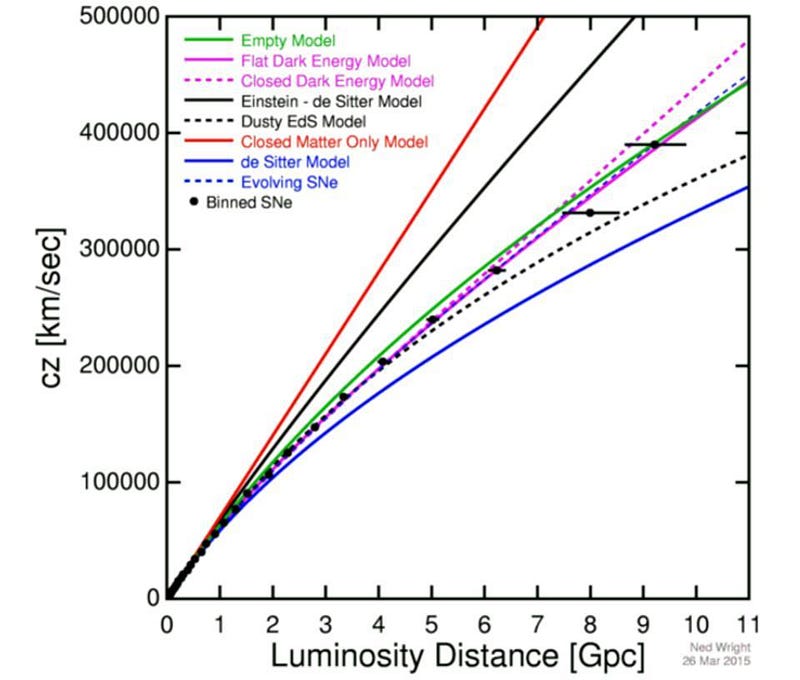

Story 3: It’s the late 1990s, and scientists are working hard to measure exactly how the Universe is expanding. Teams combining ground-based observations with space-based ones (using the relatively new Hubble Space Telescope) are using every type of distance indicator to measure two numbers:

- the Hubble constant (the expansion rate today), and

- the deceleration parameter (how gravity is slowing the Universe’s expansion).

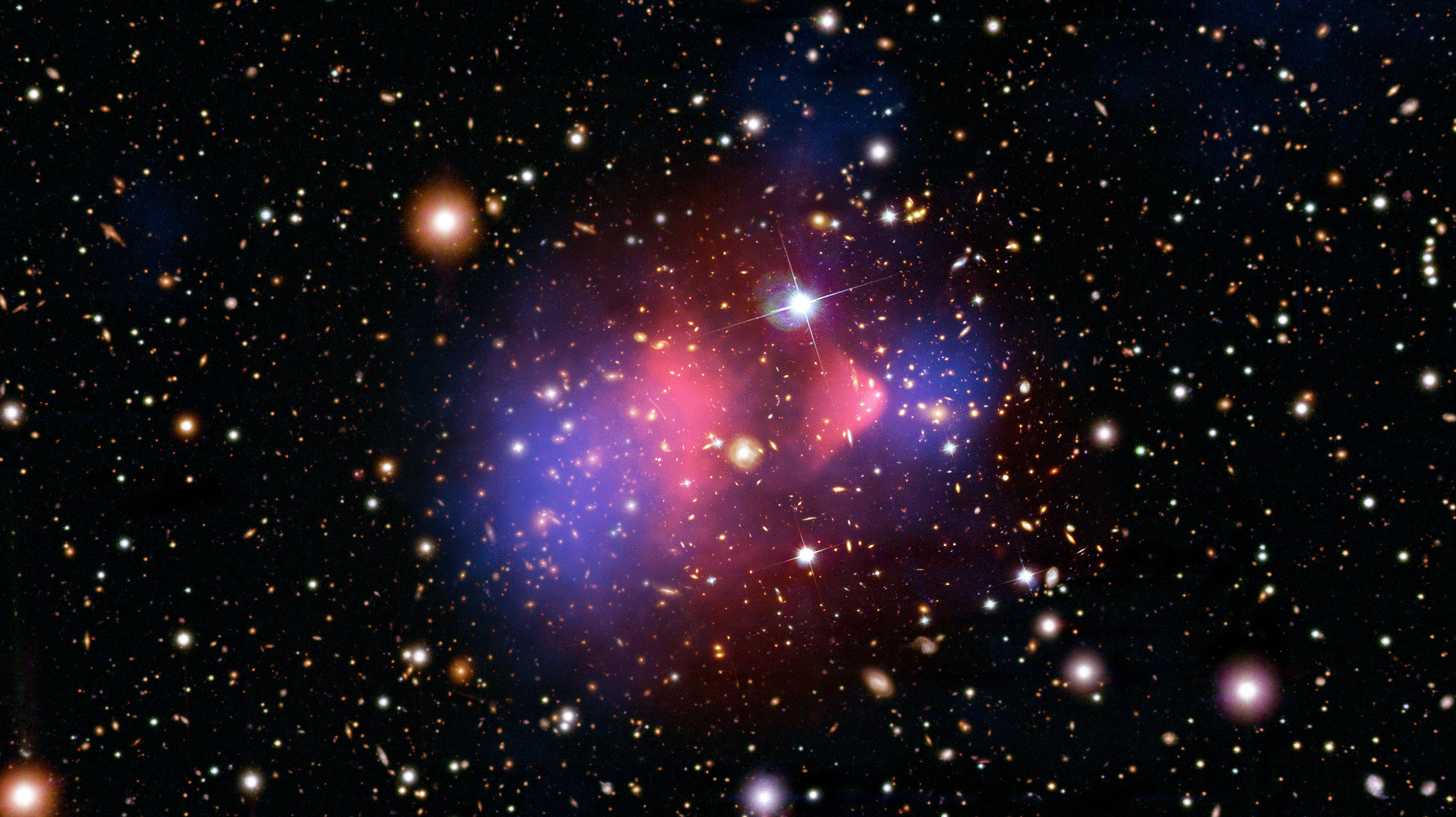

After years of carefully measuring the brightnesses and redshifts of many type Ia supernovae at large distances, scientists tentatively publish their results. From their data, they conclude that the deceleration parameter is actually negative: instead of gravity slowing the Universe’s expansion, distant galaxies appear to recede faster and faster as time goes on. In a Universe composed only of normal matter, dark matter, radiation, neutrinos, and spatial curvature, this effect is theoretically impossible.
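Why is a negative deceleration parameter impossible without something new? In standard Friedmann cosmology, the deceleration parameter today is q₀ = ½ Σᵢ Ωᵢ (1 + 3wᵢ), where Ωᵢ is each component’s fraction of the critical density and wᵢ is its ratio of pressure to energy density. Matter (w = 0) and radiation (w = 1/3) contribute positive terms and curvature (effectively w = −1/3) contributes zero, so only a component with w < −1/3 can make q₀ negative. The Python sketch below evaluates this with illustrative, assumed density values.

```python
# Deceleration parameter today: q0 = (1/2) * sum_i Omega_i * (1 + 3 * w_i).
# Omega_i: each component's share of the critical density.
# w_i: equation of state (pressure / energy density). q0 < 0 means acceleration.

W = {
    "matter": 0.0,            # normal + dark matter (and slow, massive neutrinos)
    "radiation": 1.0 / 3.0,   # photons and relativistic neutrinos
    "curvature": -1.0 / 3.0,  # spatial curvature, treated as an effective component
    "lambda": -1.0,           # cosmological constant / dark energy
}


def deceleration_parameter(omegas: dict[str, float]) -> float:
    """Return q0 for a dict mapping component name -> density parameter Omega."""
    return 0.5 * sum(omega * (1.0 + 3.0 * W[name]) for name, omega in omegas.items())


# Matter, radiation, and curvature alone (assumed values): matter and radiation
# terms are positive and the curvature term is zero, so q0 cannot go negative.
no_dark_energy = {"matter": 0.3, "radiation": 1e-4, "curvature": 0.6999}
# Illustrative flat model with dark energy (assumed round numbers).
with_dark_energy = {"matter": 0.3, "lambda": 0.7}

print(f"q0 without dark energy: {deceleration_parameter(no_dark_energy):+.3f}")
print(f"q0 with dark energy:    {deceleration_parameter(with_dark_energy):+.3f}")
```

With these illustrative inputs, the model without dark energy gives q₀ ≈ +0.15 (decelerating), while a flat model with 70% dark energy gives q₀ ≈ −0.55 (accelerating).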

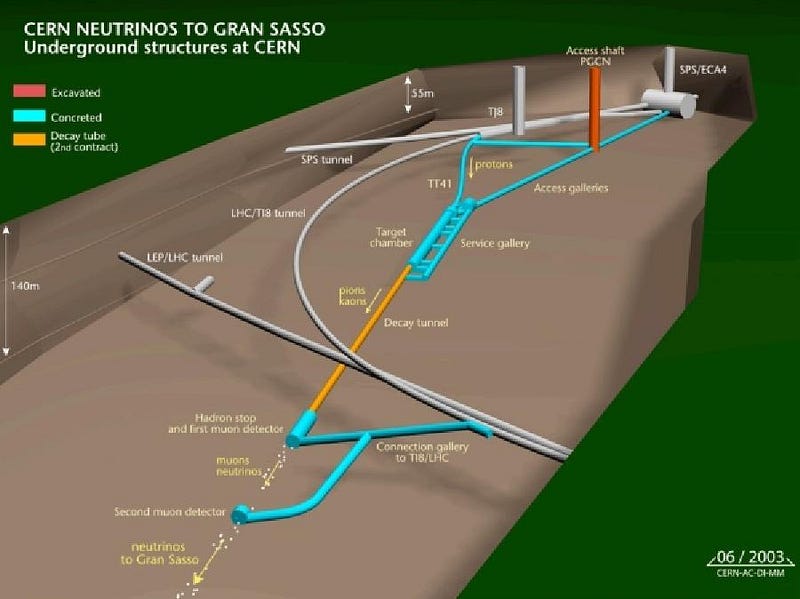

Story 4: It’s 2011, and the Large Hadron Collider has only been operating for a short while. A variety of experiments take advantage of the energetic particles produced by CERN’s accelerators, seeking to measure many different aspects of the Universe. Some of them collide particles moving in one direction with particles moving equally fast in the opposite direction; others are “fixed target” experiments, where fast-moving particles are collided with stationary ones.

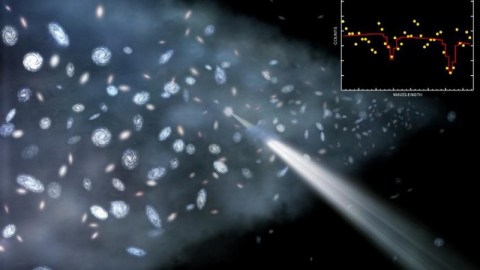

In this latter case, enormous numbers of particles are produced, all moving in the same general direction: a particle shower. Some of these particles quickly decay, producing neutrinos when they do. One experiment, located hundreds of kilometers away, measures these neutrinos and reaches a startling conclusion: they are arriving tens of nanoseconds earlier than light itself would. If all particles, including neutrinos, are limited by the speed of light, this should be theoretically impossible.
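To see how small the claimed effect was, one can convert an early arrival into a fractional speed excess. The Python sketch below assumes rounded values for the 2011 OPERA measurement (a baseline of roughly 730 km between CERN and Gran Sasso, and an early arrival of roughly 60 ns); these figures are not given in this article.

```python
C = 299_792_458.0   # speed of light, m/s

# Approximate figures for the 2011 OPERA measurement (assumed, rounded):
BASELINE_M = 730e3  # CERN-to-Gran Sasso distance, m
EARLY_S = 60e-9     # claimed early arrival relative to light, s


def fractional_speed_excess(baseline_m: float, early_s: float, c: float = C) -> float:
    """Return (v - c) / c implied by arriving `early_s` seconds ahead of light.

    Light needs t_c = L / c; the particles took t = t_c - early_s, so
    v / c = t_c / t and (v - c) / c = early_s / (t_c - early_s).
    """
    light_time = baseline_m / c
    return early_s / (light_time - early_s)


light_time = BASELINE_M / C
excess = fractional_speed_excess(BASELINE_M, EARLY_S)
print(f"light travel time = {light_time * 1e3:.3f} ms")
print(f"(v - c)/c = {excess:.2e}")
```

The result is about 2.5 parts in 100,000, which is why a timing error of a few tens of nanoseconds anywhere in the measurement chain could account for the entire effect.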

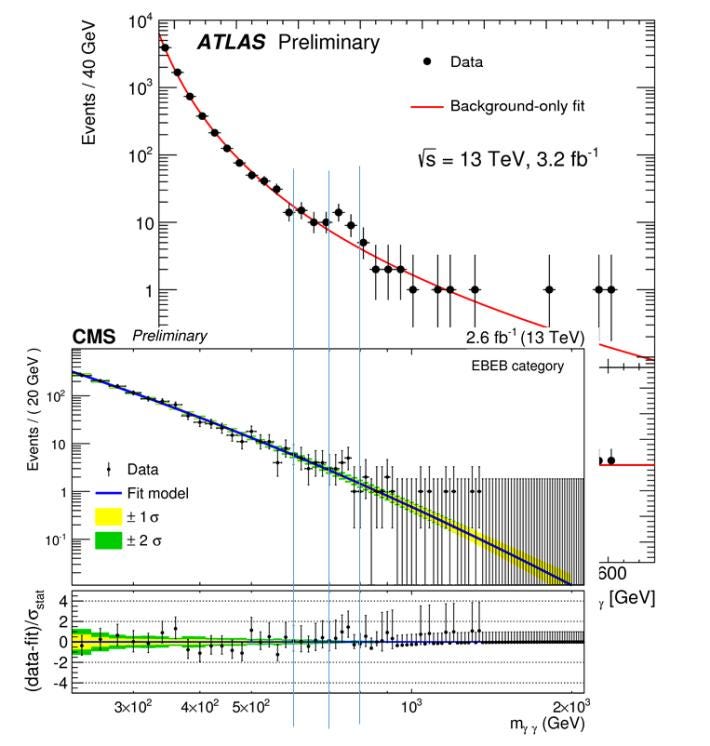

Story 5: It’s well into the 2010s, and the Large Hadron Collider has been operating for years. The full results from its first run are now in: the Higgs boson has been discovered, its theoretical prediction has been awarded a Nobel Prize, and the rest of the Standard Model has been further confirmed. With every piece of the Standard Model now firmly in place, and little else pointing to anything out of the ordinary, particle physics seems secure as-is.

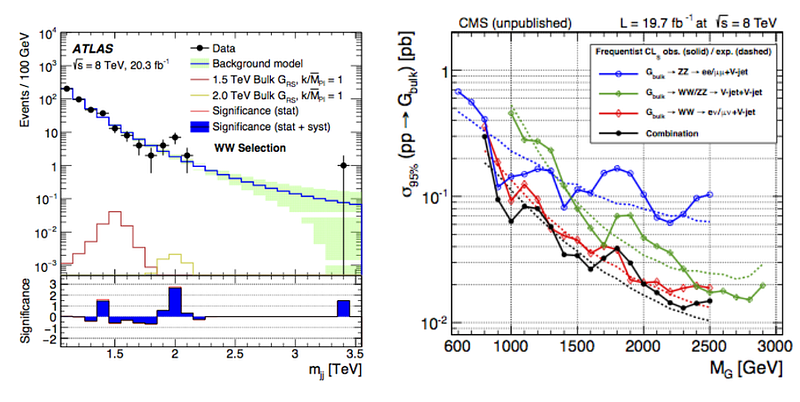

But there are a few anomalous “bumps” in the data: extra events at certain energies where the Standard Model predicts there should be none. Because two competing collaborations, CMS and ATLAS, independently record collisions at these maximal energies, a sensible cross-check is to see whether both find similar evidence, and both of them do. Whatever’s going on, it doesn’t match the predictions of our most successful theories of all time.

In each of these cases, it’s important to recognize what’s possible. In general, there are three possibilities.

- There is literally nothing to see here. What’s going on is nothing more than an error of some sort. Whether it’s because of an honest, unforeseen mistake, an erroneous setup, experimental incompetence, an act of sabotage, or a deliberate hoax or fraud perpetrated by a charlatan is irrelevant; the claimed effect is not real.

- The rules of physics, as we’ve conceived them up until now, are not what we believed them to be, and this result is a hint that our Universe differs from what we’ve thought.

- There is a new component to the Universe — something not previously included in our theoretical expectations — whose effects are showing up here, possibly for the first time.

How will we know which one is at play? The scientific process demands just one thing: that we gather more data, better data, and independent data that either confirms or refutes what’s been seen. New ideas and theories that would supersede the old ones deserve consideration, so long as they:

- reproduce the same successful results as the old theories where they work,

- explain the new results where the old theories do not, and

- make at least one new prediction, differing from the old theory’s, that can in principle be looked for and measured.

The correct first response to an unexpected result is to try to reproduce it independently, and to compare it with other, complementary results that help us interpret it in the context of the full suite of evidence.

Each one of these five historical stories had a different ending, although they all had the potential to revolutionize our understanding of the Universe. In order, here’s what happened:

- The speed of light, as further experiments demonstrated, is the same for all observers in all reference frames. No aether is necessary; instead, how things move through the Universe is governed by Einstein’s relativity, not Newton’s laws.

- Energy and momentum are both conserved after all, because a new, unseen particle is also emitted in beta decay: the neutrino, as proposed by Wolfgang Pauli in 1930. Neutrinos, a mere hypothesis for more than two decades, were finally detected directly in 1956, two years before Pauli died.

- The result was initially met with skepticism, but two independent teams continued to gather data on the expansion of the Universe. Skeptics weren’t convinced until improved data from the cosmic microwave background and large-scale structure all supported the same unexpected conclusion: the Universe also contains dark energy, which causes the observed accelerated expansion.

- The OPERA collaboration initially reported a 6.8-sigma result, but other experiments failed to confirm it. Eventually, the OPERA team found their error: a loose cable was giving an incorrect reading for the neutrinos’ time of flight. With the error fixed, the anomaly disappeared.

- Even with data from both CMS and ATLAS combined, the significance of these results (both the diboson and diphoton bumps) never crossed the 5-sigma threshold, and they appear to have been mere statistical fluctuations. With much more data now in the LHC’s coffers, these fluctuations have disappeared.
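The 5-sigma threshold is a convention about probabilities: it corresponds to roughly a 3-in-10-million chance that pure background noise fluctuates upward that far. Searching across many energies also raises the odds that some bump appears by chance somewhere, an issue known as the look-elsewhere effect. The Python sketch below converts sigma to a one-sided p-value and applies a simple trials factor; the 100 independent search windows are a hypothetical number, not taken from the CMS or ATLAS analyses.

```python
import math


def one_sided_p_value(z: float) -> float:
    """Probability that pure noise fluctuates upward by at least z standard deviations."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def global_p_value(local_p: float, n_trials: int) -> float:
    """Chance of at least one such fluctuation among n_trials independent places to look."""
    return 1.0 - (1.0 - local_p) ** n_trials


for z in (3.0, 5.0):
    print(f"{z:.0f} sigma -> p = {one_sided_p_value(z):.2e}")

# Hypothetical: 100 independent mass windows searched for a bump.
p3 = one_sided_p_value(3.0)
print(f"P(some 3-sigma bump among 100 windows) = {global_p_value(p3, 100):.2f}")
```

Under that assumption, noise alone produces at least one 3-sigma bump somewhere roughly 1 time in 8, even though any single 3-sigma fluctuation has only about a 1-in-740 chance.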

On the other hand, a large number of collaborations are too quick to seize on an anomaly and then make extraordinary claims based on that single observation. The DAMA collaboration claims to have directly detected dark matter, despite a whole slew of red flags and failed confirmation attempts. The Atomki collaboration, studying a specific nuclear decay, sees an unexpected feature in the distribution of angles in that decay and claims the existence of a new particle, the X17, with a series of unprecedented properties.

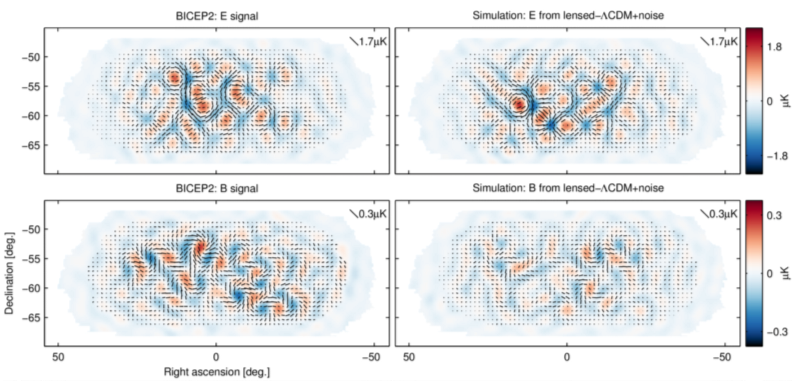

There have been claims of cold fusion, which defies the conventional rules of nuclear physics. There have been claims of reactionless, propellantless engines, which defy momentum conservation. And there have been extraordinary claims made by real physicists, such as those from the Alpha Magnetic Spectrometer or BICEP2, that had mundane, rather than extraordinary, explanations.

Whenever you do a real, bona fide experiment, it’s important that you don’t bias yourself towards getting whatever result you anticipate. You’ll want to be as responsible as possible, doing everything you can to calibrate your instruments properly and understand all of your sources of error and uncertainty, but in the end, you have to report your results honestly, regardless of what you see.

There should be no penalty to collaborations for coming up with results that aren’t borne out by later experiments; the OPERA, ATLAS, and CMS collaborations in particular did admirable jobs in releasing their data with all the appropriate caveats. When the first hints of an anomaly arrive, unless there is a particularly glaring flaw with the experiment (or the experimenters), there is no way to know whether it’s an experimental flaw, evidence for an unseen component, or the harbinger of a new set of physical laws. Only with more, better, and independent scientific data can we hope to solve the puzzle.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.