Concern trolling: the fear-based tactic that derails progress, from AI to biotech

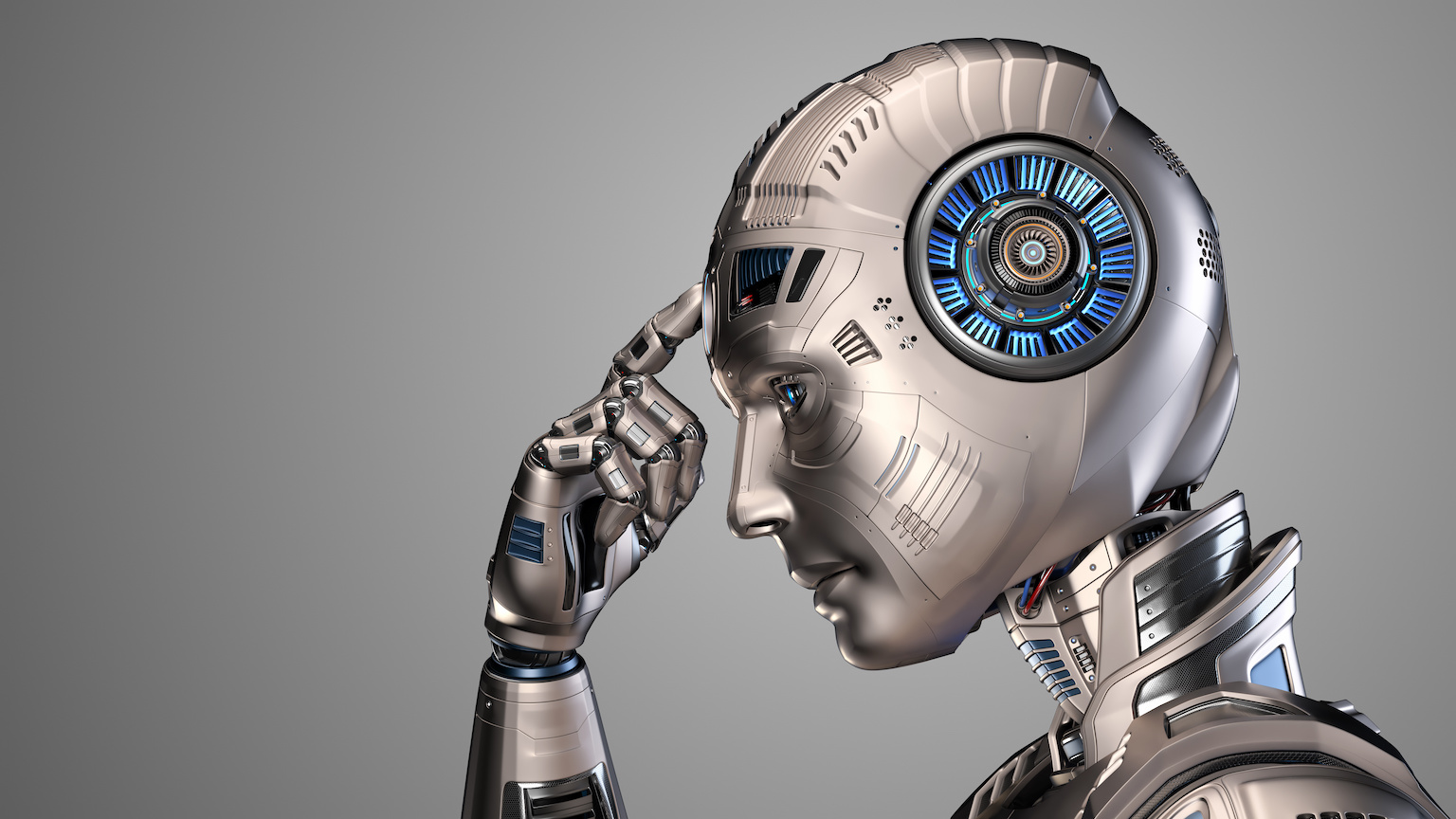

- The “Future of Life Institute” just released an open letter calling for a pause on training the most powerful AI systems, citing an existential threat to humanity.

- Yet AI has never proven to be a problem anywhere except in our imaginations and in science fiction. The real problem is the same as it’s always been: humans who undertake bad actions.

- There are some very real problems facing our world, but if we focus our energy on imagined problems instead, we’ll fail to address the real, pressing ones.

“What’s the worst that could happen?” This innocent-seeming question is normally uttered when someone is venturing into unknown territory, with the implication being that sure, there might be a chance of some sort of negative outcome, but the probability of a catastrophically bad outcome is exceedingly small. Nothing ventured, nothing gained, after all.

But there’s a bit of a dark side to asking that question, as the capacity of the human imagination to catastrophize seems to be unbounded. If the consequences we can imagine are bad enough, even if the probability of such an outcome is low, then in our heads, the risk-reward equation tips toward the “not worth it” side. This is true even when the imagined catastrophe is completely detached from reality.

Recently, the Future of Life Institute issued an open letter, signed by many prominent figures, that warned about the dangers of the unchecked development of artificial intelligence and called for an immediate pause of at least 6 months on the type of deep-learning AI research that’s brought us technologies like ChatGPT, Stable Diffusion, and DALL-E. They assert that “[p]owerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.”

Only, this is not a reasonable warning at all; it’s an unreasonable recommendation that completely skirts the true problem we need to reckon with: the unethical ways that humans leverage new technologies and novel resources of any type to exploit one another for personal gain. Diverting attention away from that problem is not an accident, but rather a deliberate strategy that’s taken off in the 21st century, particularly on the internet: concern trolling. Here’s how you can spot it, and what you should focus on instead.

Concern trolling is when someone pretends to care about an issue in order to undermine and derail any measures that would address the actual, underlying problem affecting society. Rather than solving anything, it’s a tactic that’s used to:

- trigger infighting among the groups/people who are actively working on the true problem,

- reframe the argument to try to take power away from an actual, effective movement,

- and to flood the public discussion with noise and distraction, often with tremendous success.

This is a particularly fruitful tactic when the seeds of fear are already present, as it’s extremely easy to take an already fearful (sometimes justifiably so) group and point them toward an imagined boogeyman, causing them to attack and fixate on a completely extraneous, possibly even non-existent, problem instead.

In this particular case, the open letter mentions problems that have been brewing in our society for a long time, independently of large language models (LLMs) and AI, such as:

- Should we let machines flood our information channels with propaganda and untruth?

- Should we automate away all the jobs, including the fulfilling ones?

- Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete, and replace us?

- Should we risk losing control of our civilization?

But “pausing” this type of research will do nothing to stop or slow these problems. We’ve already decided, on all four of these issues, that “we don’t care” is the answer. What we’re going to do about them remains not only unanswered, but completely independent of what the power or capabilities of AI systems actually are. Humans are going to do these things to one another anyway, and are already doing so. The issues raised, although real, are in no way related to the development of this novel technology.

In fact, the big problem of concern trolling is this: if you get enough people to agree with the concern troll and you wind up actually empowering them, what always happens is that ineffective regulations get put into place. If the concern trolls win, whatever they’re “trolling against” gets slowed down or stopped entirely, but the actual, underlying problem remains and has even less of a chance of getting legitimately addressed. If you think that “pausing” this type of artificial intelligence research will do anything to address:

- the problem of misinformation, propaganda, untruth, and disinformation flooding our media,

- the problem of job loss due to automation,

- the problem of computers making humans obsolete for ever-increasing numbers of complex tasks,

- or the problem of civilization being out of the control of reasonable humans,

I would strongly argue that you have lost your connection with reality.

All of these problems already exist, and have existed independently of any type of general or generative artificial intelligence. These are problems for human civilizations and governments to either reckon with or not. So far, we’ve abdicated those responsibilities on a global scale with an extreme laissez-faire attitude. “Pausing” AI research will only harm AI research; it will not cause a global rethink of these actual, real issues.

There is a virtually unlimited number of examples where concern trolling has been an extraordinarily effective tactic for stalling or completely preventing meaningful action, allowing very real problems to persist and worsen while simultaneously weakening legitimate attempts to address them.

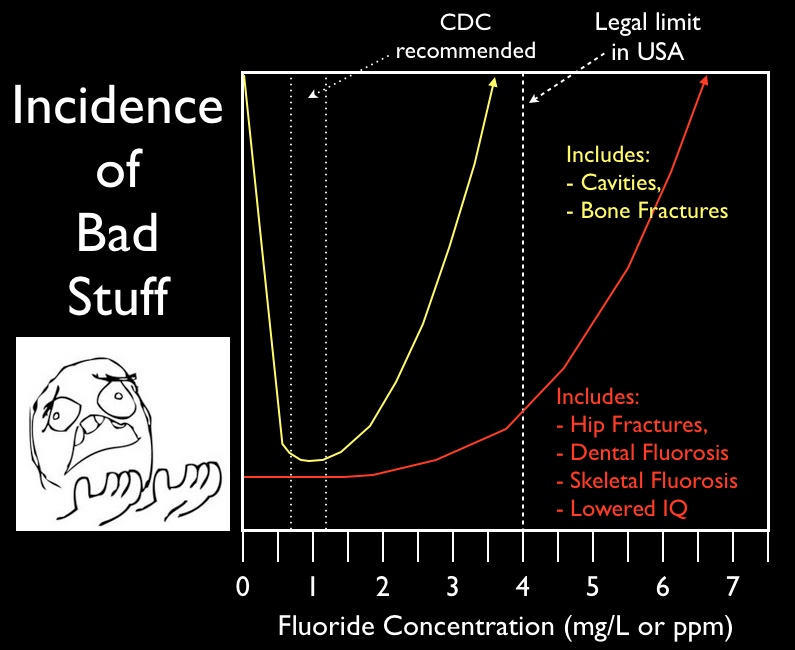

For example, the largest city in the United States without fluoridated drinking water, in a nation without universal dental care, is Portland, OR. In 2013, a new attempt was made to add fluoride to the drinking water, and the issue was put before the city’s voters in a May 2013 election.

Instead of taking a health-and-science-based look at the issue, however, anti-fluoride advocates engaged in concern trolling about the supposed health risks and detriments of fluoride. They warned that their pristine water was going to be polluted with fluoride, that people were going to be “mass medicated” without consent, and that fluoride was a poisonous chemical. All over Portland, signs began appearing that proclaimed “Vote No! Fluoridation Chemicals.” In the end, the campaign prevailed at the ballot box by roughly a 3:2 margin, despite its claims being scientifically baseless. As a result, even after adjusting for socioeconomic demographics and income, Portland’s children still have weaker enamel and 40% more cavities than children in other major cities that do have fluoridated drinking water. The underlying public health problem continues to this day and has not been addressed in any meaningful way over the past decade.

A similar story of concern trolling has prolonged an epidemic of Vitamin A deficiency in a number of countries. Millions of children across the world suffer from this deficiency, as their rice-rich diets (conventional rice contains almost no Vitamin A) do not give them nearly enough of this necessary nutrient. Without enough Vitamin A, both your risk of blindness and your susceptibility to disease increase tremendously; according to Helen Keller International, 670,000 children die and 350,000 children go blind each year from Vitamin A deficiency.

To combat this, scientists developed Golden Rice. By adding extra genes to the rice plant (a genetic modification), they caused the plant to produce beta-carotene in its edible grains; humans then convert that beta-carotene into Vitamin A. As little as 2 cups a day of Golden Rice would provide 100% of a full-grown adult’s Vitamin A needs.

In 2013 in the Philippines, where 1.7 million children suffer from Vitamin A deficiency, a group of 400 protestors, driven by a concern-trolling campaign that targeted all genetic modifications (i.e., anti-GMO propaganda), destroyed an entire field of Golden Rice. The field was a trial plot whose results were about to be submitted for evaluation; the protestors were confident that they had saved their nation from the horrors of GMOs. Instead, the destruction pushed the start of commercial production from 2014, as previously planned, back to 2021, leading to an extra 7 years of unnecessary, preventable instances of blindness, disease, and death among Filipino children.

Concern trolling is also often used to gain fame, power, influence, and prominence for particular individuals. All you have to do is:

- take something that people don’t know very much about,

- feed whatever fears they may have (particularly if it’s about an imagined harm to children),

- conflate those fears with an actual, real problem (especially a health problem),

and you’re practically guaranteed to gather an army of followers to your side.

Health quacks like Mehmet Oz and Joe Mercola have built large empires by doing exactly this with a variety of issues, while getting rich off of selling snake-oil-esque “miracle cures” that have no scientific backing: the same kinds of products sold by GOOP and Alex Jones. Robert F. Kennedy Jr. has done this by sowing doubts about the safety and efficacy of vaccines and promoting a supposed link to autism, helping spark a widespread (but scientifically unfounded) anti-vaccine movement. Dr. Joel Moskowitz has done this by blaming a variety of cancers on radio-frequency (e.g., WiFi) radiation, despite absolutely no evidence supporting that link.

The trouble is that people do face genuine problems: developmental disorders, diseases, environmental pollution, neurodivergences, etc. But instead of focusing efforts on understanding and combating these problems, concern trolling diverts attention and money toward the imagined, phantasmal causes promoted by these charlatans.

But perhaps the biggest examples of concern trolling occur when politically motivated actors spread misinformation to sow doubt and dissent around issues that have very robust scientific and health answers. Think about it. There’s an overwhelming body of evidence that:

- global warming is real, happening, and driven by human-caused emissions of CO2,

- the #1 threat to transgender individuals’ mental health is unsupportive parents,

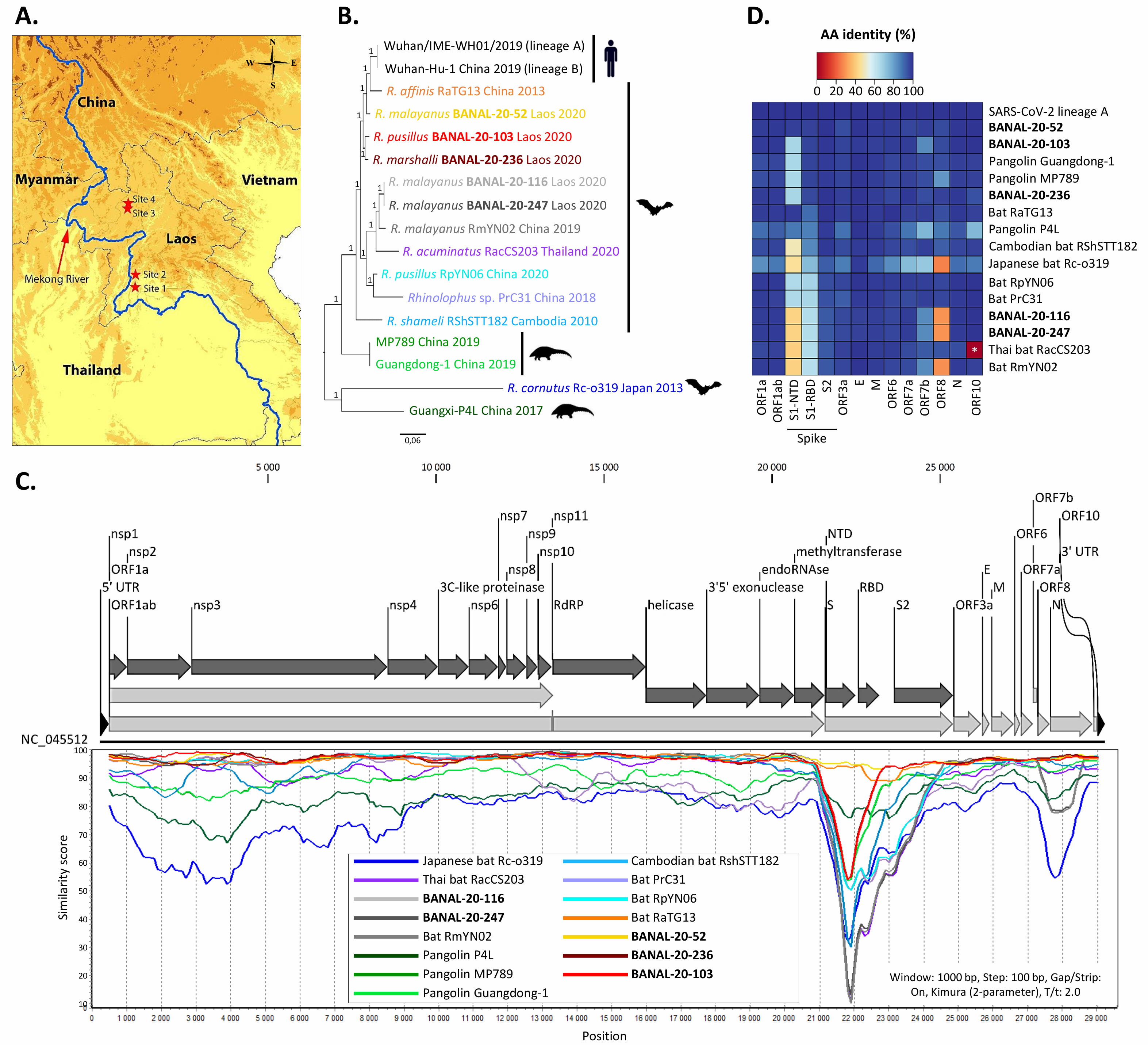

- pandemics are caused by human-animal contact, and particularly by human encroachment on previously wild spaces,

- and that gun violence is the leading cause of death among children in the United States.

But much of the public discourse around these issues is dominated by concern trolls. Many bad actors deliberately sow doubt among the general public about climate change, where none exists from a scientific perspective. By framing the healthcare rights of transgender individuals as a partisan or ideological debate, concern trolls are preventing some individuals from receiving proper, perhaps life-saving, healthcare. An obsession with the conspiracy theory that the COVID pandemic was created from a genetically modified virus in Wuhan has hindered pandemic prevention efforts.

Additionally, many claim to want to “protect children” by whipping up fear over imaginary issues, banning books, regulating who can read to children, and preventing members of the LGBTQ community from interacting with kids. All this while the actual problems of child sex abuse and gun violence against children go unaddressed.

There’s a particular irony, returning to the letter penned by the Future of Life Institute, in that its most prominent signatory was Elon Musk. Since the first 2019 launch of brighter-than-anticipated Starlink satellites, astronomers have asked for a pause in their launch and deployment until the satellites meet the necessary specifications, as they’ve unleashed a new, unprecedented type of pollution into the night sky, with quantifiably negative global effects on all of humanity. Musk’s Tesla has been found, in court, to have committed several labor law violations, and has been caught staging, and then untruthfully advertising, an autonomous self-driving capability that does not exist, directly leading to accidents and deaths.

Remember, the crux of the argument that the open letter made, specifically about AI but generally applicable to any technology, was that technological development and applications should occur:

“only once we are confident that their effects will be positive and their risks will be manageable”

When someone openly advocates one line of reasoning for regulations that apply to their own technologies and another, contradictory one for the technologies of others, the hypocrisy should rattle you to your core.

The sober truth is that there are real societal concerns with the arrival of any new technology, but this is not because the technology itself is problematic. Instead, the problem is how human beings will use it, unethically, for their own gain and to harm others. If we genuinely hope to build a better society, those are the guardrails that need to be set up: against those who would use technology for the exploitation of others, not against the technology itself.

The tactic of concern trolling — and the diversion of resources from where they should be going to truly address an issue — is yet another front that the war against misinformation must be fought on. By tapping into our fears and outrage, this strategy often amplifies societal schisms and foists further burdens onto those who advocate for optimal science-based and health-based outcomes. From AI to biotech to disease prevention to global warming to children’s health and everywhere else, we must combat this divisive behavior and fight it with the one weapon that’s always at our disposal: the honest and complete truth.