Could Artificial Intelligence Solve The Problems Einstein Couldn’t?

With huge suites of data, we can extract plenty of signals where we know to look for them. Everything else? That’s where AI comes in.

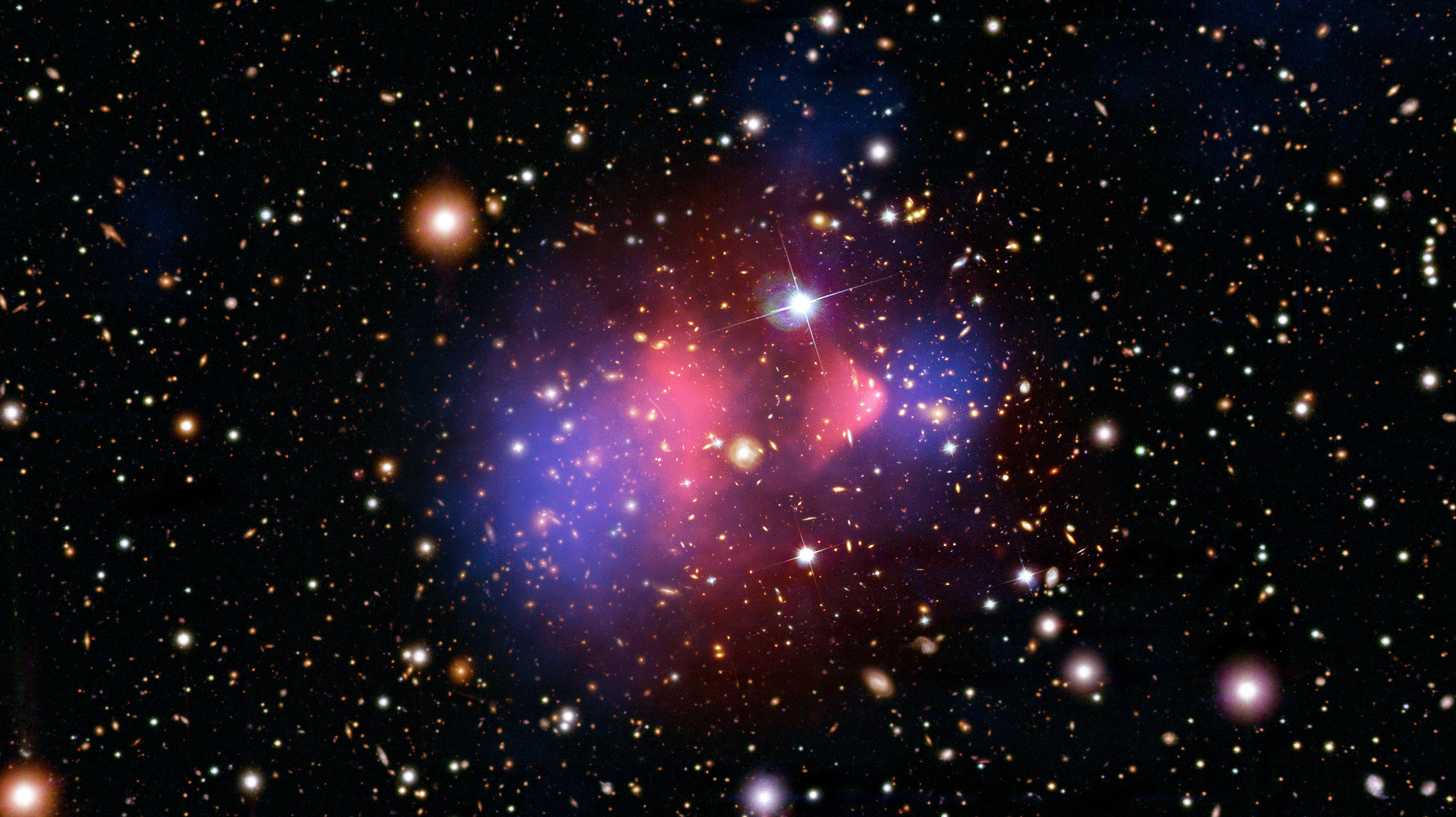

At the dawn of the 20th century, there were a number of crises in physics. Radiating objects like stars emitted a finite, well-defined amount of energy at every wavelength, defying the best predictions of the day. Newton’s laws of motion broke down when objects approached the speed of light. And where gravitational fields were the strongest, such as close to our Sun, everything from planetary motion to the bending of starlight differed from the predictions of the universal law of gravitation. Scientists responded by developing quantum mechanics and General Relativity, which revolutionized our understanding of the Universe. Names like Planck, Einstein, Heisenberg, Schrodinger, Dirac and more are often hailed as the greatest scientific geniuses of our times as a result. No doubt, they solved some incredibly complex problems, and did so brilliantly. But artificial intelligence, quite possibly, could have done even better.

Einstein wouldn’t have liked that idea. When he was reflecting on his greatest discoveries in a book he wrote in 1931, he stated the following:

At times I feel certain I am right while not knowing the reason. When the eclipse of 1919 confirmed my intuition, I was not in the least surprised. In fact, I would have been astonished had it turned out otherwise. Imagination is more important than knowledge. For knowledge is limited, whereas imagination embraces the entire world, stimulating progress, giving birth to evolution. It is, strictly speaking, a real factor in scientific research.

The human brain seems to be wired to conceive of cross-disciplinary connections that enable us to advance in critical ways at critical moments. Scientific breakthroughs, those “eureka” moments, have always seemed to be a uniquely human achievement. But perhaps that’s not true any longer.

There are some things that machines are better at than humans. The number of calculations a machine can perform, along with the speed at which it can perform them, vastly outstrips what even the most brilliant geniuses among us can do. Computer programs have, for many decades now, been able to solve computationally intensive problems that humans cannot. This isn’t just true for brute-force problems like calculating ever-more digits of π, but for sophisticated ones that were once unimaginable for a machine.

No top human has defeated a top computer program at chess in over a decade. The technology that Apple’s Siri is based on grew out of a DARPA-funded computer project that could have predicted 9/11. Fully-autonomous vehicles are on track to replace human-driven cars within the next generation. In every case, problems that were once thought best-tackled by a human mind are giving way to an AI that can do the job better.

Artificial intelligence isn’t simply a computer program where you tell it what to do and it does it; instead, it can learn and adapt on its own. It can, at an advanced enough level, write its own code. We see applications of this coming to life in the fields of computer vision, language translation, and autonomous robots. But in the sciences, we see new papers coming out all the time taking advantage of what artificial intelligence can do that humans can’t. Planets that are lurking in the NASA Kepler data have been found by AI where human-programmed techniques have missed them. Machine learning has constrained new physics that may have arisen at the Large Hadron Collider. It makes one wonder whether there are any problems at all that are uniquely suited to humans, or whether artificial intelligence can eventually solve anything as well as or better than a human can.

That very idea is the topic of this evening’s public lecture at the Perimeter Institute, given by Roger Melko. In many ways, the quantum wavefunction that describes any physical scenario, from a free particle to an atom to an ion to a molecule to a many-body system, is the ultimate “big data” problem. AI has already been successfully applied to a number of scientific problems and fields, including error-correction algorithms, tensor networks, searching for new states of quantum matter, and so on. Where AI can be applied, it not only changes and magnifies what we can learn from the data, it also delivers novel predictions, oftentimes that no human mind has ever thought of. If AI can spark new ideas in fundamental research, is that any different from Einstein’s definition of “imagination” and how valuable it is?

If we had AI a century ago, it’s arguable that computers, not humans, could have developed quantum mechanics and relativity. What will we learn with the advent of artificial intelligence and machine learning in the 21st century?

Tune in at 7:00 PM ET/4:00 PM PT today to catch Roger Melko’s public lecture, and follow my live-blog of the event in real-time below!

(Live blog begins 10 minutes before showtime; all times PDT; ask your questions on Twitter using #piLIVE.)

3:51 PM: So, here’s a big question that I hope gets answered: what is it that requires a human today, and what will necessarily require a human in the future? Right now, most of what AI/machine learning can discover is based on how successfully the algorithms are programmed. But could a machine devise a force law on its own? Could it have come up with relativity or the Schrodinger equation? And if not, could it do so in the future? I can’t wait to find out!

3:55 PM: This precipitates an existential crisis for many. At what point will we become too reliant on machines, and lose the skills that made us the successful species that we are? If a machine discovers the answers to these fundamental questions, will we be able to understand them when they arrive? And, if/when machines can learn to start asking these questions and answering them for themselves, will we even serve a scientific purpose? Something big to think about, I suppose!

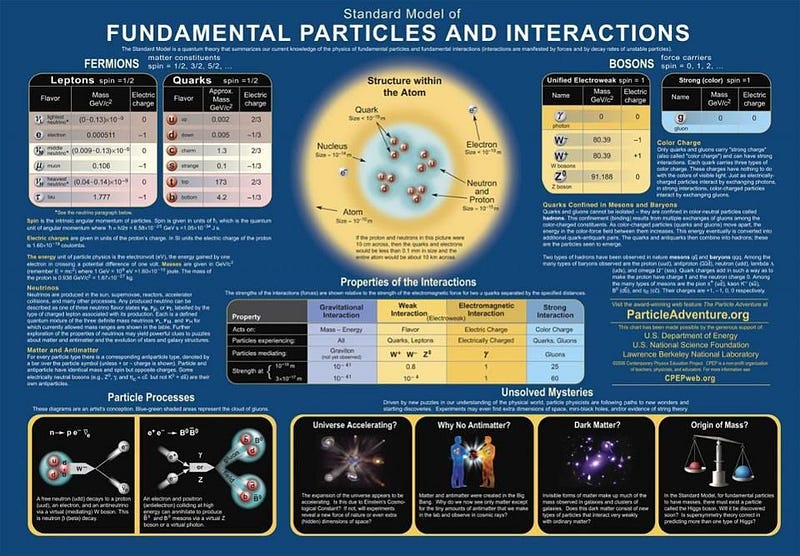

4:00 PM: Isn’t it weird how as complex as nature is, we think it’s governed by just a few fundamental forces, particles and interactions, and yet they all add up to form this incredibly complex set of structures? Let’s see what this frontier is like… and what Roger has to tell us about what artificial intelligence has to tell us about the complexity frontier!

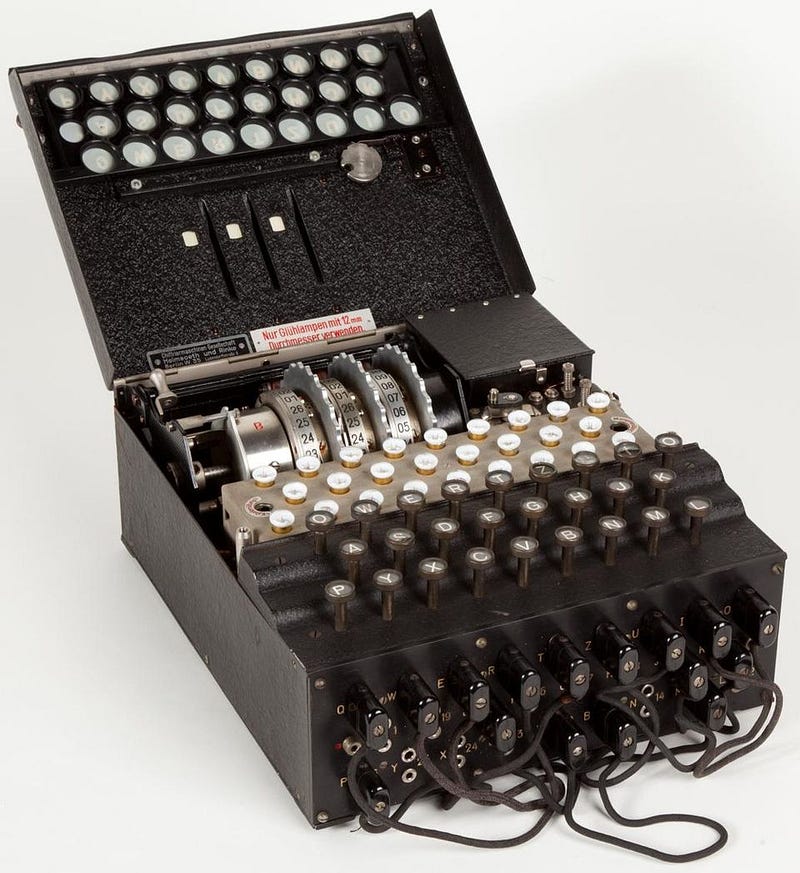

4:04 PM: While Roger talks about World War II, think about this fact: we can only predict what’s going to happen, at a quantum level, statistically. What better tool is there than a machine that can simulate systems and various possible outcomes over and over to estimate likelihoods and other potential outcomes? And imagine, of course, what “estimation” techniques (that humans are bad at) we can suddenly become good at!
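To make the "simulate it over and over" idea concrete, here is a minimal sketch in Python. It is not from the talk: the two-outcome measurement, the value `p_true = 0.3`, and the function name `estimate_probability` are all assumptions chosen purely for illustration. The point is only that the estimate tightens as the number of simulated trials grows.

```python
import random

def estimate_probability(p_up: float, shots: int, seed: int = 0) -> float:
    """Estimate P(up) by repeating a simulated two-outcome measurement."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same numbers
    ups = sum(1 for _ in range(shots) if rng.random() < p_up)
    return ups / shots

# Hypothetical state: amplitude sqrt(0.3) for "up", so the Born rule gives P(up) = 0.3.
p_true = 0.3

for shots in (100, 10_000, 100_000):
    print(shots, estimate_probability(p_true, shots))
```

The statistical error shrinks roughly as one over the square root of the number of trials, which is exactly the kind of grind a machine is happy to do and a human is not.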

4:07 PM: He makes exactly this point! He does it using cryptography, which (as we know) machines are already way better at than the smartest humans. We got there generations ago!

4:09 PM: This is the “ENIGMA” machine, which encrypted a message using one of an enormous number of possible settings, and which humans really couldn’t break. Without the codebook to tell you how this machine was set on a particular day, you can’t decode it. But an intelligent enough machine, rather than “guessing” the settings, could help you determine the answer!

4:11 PM: Roger says there are 10²⁰ possibilities for how the ENIGMA machine could be set… which equals approximately the number of grains of sand in all the beaches and oceans on Earth. This, 77 years ago, was the “complexity frontier.” And the person who worked to break it is a name you know: Alan Turing.
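Where might a number like 10²⁰ come from? Roger didn’t break it down, so the following is a sketch under my own assumptions: the commonly cited configuration of three rotors chosen from a set of five, 26 starting positions per rotor, and ten plugboard cables, with ring settings ignored. The names `plugboard_pairings`, `rotor_orders`, and `rotor_positions` are just labels for this example.

```python
from math import factorial, perm

def plugboard_pairings(letters: int = 26, cables: int = 10) -> int:
    """Number of ways to connect `cables` disjoint letter pairs on the plugboard."""
    unpaired = letters - 2 * cables
    return factorial(letters) // (
        factorial(unpaired) * factorial(cables) * 2 ** cables
    )

# Assumption: 3 rotors picked in order from 5, each with 26 start positions.
rotor_orders = perm(5, 3)
rotor_positions = 26 ** 3

total = rotor_orders * rotor_positions * plugboard_pairings()
print(f"{total:,} (about {total:.2e})")
```

Under those assumptions the count lands at a bit more than 10²⁰, consistent with the order of magnitude quoted in the talk.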

4:13 PM: How did Alan Turing crack the ENIGMA machine? He built another machine that counted up all the settings and possibilities every day, and figured out how to break the code. When the code was broken, the Allies were able to listen in on whatever conversations were taking place (in German) on the U-boats on a daily basis. When the messages made sense, he knew the code was cracked.
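The "try the settings until the message makes sense" idea can be sketched with a toy. To be loud about the assumptions: this uses a simple Caesar shift with 26 possible keys, not ENIGMA, and it is not how Turing’s machine actually worked internally. The message, the guessed word ("crib") `WETTER`, and the helper names `shift_text` and `crack` are all made up for illustration.

```python
import string

ALPHABET = string.ascii_uppercase

def shift_text(text: str, key: int) -> str:
    """Caesar-shift uppercase letters by `key`; leave other characters alone."""
    k = key % 26
    shifted = ALPHABET[k:] + ALPHABET[:k]
    return text.translate(str.maketrans(ALPHABET, shifted))

def crack(ciphertext: str, crib: str):
    """Try every key and return the first whose decryption contains the crib."""
    for key in range(26):
        candidate = shift_text(ciphertext, -key)
        if crib in candidate:  # the message "makes sense"
            return key, candidate
    return None

# Illustrative message only; the key 7 stands in for the daily setting.
secret = shift_text("WETTERBERICHT FUER DIE NACHT", 7)
print(crack(secret, "WETTER"))
```

With 26 keys this is instant. With something like 10²⁰ settings, you need a purpose-built machine and every shortcut you can find.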

4:17 PM: Now Roger is giving us a tour of computer history: the ENIAC, Bell Labs and the transistor [for which John Bardeen won his first of two Nobel Prizes in physics; the other is for superconductivity and BCS (along with Cooper of “Cooper pairs” and Schrieffer of murdering a bunch of civilians fame/infamy)], and then on to the integrated circuit. Of course, Moore’s Law has brought us to exponentially more powerful machines today!

4:19 PM: He brings up Star Trek! Yes! This is a huge influencer: how can technology impact/improve all of our daily lives? Boy… good thing someone (hint-hint) you know may have written a book on this!

4:21 PM: This is a nice analogy: the thickness that your circuit is printed on, 10 nanometers, is the amount that your fingernails grow every second. Just shave ’em down and build a computer! (I wish!)

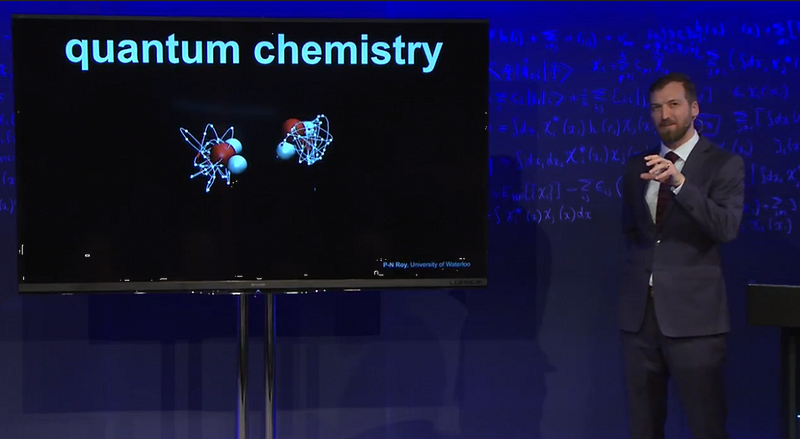

4:25 PM: Here’s a fun application: how a water (or any other) molecule evolves over time, in the presence of other molecules. This quantum chemistry problem is delightful, because it skirts the line between the quantum (microscopic) and classical (macroscopic) worlds, and yet you can get the actual, in-depth quantum effects to yield the old-school, classical behavior from the simulations themselves. That’s really exciting, by the way, to be able to do this computationally!

4:27 PM: There are 10⁸⁰ particles in the observable Universe, which is why he chose the number 2²⁶⁸. Of course… he isn’t counting photons or neutrinos, which would bring this up to about 10⁹⁰, or about 2²⁹⁸. Come on, Roger, just give us the extra particles!
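For anyone who wants to check the conversion between powers of ten and powers of two, here is a quick sketch. Nothing here comes from the talk itself; the helper names `power_of_two_exponent` and `power_of_ten_exponent` are my own, and the only fact used is that 10ⁿ = 2^(n·log₂10).

```python
from math import log2, log10

def power_of_two_exponent(power_of_ten: float) -> float:
    """Return x such that 2**x equals 10**power_of_ten."""
    return power_of_ten * log2(10)

def power_of_ten_exponent(power_of_two: float) -> float:
    """Return y such that 10**y equals 2**power_of_two."""
    return power_of_two * log10(2)

for n in (80, 90):
    print(f"10^{n} = 2^{power_of_two_exponent(n):.1f}")

for m in (268, 298):
    print(f"2^{m} = 10^{power_of_ten_exponent(m):.1f}")
```

The exponents agree with the quoted figures to within a few powers of two, which is as close as anyone can claim to know the particle count anyway.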

4:30 PM: He’s saying that only a human could write a poem, make an art piece, compose a painting. But check out what’s embedded above: it’s a sci-fi mini-movie that was entirely written by artificial intelligence. It’s nonsense, kind of, but it also is interesting in its own way… and it exists. How long before it’s writing better scripts than George Lucas? How long before it’s doing better than 1981-era George Lucas? I can’t wait to see how this unfolds!

4:33 PM: Okay, let’s come to modern “what can we do” now. We can recognize pictures of things, because we have large amounts of data and an algorithm to recognize that “this thing” is in “this picture.” This goes for trees, docks, pets, cookies, people, faces, etc. This is the field of computer vision, and, to be honest, deep learning algorithms are killing it.

4:37 PM: Artificial intelligence is a broad idea. Nested within it is machine learning, within that are neural networks, and “deep learning” is the most advanced layer of all. Artificial neural networks are basically like a primitive brain that learns based on experience.
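What does "learns based on experience" look like in practice? Here is a minimal sketch of a single artificial neuron (a perceptron), which is far simpler than the deep networks Roger is describing and is my own illustration rather than anything shown in the talk. The task (the logical AND of two inputs) and the names `train_perceptron` and `predict` are assumptions for the example. Nobody tells the neuron the rule; it only nudges its weights whenever it gets an example wrong.

```python
def predict(w, b, x1, x2):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else stay off (0)."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Classic perceptron rule: adjust weights only when a prediction is wrong."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(w, b, x1, x2)
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b

# "Experience": four labeled examples of logical AND.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

w, b = train_perceptron(AND)
predictions = [predict(w, b, x1, x2) for (x1, x2), _ in AND]
print(w, b, predictions)
```

Stack many of these neurons in layers, replace the four examples with millions of labeled images, and you are in the neighborhood of what "deep learning" means.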

4:39 PM: This is an old idea that I first heard of back in the 1980s. They built a six-legged robot in the shape of a cockroach, and didn’t teach it how to walk, but let it “figure it out” on its own, using this neural network technique. After a few hours (hey, it was the 1980s), it was walking the same way a terrestrial cockroach walks: front-and-back leg on one side, middle leg on the other side for one step; middle leg on one side, front-and-back leg on the other side for the next step, etc. 30+ years later, and we’ve scaled this up to identifying human faces in photographs.

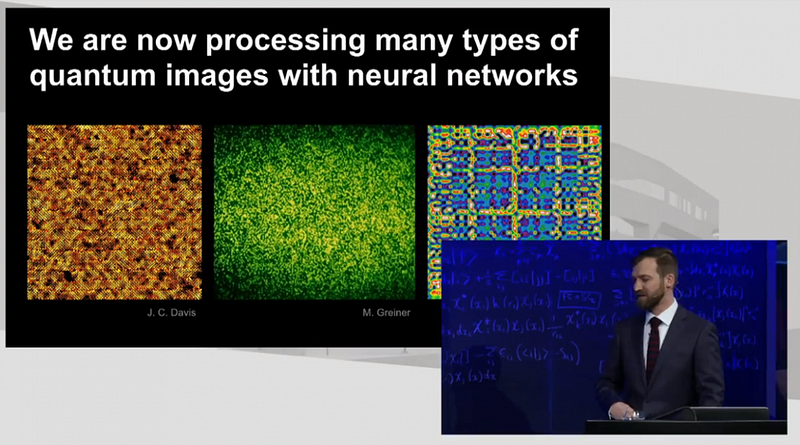

4:41 PM: He’s showing that you can apply artificial intelligence learning techniques to individual atoms (in both simulations and images, above). He won’t talk about that further, but I think the actual physics, which is what I was most excited about, is worth highlighting when it shows up in this talk!

4:44 PM: Artificial intelligence, of course, is only as good as what it’s trained on. There are some scary-looking images if you give an artificial intelligence “experience” in one realm and then send it to work/create in another realm. That’s where those weird AI-generated images you’ve seen floating around the internet come from. But if you train a neural network properly, it can “deep dream” (or create/hallucinate) a new structure that’s never existed before. The applications are fascinating, but are they real? We have to compare to reality to find out. But in a real way, this is ideation, or imagination, coming from a machine!

4:47 PM: He’s bringing up an incredible point: AI has the potential to create a dystopia for us. Getting a fine because AI has recognized your face while you’re jaywalking is certainly doable, but is it ethical? And do we care? We worry about a Terminator-esque future, but will the machines be the villains we fear so much today? Or will it be the same villain humans have always faced: other humans?

4:50 PM: Smart lenses are real, courtesy of the company “Verily.” At last, you can have the augmented reality of Google Glass without looking like someone who’s wearing a Google Glass device. Umm… yay?

4:52 PM: I have to say I’m a little bummed out. When I was looking forward to this talk, it was promised to me that Roger, whose research is focused on AI-based breakthroughs in fundamental physics and in new states of quantum matter, would be talking about applications to fundamental physics problems and systems. But what we’re getting is a tour of futuristic tech that’s becoming a reality. Unfortunately, that’s not what I would call the “complexity frontier” at all.

4:55 PM: Of course, when you combine quantum computers with artificial intelligence, the next steps are something that perhaps neither a human nor a machine will be able to predict. And with that, Roger’s talk comes to an end!

4:57 PM: Q&A time. And the first one is MINE! Can an AI derive force laws? Schrodinger’s equation? The Standard Model?

Roger says that Kepler did this with Brahe’s data, leading to Newton, etc. The Balmer series led to atomic/quantum physics. This is pattern-matching. Now, we have a suite of algorithms that are as good as or better than humans at pattern-matching. But as far as equations or laws? He was suspiciously silent on that front, which means “not yet,” if we translate the traditional physics-waffle into plain English.
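Here is what that kind of pattern-matching looks like at its simplest: a sketch that recovers the exponent in Kepler’s third law from a table of orbits. The assumptions are mine, not Roger’s. The numbers are modern rounded values rather than Brahe’s actual observations, the method is an ordinary least-squares line through the logarithms, and `fit_power_law` is a name invented for this example.

```python
import math

# Modern rounded values (not Brahe's data): semi-major axis in AU, period in years.
PLANETS = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
    "Saturn": (9.537, 29.457),
}

def fit_power_law(pairs):
    """Least-squares fit of log(T) = slope * log(a) + intercept."""
    pairs = list(pairs)
    xs = [math.log(a) for a, _ in pairs]
    ys = [math.log(t) for _, t in pairs]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_power_law(PLANETS.values())
print(f"T is proportional to a^{slope:.3f}")
```

The fit finds an exponent very close to 3/2, which is Kepler’s third law. Notice what it doesn’t do: it was told to look for a power law, and it says nothing about why the exponent is 3/2. That gap between fitting a pattern and writing down a force law is exactly what my question was about.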

5:01 PM: And after a couple more questions about ethics and who’s using AI where in physics, that’s the end. Thanks for joining me and bearing with the live-blog, and hopefully you learned something and had a good time!

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.