Merging Neutron Stars Really Can Solve Cosmology’s Biggest Conundrum

With just a few more neutron star mergers, we’ll have the best constraints of all time.

How fast is the Universe expanding? Ever since the expanding Universe was first discovered nearly 100 years ago, it’s been one of the biggest questions plaguing cosmology. If you can measure how fast the Universe is expanding right now, as well as how the expansion rate is changing over time, you can figure out everything you’d want to know about the Universe as a whole. This includes questions like:

- What is the Universe made of?

- How long has it been since the hot Big Bang first took place?

- What is the Universe’s ultimate fate?

- Does General Relativity always govern the Universe, or do we need a different theory of gravity on large, cosmic scales?

We’ve learned a lot about our Universe over the years, but the answer to one enormous question remains in doubt. When we try to measure the expansion rate of the Universe, different methods yield different results. One set of observations is about 9% lower than the other set, and no one has been able to figure out why. As a completely independent test that isn’t subject to any of the biases of the other methods, merging neutron stars could measure the Hubble parameter as never before. The first results just came in, and they point toward exactly how we’ll reveal the ultimate answer.

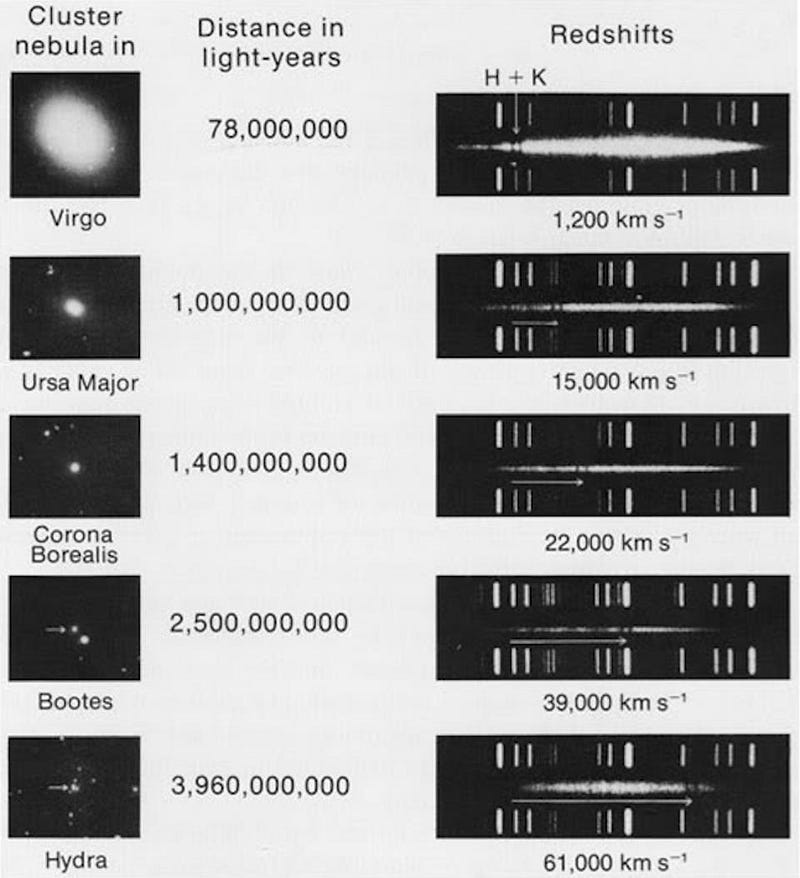

The story of measuring the Universe’s expansion goes all the way back to Edwin Hubble. Prior to the 1920s, when we saw spiral and elliptical “nebulae” in the sky, we didn’t know whether they existed within our galaxy or were distant galaxies all unto themselves. There were some clues that hinted one way or the other, but nothing was definitive. Some observers claimed that they saw these spirals rotating over time, indicating that they were close by, but others disputed those observations. Some saw that these objects had large velocities, too large for them to be gravitationally bound to our galaxy if they were located within it, but others disputed the interpretation of those redshift measurements.

It wasn’t until Hubble came along, with access to a new telescope that was the world’s largest and most powerful at the time, that we could definitively measure individual stars within these objects. Because we knew how those stars worked, those measurements revealed that these objects weren’t hundreds or thousands of light-years away, but millions. Spirals and ellipticals were their own galaxies after all, and the farther away they were from us, the faster they appeared to be receding.
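
Hubble’s finding is usually written as Hubble’s law, v = H₀ × d: recession speed grows in proportion to distance. As a minimal sketch (the galaxy distances and speeds below are hypothetical numbers invented for illustration, not real measurements), the slope H₀ can be estimated with a least-squares fit of a line through the origin:

```python
# Minimal sketch: estimate H0 from Hubble's law, v = H0 * d.
# The data points in the demo are HYPOTHETICAL, chosen only to illustrate the fit.


def fit_hubble_constant(distances_mpc, velocities_km_s):
    """Least-squares slope of a line through the origin: H0 = sum(v*d) / sum(d*d)."""
    if len(distances_mpc) != len(velocities_km_s) or not distances_mpc:
        raise ValueError("need equal-length, non-empty inputs")
    num = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    den = sum(d * d for d in distances_mpc)
    return num / den  # units: km/s per Mpc


if __name__ == "__main__":
    # Hypothetical galaxies: distance in megaparsecs, recession speed in km/s
    distances = [10.0, 25.0, 40.0, 80.0, 120.0]
    velocities = [720.0, 1790.0, 2950.0, 5800.0, 8700.0]
    h0 = fit_hubble_constant(distances, velocities)
    print(f"H0 ≈ {h0:.1f} km/s/Mpc")
```

Forcing the fit through the origin encodes the assumption that a galaxy at zero distance has zero recession speed; real analyses also model each galaxy’s own peculiar motion and the measurement errors.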

In short order, astrophysicists put the entire picture together. Einstein’s original vision of a static Universe was impossible in a Universe filled with matter; it needed to be either expanding or contracting. The more distant a galaxy was observed to be, on average, the faster it appeared to be moving away from us, following a simple mathematical relationship. And as we measured the expansion rate ever more precisely, it appeared to change over time, because the density of matter and other forms of energy, which itself changes as the Universe expands, determines what the expansion rate must be.
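
The link between the Universe’s contents and its expansion rate comes from the Friedmann equation. A minimal sketch for a spatially flat Universe, assuming illustrative density fractions (about 31% matter, a trace of radiation, and the rest a cosmological constant; these are assumed values, not ones taken from this article), shows how each component dilutes at a different rate as the Universe expands, so the expansion rate H changes with redshift z:

```python
import math


def hubble_rate(z, h0=67.0, omega_m=0.31, omega_r=9e-5):
    """Expansion rate H(z) in km/s/Mpc for a spatially flat universe (sketch).

    Matter density scales as (1+z)^3, radiation as (1+z)^4, and a
    cosmological constant does not dilute at all. Its share is set so the
    fractions sum to 1 today (the flatness assumption).
    """
    omega_lambda = 1.0 - omega_m - omega_r
    scale = 1.0 + z  # equals 1/a, where a is the cosmic scale factor
    e_squared = omega_r * scale**4 + omega_m * scale**3 + omega_lambda
    return h0 * math.sqrt(e_squared)


if __name__ == "__main__":
    for z in (0.0, 0.5, 1.0, 2.0):
        print(f"z = {z}: H = {hubble_rate(z):.1f} km/s/Mpc")
```

Because matter thins out as the Universe grows while a cosmological constant does not, H was larger in the past and levels off at late times in this model.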

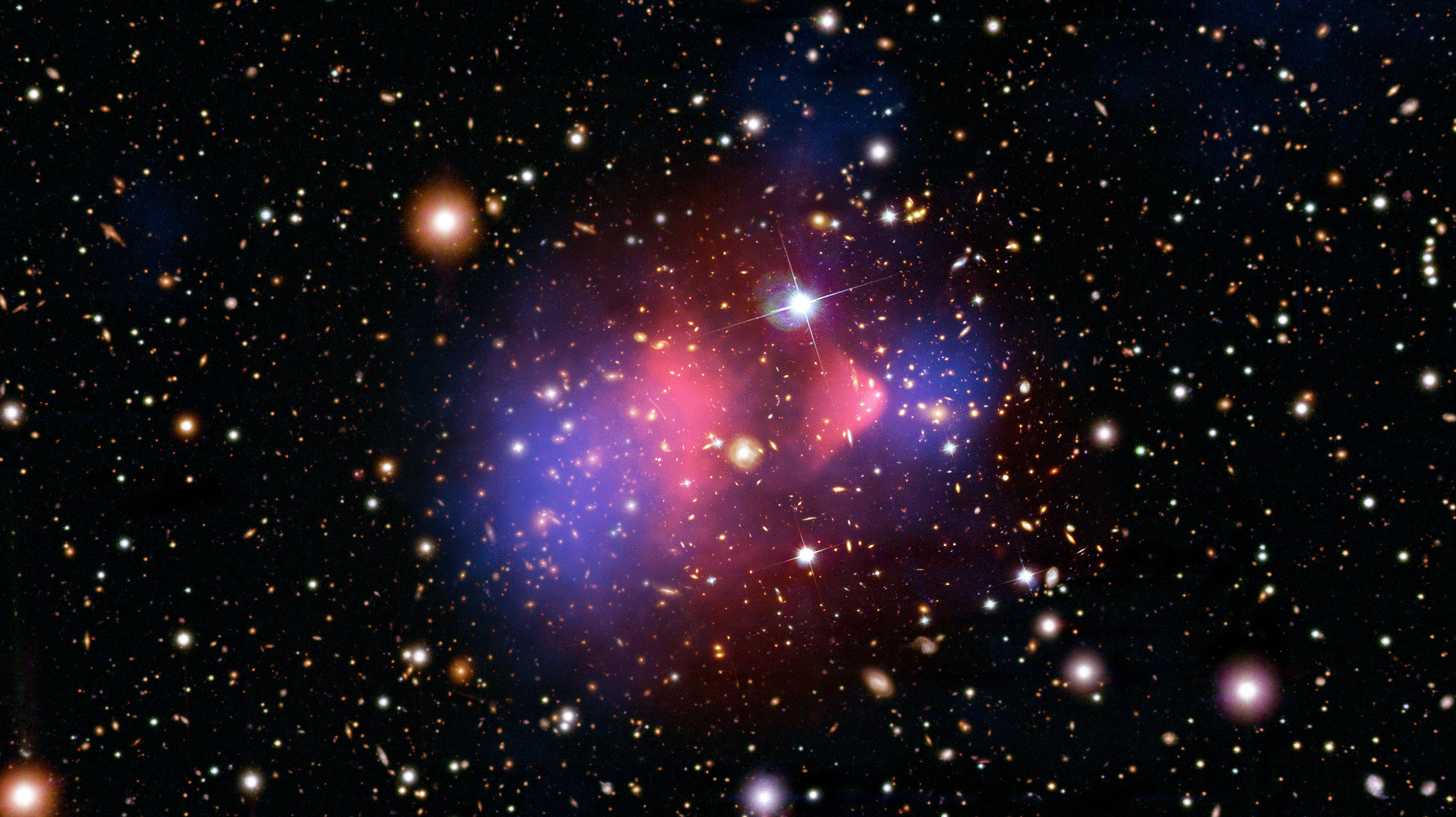

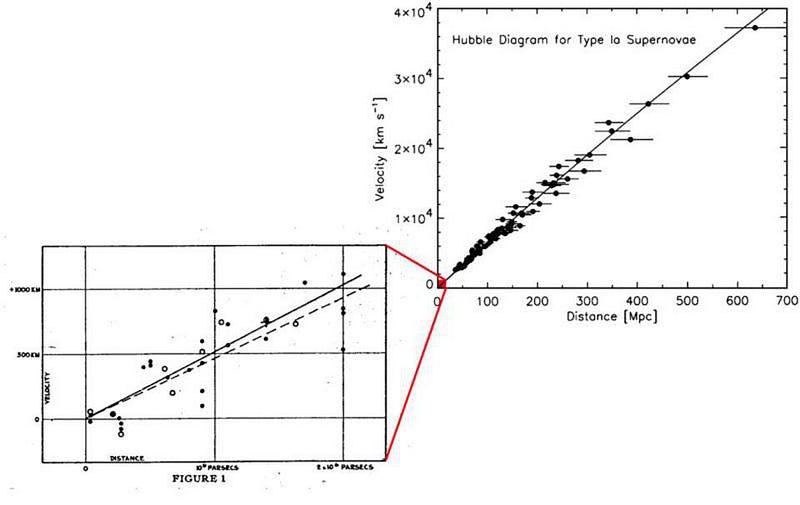

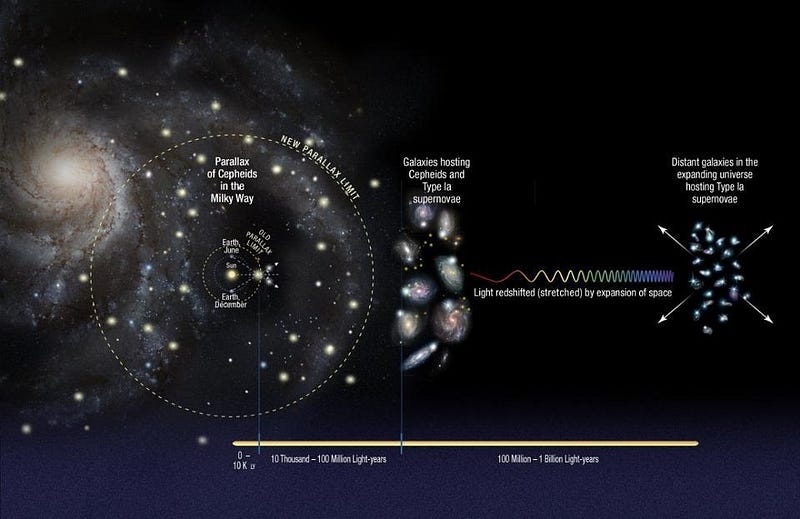

Today, we have two fundamentally different classes of ways to measure how the Universe expands. One builds on Hubble’s original method: start by measuring easily understood, nearby objects, then observe that same type of object farther away, determining its distance and apparent recession speed. The effects of the expansion of the Universe will imprint themselves on that light, allowing us to infer the expansion rate. The other is completely different: start with the physics of the early Universe and a specific signal that was imprinted at very early times. Measure how the expansion of the Universe has affected that signal, and you infer the expansion rate of the Universe.

The first method, generically, is known as the cosmic distance ladder. There are many independent ways to make cosmic distance ladder measurements, as you can measure many different types of stars and galaxies, and many different properties that they have, and construct your distance ladder out of them. Every independent method that leverages the cosmic distance ladder, from gravitational lenses to supernovae to variable stars to galaxies with fluctuating surface brightnesses and more, yields the same class of result: an expansion rate of ~73–74 km/s/Mpc, with an uncertainty of only about 2%.
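
The logic of a distance ladder can be sketched in a few lines. This is a simplified illustration, not any team’s actual pipeline: it assumes a hypothetical class of standard candles that all share one absolute magnitude, calibrates that magnitude on nearby objects whose distances are already known (the lower rung), then uses it to turn the apparent magnitudes of distant objects into distances and, with their recession speeds, into H₀ (the upper rung). The relation used throughout is the distance modulus, m − M = 5 log₁₀(d / 10 pc).

```python
import math

PC_PER_MPC = 1.0e6


def absolute_magnitude(apparent_mag, distance_pc):
    """Distance modulus rearranged: M = m - 5*log10(d / 10 pc)."""
    return apparent_mag - 5.0 * math.log10(distance_pc / 10.0)


def distance_pc(apparent_mag, absolute_mag):
    """Distance modulus inverted: d = 10**((m - M + 5) / 5) parsecs."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


def calibrate(anchor_mags, anchor_distances_pc):
    """Lower rung: mean absolute magnitude of nearby candles with known distances."""
    values = [absolute_magnitude(m, d) for m, d in zip(anchor_mags, anchor_distances_pc)]
    return sum(values) / len(values)


def hubble_constant(far_mags, far_velocities_km_s, absolute_mag):
    """Upper rung: candle distances (Mpc) plus recession speeds, fit v = H0 * d through the origin."""
    d_mpc = [distance_pc(m, absolute_mag) / PC_PER_MPC for m in far_mags]
    num = sum(d * v for d, v in zip(d_mpc, far_velocities_km_s))
    den = sum(d * d for d in d_mpc)
    return num / den


if __name__ == "__main__":
    true_m_abs = -19.3  # hypothetical shared absolute magnitude
    anchors_pc = [1.0e7, 2.0e7]  # hypothetical calibrators at 10 and 20 Mpc
    anchor_mags = [true_m_abs + 5.0 * math.log10(d / 10.0) for d in anchors_pc]
    m_abs = calibrate(anchor_mags, anchors_pc)

    far_mpc = [100.0, 200.0, 300.0]
    far_mags = [true_m_abs + 5.0 * math.log10(d * PC_PER_MPC / 10.0) for d in far_mpc]
    far_v = [73.0 * d for d in far_mpc]  # synthetic speeds built with H0 = 73
    print(f"H0 ≈ {hubble_constant(far_mags, far_v, m_abs):.1f} km/s/Mpc")
```

Any error in the lower rung’s calibration propagates straight into every distance above it, which is why each rung must be checked independently.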

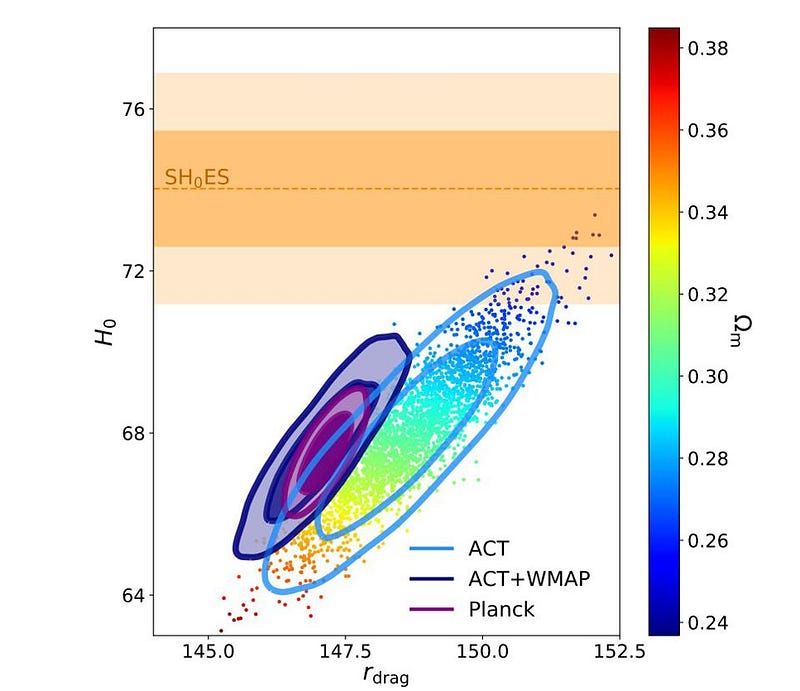

The second method, although it doesn’t have a universal name like the first one, is often thought of as the “early relic” method, since an imprint from the early Universe shows up at specific, measurable scales at various epochs. It shows up in the fluctuations in the cosmic microwave background; it shows up in the patterns by which galaxies cluster; it shows up in the changing apparent angular diameter of objects at various distances. When we apply these methods, they also agree with one another, but on a result that differs from the first method’s: an expansion rate of ~67 km/s/Mpc, with an uncertainty of only 1%.
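
The “early relic” idea can be illustrated with a standard ruler: if early-Universe physics fixes a feature’s true (comoving) size, its apparent angle on the sky depends on the distance to it, and that distance depends on H₀. In this sketch, the ruler length of about 147 Mpc (close to the commonly quoted sound-horizon scale), the redshift, and the matter fraction are assumed inputs; radiation is neglected and space is taken to be flat. Real analyses fit all the cosmological parameters jointly.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def comoving_distance_mpc(z, h0, omega_m=0.31, steps=10_000):
    """Comoving distance D = c * integral_0^z dz' / H(z') in a flat matter + Lambda
    universe (radiation ignored), evaluated with the trapezoid rule."""
    omega_lambda = 1.0 - omega_m

    def inv_h(zp):
        return 1.0 / (h0 * math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_lambda))

    dz = z / steps
    total = 0.5 * (inv_h(0.0) + inv_h(z))
    for i in range(1, steps):
        total += inv_h(i * dz)
    return C_KM_S * total * dz


def ruler_angle_rad(ruler_mpc, z, h0, omega_m=0.31):
    """Small-angle size of a fixed comoving ruler at redshift z (flat space): theta = r / D."""
    return ruler_mpc / comoving_distance_mpc(z, h0, omega_m)


if __name__ == "__main__":
    for h0 in (67.0, 73.0):
        theta_deg = math.degrees(ruler_angle_rad(147.0, 0.5, h0))
        print(f"H0 = {h0}: ruler at z = 0.5 spans {theta_deg:.2f} degrees")
```

At a fixed matter fraction the angle scales in direct proportion to H₀, which is why measuring the angle of a ruler of known size constrains the expansion rate.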

If you take the first method, it’s possible that the actual expansion rate might be as low as 72 or even 71 km/s/Mpc, but it really can’t be lower without running into problems. Similarly, with the second method, it really can’t be higher than about 68 or 69 km/s/Mpc without problems. Either something is fundamentally wrong with one of these sets of methods, something is wrong with an assumption going into one set of methods (but it isn’t clear what), or something fundamentally new is going on with the Universe compared to what we expect.
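
How incompatible are the two results? A common rough gauge, assuming independent Gaussian errors, is the difference divided by the combined uncertainty. Using approximate values from the text (our assumed midpoint of 73.5 km/s/Mpc with a 2% error for the distance ladder, and 67 km/s/Mpc with a 1% error for the early relics), this sketch gives a gap of roughly four standard deviations:

```python
import math


def tension_sigma(value_a, err_a, value_b, err_b):
    """Gap between two measurements in units of their combined 1-sigma error.

    Assumes the two errors are independent and Gaussian.
    """
    return abs(value_a - value_b) / math.sqrt(err_a**2 + err_b**2)


ladder, ladder_err = 73.5, 0.02 * 73.5  # km/s/Mpc, ~2% uncertainty (midpoint assumed)
relic, relic_err = 67.0, 0.01 * 67.0    # km/s/Mpc, ~1% uncertainty
print(f"{tension_sigma(ladder, ladder_err, relic, relic_err):.1f} sigma")  # prints "4.0 sigma"
```

Shifting the ladder value down to 72 or 71, or the relic value up to 68 or 69, shrinks the gap, which is exactly the wiggle room discussed above.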

What we keep hoping will happen is that there will be a completely new, independent way to measure the expansion rate that doesn’t have any of the potential pitfalls or errors or uncertainties that the other methods do. It would be revolutionary even if, for example, there were a “distance ladder” method that gave a low result, or if there were an “early relic” method that gave an anomalously high result. This puzzle, of why two different classes of methods yield two different results that are inconsistent with one another, is often called cosmology’s biggest conundrum today.

One of the places people are looking to potentially resolve this is an entirely different set of measurements: gravitational wave astronomy. When two objects locked in a gravitational death spiral radiate enough energy away, they can collide and merge, sending a colossal amount of energy through spacetime in the form of ripples: gravitational radiation. After traveling hundreds of millions or even billions of light-years, those ripples arrive at detectors like LIGO and Virgo. If they have a large-enough amplitude and a frequency in just the right range, they’ll move the detectors’ carefully calibrated mirrors by a tiny but periodic, regular amount.

The very first gravitational wave signal was only detected five years ago: in September of 2015. Flash forward to the present, where LIGO has been upgraded multiple times and joined by the Virgo detector, and we now have upwards of 60 gravitational wave events. A few of them — including an event in 2017 known as GW170817 and one in 2019 named GW190425 — were extremely close by and low in mass, cosmically speaking. Instead of merging black holes, these events were neutron star mergers.

The first one, in 2017, produced a light signal as a counterpart: gamma rays, X-rays, and lower-energy afterglows across the electromagnetic spectrum. The second one, however, produced no light at all, despite many follow-up observations.

The reason? For the first merger, the masses of the initial two neutron stars were relatively low, and the post-merger object they produced was initially a neutron star. Spinning rapidly, it collapsed into a black hole, forming an event horizon, in less than a second, but that was enough time for light and matter to get out in copious amounts, producing a special type of explosion known as a kilonova.

The second merger, however, involved more massive neutron stars. Instead of merging to produce a new neutron star, it formed a black hole immediately, hiding behind an event horizon all of the matter and light that otherwise would have escaped. With nothing getting out, we have only the gravitational wave signal to teach us what happened.

However, we’ve also recently observed neutron stars with unprecedented accuracy, thanks to NASA’s NICER mission aboard the International Space Station. In addition to revealing features such as flares, hot spots, and how a neutron star’s rotational axis differs from its “pulse” axis, NICER helped us to measure the radii of these neutron stars. With the knowledge that these neutron stars are somewhere between about 11 and 12 kilometers in radius, with mass-dependent constraints, a team of scientists led by Tim Dietrich just published a paper where they not only determined the radii of the neutron stars in these two merger events, but used that information to infer the expansion rate of the Universe.

Using neutron star mergers, because they involve gravitational waves, is a little different from the other cosmic measurements we make. The light arriving from these mergers helps us determine a distance in a similar fashion to how we’d do it for any other indicator: you measure the apparent brightness, you assume the intrinsic brightness, and that teaches you how far away it is. But it also involves using the gravitational wave signals: a standard “siren,” if you will, because of its wave properties, rather than a standard “candle” like we use for measuring light. The shape of the wave (how its frequency and amplitude evolve over time) reveals how intrinsically “loud” the merger was, so its observed strength tells us the distance, while the light pinpoints the host galaxy, whose redshift tells us how fast it’s receding.
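
A leading-order sketch of the “siren” logic follows. It uses the textbook quadrupole (Newtonian-order) formulas for an optimally oriented binary: the rate at which the gravitational wave frequency sweeps upward fixes the chirp mass, the chirp mass fixes how strong the waves are at the source, and comparing that with the measured strain gives the distance. Real analyses fit full waveforms and must handle the orbit’s unknown inclination, the detector response, and redshift corrections, all of which this sketch ignores; the example signal (a 1.2 solar-mass chirp mass at 40 Mpc) and the recession speed are hypothetical inputs.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # solar mass, kg
MPC_M = 3.086e22   # one megaparsec in metres


def fdot(f_hz, chirp_mass_kg):
    """Forward model: df/dt = (96/5) pi^(8/3) (G Mc / c^3)^(5/3) f^(11/3)."""
    return (96 / 5) * math.pi ** (8 / 3) * (G * chirp_mass_kg / C**3) ** (5 / 3) * f_hz ** (11 / 3)


def strain(distance_m, f_hz, chirp_mass_kg):
    """Forward model, optimal orientation: h = (4/d) (G Mc / c^2)^(5/3) (pi f / c)^(2/3)."""
    return (4 / distance_m) * (G * chirp_mass_kg / C**2) ** (5 / 3) * (math.pi * f_hz / C) ** (2 / 3)


def chirp_mass_from_sweep(f_hz, fdot_hz_per_s):
    """Invert the frequency sweep to recover the chirp mass (kg)."""
    x = (5 / 96) * math.pi ** (-8 / 3) * f_hz ** (-11 / 3) * fdot_hz_per_s
    return (C**3 / G) * x ** (3 / 5)


def distance_from_strain(h, f_hz, chirp_mass_kg):
    """Invert the strain amplitude to recover the distance (m)."""
    return (4 / h) * (G * chirp_mass_kg / C**2) ** (5 / 3) * (math.pi * f_hz / C) ** (2 / 3)


if __name__ == "__main__":
    f = 100.0                # Hz, a frequency in the detectors' sensitive band
    mc_true = 1.2 * M_SUN    # hypothetical chirp mass
    d_true = 40.0 * MPC_M    # hypothetical distance
    h_obs, fdot_obs = strain(d_true, f, mc_true), fdot(f, mc_true)

    mc = chirp_mass_from_sweep(f, fdot_obs)            # from the "chirp" alone
    d_mpc = distance_from_strain(h_obs, f, mc) / MPC_M  # from the amplitude
    v_km_s = 2900.0          # hypothetical host-galaxy recession speed
    print(f"Mc = {mc / M_SUN:.2f} Msun, d = {d_mpc:.1f} Mpc, H0 = {v_km_s / d_mpc:.1f} km/s/Mpc")
```

The distance comes from the gravitational waves alone; the light’s role in this picture is to identify the host galaxy and supply the recession speed.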

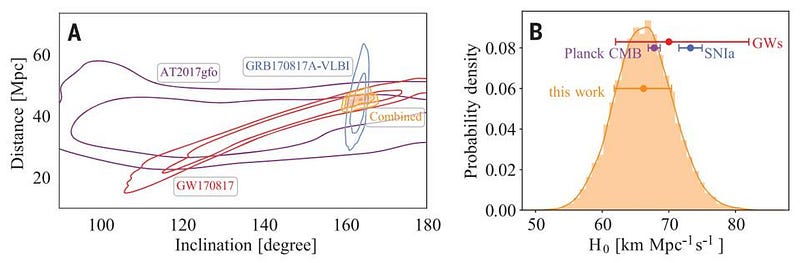

When the data are combined, even a single usable neutron star merger with both a gravitational wave signal and an electromagnetic signal yields remarkable constraints on how fast the Universe is expanding. The second neutron star merger, because of its higher masses, helps constrain the size of a neutron star as a function of mass, allowing the team to estimate that a neutron star with 140% the mass of the Sun is about 11.75 km in radius, with just a ~7% uncertainty. Similarly, they infer a value for the expansion rate of the Universe: 66.2 km/s/Mpc, with an uncertainty also of about 7%.

Three things about this estimate are remarkable.

- Through just one multi-messenger event, where we observe light signals and gravitational wave signals from the same astrophysical process of a merging neutron star pair, we could constrain the Hubble constant to just ~7%.

- This event relies on an entirely new method and, because it originates from the late-time Universe, would be expected to agree with the “distance ladder” estimate. Yet it prefers the “early relic” value, although it’s still consistent with the standard “distance ladder” value.

- With just nine more neutron star mergers, we’ll be able to measure the expansion rate to within 2% using this method alone. With a total of ~40 mergers, we could get the rate to 1% precision.
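
The forecast in the last point roughly follows the usual rule for combining independent measurements: if every event were equally informative, the combined fractional uncertainty would shrink as 1/√N. This simple sketch assumes exactly that (real events differ in distance, orientation, and counterpart quality, so actual forecasts come from more detailed modeling), and it lands close to the quoted figures, at about 2.2% for ten events and 1.1% for forty:

```python
import math


def combined_fractional_error(single_event_error, n_events):
    """Fractional H0 uncertainty from n independent, equally informative events."""
    if n_events < 1:
        raise ValueError("need at least one event")
    return single_event_error / math.sqrt(n_events)


for n in (1, 10, 40):
    print(f"{n:>2} events: ~{100 * combined_fractional_error(0.07, n):.1f}%")
```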

What’s perhaps most important about all of this is what we learn when we look ahead to the future. In many ways, we got very lucky in 2017 when a neutron star merger occurred so close to us, and luckier still when it produced light signals and a short-lived neutron star before collapsing into a black hole. But as our gravitational wave detectors operate for longer periods of time, as we upgrade them to become more sensitive, and as they become able to probe objects like this over a larger volume of space, we’re bound to see more of them. When we do, we should be able to measure the expansion rate of the Universe as never before.

Regardless of what the results are, we’re going to learn something profound about the Universe. Over the past few years, we’ve learned more about the size and properties of neutron stars, and seeing them merge has empowered us to measure how fast the Universe is expanding through an entirely new method. Although this new measurement doesn’t resolve the tension that currently exists, it may point the way toward a solution, and it might do so, in short order, more precisely than any other method so far. For gravitational wave astronomy, a field that’s only been active in earnest for five years, it’s a remarkable advance, and even better measurements will almost certainly arrive over the coming years.

Starts With A Bang is written by Ethan Siegel, Ph.D., author of Beyond The Galaxy, and Treknology: The Science of Star Trek from Tricorders to Warp Drive.