Your Next Translator May Be a Robot

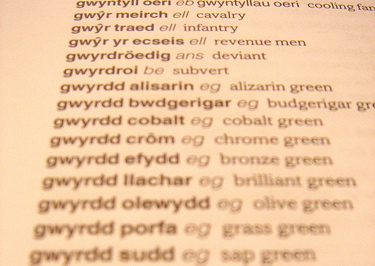

One outstanding task on the global conversation to-do list is figuring out how to communicate across languages on all our new media. Now a linguistic brain trust at MIT has stepped in to develop a real-time fix for our failure to understand one another.

The approach, pioneered by Pedro Torres-Carrasquillo of MIT’s Lincoln Laboratory, maps a speaker’s low-level acoustics, such as the intonation of vowel and consonant groupings. Torres-Carrasquillo found that focusing on these tiny parts of spoken language identifies a particular dialect much more accurately than analyzing phonemes, the distinct sound units that make up a language’s words.

Though a few years away, the real-world applications of the work are sweeping. In a surveillance context, low-level acoustic mapping could let a wiretapper narrow down a criminal’s location by dialect. For the everyday user, a phone system like Skype could identify a speaker’s language and regional dialect from a single utterance.

Goodbye, Caller ID.