Hawking, Musk Draft a Letter Warning of the Coming AI Arms Race

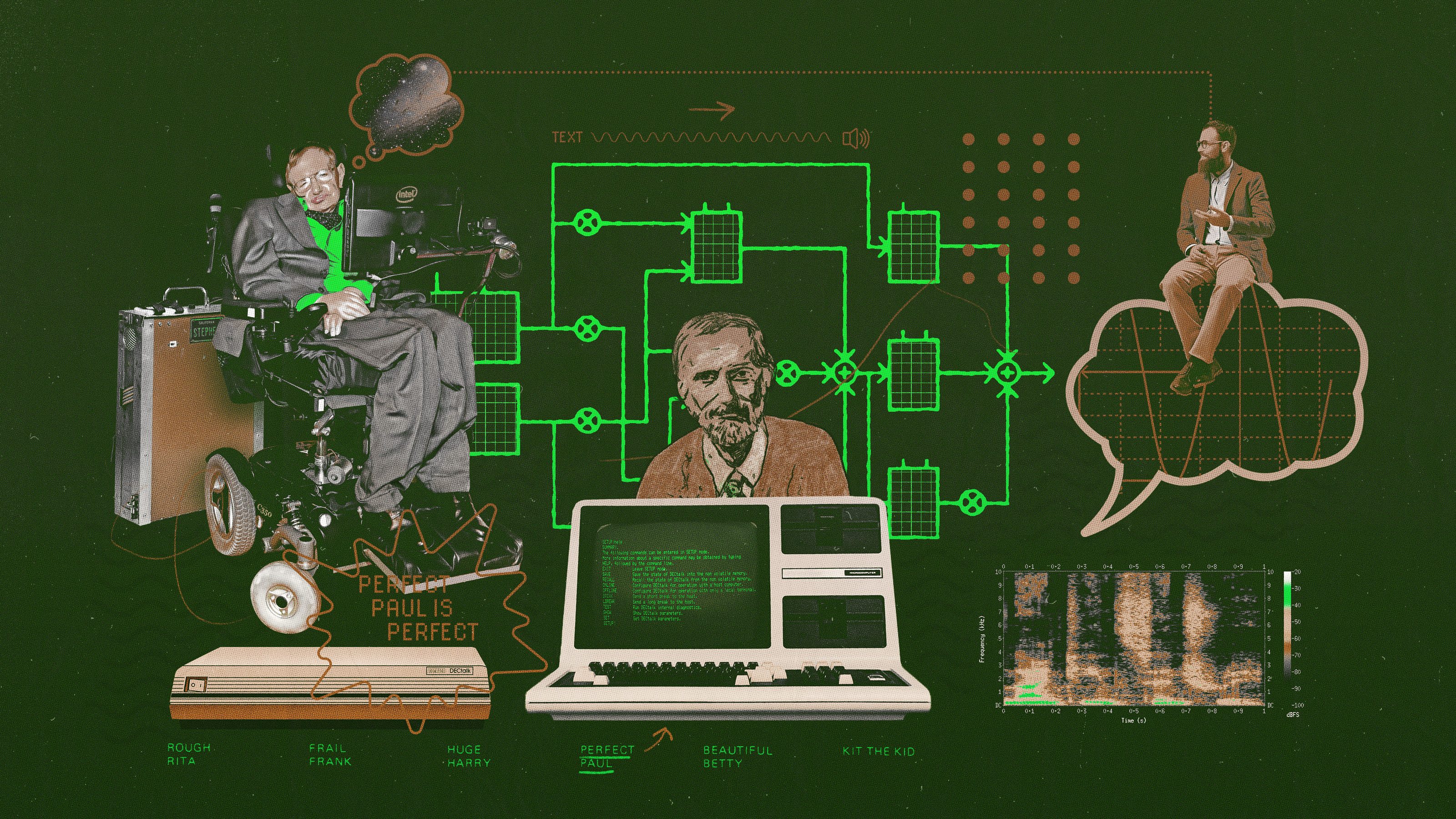

Stephen Hawking and Elon Musk have made their mistrust of artificial intelligence well known. The two, along with a group of equally concerned scientists and engineers, have released an open letter calling for the prevention of autonomous robotic armies and warning of disastrous consequences for humanity and for future advances in the field.

Their fears are well-founded, as they write:

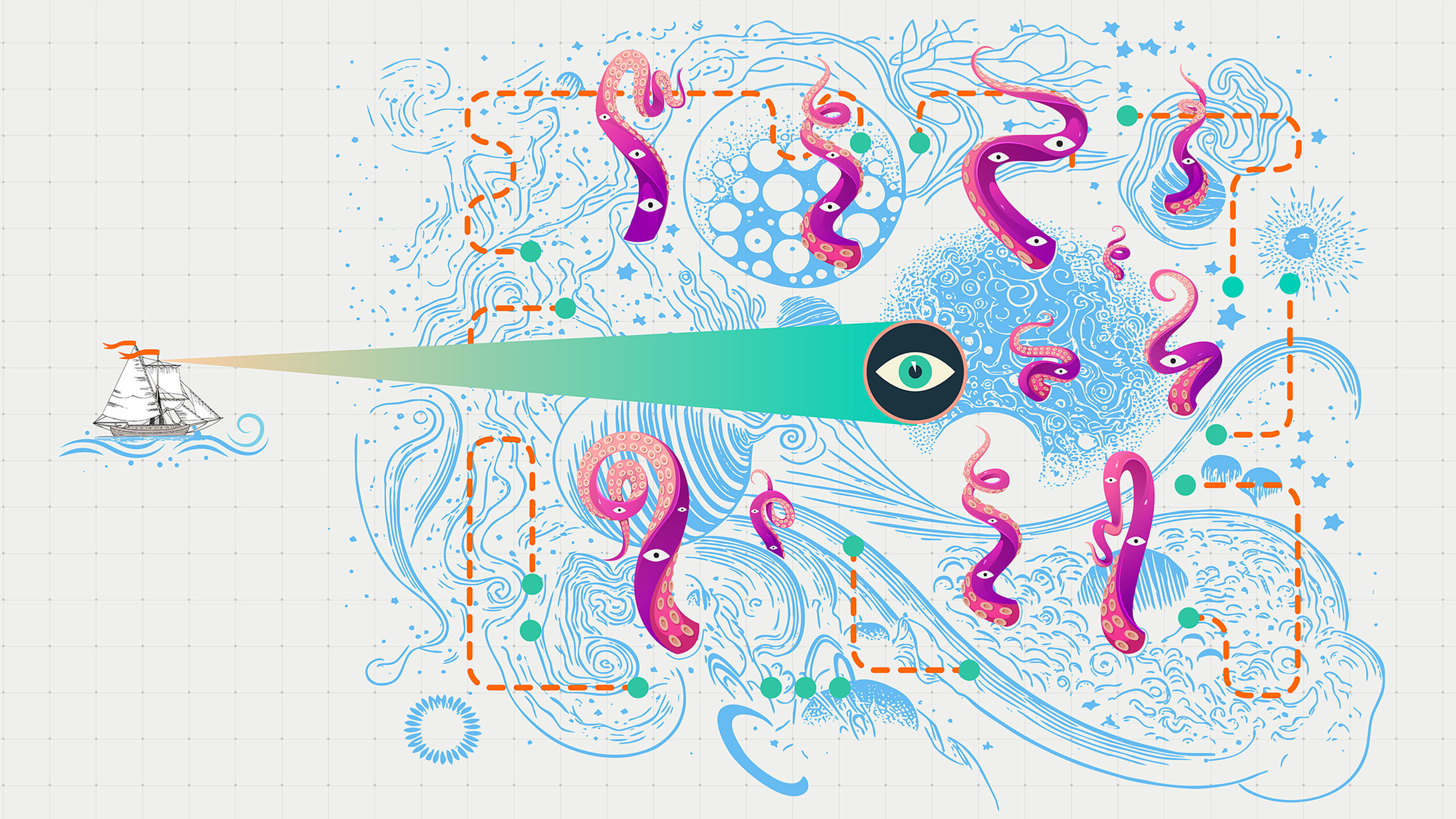

“If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: Autonomous weapons will become the Kalashnikovs of tomorrow.”

The letter likens AI weaponry to chemical and biological warfare, but with far more ethical concerns and consequences. Once nations start building AI weaponry, there will be an arms race, they write, and it will only be a matter of time until this technology gets into the “hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc.” Robots could be programmed to target only select groups of people, without mercy or conscience. It is for these reasons that they “believe that a military AI arms race would not be beneficial for humanity.”

Building such weapons would undermine the huge benefits AI could provide in the years to come, the authors write, and would only deepen humanity’s mistrust of the technology.

“In summary, we believe that AI has great potential to benefit humanity in many ways, and that the goal of the field should be to do so. Starting a military AI arms race is a bad idea, and should be prevented by a ban on offensive autonomous weapons beyond meaningful human control.”

Theoretical physicist Lawrence Krauss does not share their concerns, although he understands them. He believes AI as complex as what the letter describes is still a long way off. “I guess I find the opportunities to be far more exciting than the dangers,” he said. “The unknown is always dangerous, but ultimately machines and computational machines are improving our lives in many ways.”