Elon Musk – 2 Things Humans Need to Do to Have a “Good Future”

A fascinating conference on artificial intelligence was recently hosted by the Future of Life Institute, an organization aimed at promoting “optimistic visions of the future” while anticipating “existential risks” from artificial intelligence and other directions.

The conference “Superintelligence: Science or Fiction?” featured a panel of Elon Musk from Tesla Motors and SpaceX, futurist Ray Kurzweil, Demis Hassabis of Google’s DeepMind, neuroscientist and author Sam Harris, philosopher Nick Bostrom, philosopher and cognitive scientist David Chalmers, Skype co-founder Jaan Tallinn, as well as computer scientists Stuart Russell and Bart Selman. The discussion was led by MIT cosmologist Max Tegmark.

The conference participants offered a number of prognostications and warnings about the coming superintelligence, an artificial intelligence that will far surpass the brightest human.

Most agreed that such an AI (or AGI, for Artificial General Intelligence) will come into existence. It is just a matter of when. The predictions ranged from days to years, with Elon Musk saying that one day an AI will reach “a threshold where it’s as smart as the smartest most inventive human,” which it will then surpass in a “matter of days,” becoming smarter than all of humanity.

Ray Kurzweil’s view is that however long it takes, AI will be here before we know it:

“Every time there is an advance in AI, we dismiss it as ‘oh, well that’s not really AI:’ chess, go, self-driving cars. An AI, as you know, is the field of things we haven’t done yet. That will continue when we actually reach AGI. There will be lots of controversy. By the time the controversy settles down, we will realize that it’s been around for a few years,” says Kurzweil [5:00].

Neuroscientist and author Sam Harris acknowledges that his perspective comes from outside the AI field, but sees that there are valid concerns about how to control AI. He thinks that people don’t really take the potential issues with AI seriously yet. Many think it’s something that is not going to affect them in their lifetime – what he calls the “illusion that the time horizon matters.”

“If you feel that this is 50 or a 100 years away that is totally consoling, but there is an implicit assumption there, the assumption is that you know how long it will take to build this safely. And that 50 or a 100 years is enough time,” he says [16:25].

On the other hand, Harris points out that what is at stake here is how much intelligence humans actually need. If we had more intelligence, would we not be able to solve more of our problems, like cancer? In fact, if AI could help us get rid of diseases, then humanity is currently suffering from the “pain of not having enough intelligence.”

Elon Musk’s view is that we should be aiming for the best possible future – the “good future,” as he calls it. He thinks we are headed either for “superintelligence or civilization ending” and it’s up to us to envision the world we want to live in.

“We have to figure out, what is a world that we would like to be in where there is this digital superintelligence?” says Musk [33:15].

He also brings up an interesting perspective: that we are already cyborgs because we utilize “machine extensions” of ourselves like phones and computers.

Musk expands on his vision of the future by saying it will require two things – “solving the machine-brain bandwidth constraint and democratization of AI”. If these are achieved, the future will be “good” according to the SpaceX and Tesla Motors magnate [51:30].

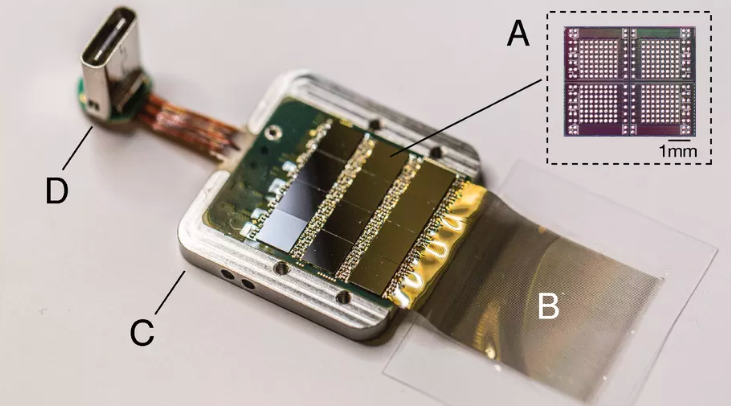

By the “bandwidth constraint,” he means that as we become more cyborg-like, in order for humans to achieve a true symbiosis with machines, they need a high-bandwidth neural interface to the cortex so that the “digital tertiary layer” would send and receive information quickly.

At the same time, it’s important for AI to be equally available to everyone; otherwise a smaller group with such powers could become “dictators”.

He brings up an illuminating quote about how he sees the future going:

“There was a great quote by Lord Acton which is that ‘freedom consists of the distribution of power and despotism in its concentration.’ And I think as long as we have – as long as AI powers, like anyone can get it if they want it, and we’ve got something faster than meat sticks to communicate with, then I think the future will be good,” says Musk [51:47].

You can see the whole great conversation here: