The invention behind the popular video game began with guinea pigs Yo-Yo Ma and Penn and Teller.

Question: Describe the genesis of hyperinstruments.

Tod Machover: So, in a funny way, hyperinstruments – I keep talking about the Beatles, and I think it’s maybe because Sergeant Pepper's came out when I was about 13 or 14 and that was a pretty impressionable age, and it was such a kind of radical period. But that period of the Beatles really had a big influence on me, and I think it is directly related to hyperinstruments.

So here an album like Sergeant Pepper's comes out, incredibly new sounds, layer upon layer of studio produced sound like nothing anybody had ever done before. And it’s also the period when the Beatles realized that what they were making in the studio could never be performed. And they had already given up on performing because there were too many screaming fans and they were playing in larger and larger venues so they couldn’t even hear what they were playing, it just wasn’t any fun any more. At the same time, their music evolved to something that they could only put together in a multi-track recording studio. So, I think so many things about that period in the late ‘60’s stuck with me. The sense of – I mean, I love the cello, I love the physical sense of an instrument that’s about the size of your body that vibrates enough that even if you play an open string, you feel it. You really feel it from your toes to your hair follicles and it really uses your – you have to put a fair amount of effort into the instrument. I think a double bass for me would be too much effort. But the cello, you’re really engaged and the sound is kind of right here. So, it feels like being merged, married to an instrument. I like that feeling and I like the idea of imagining a sound and feeling a sound and then having it come out through your body, through an instrument like that. That’s an important way to make music.

Then you have something like the Beatles making this great music where they say, “You know? We just give up. We’re never going to perform this. It’s not just that we’re not going to go on tour right now; this music has too many layers. It’s got too many changes in the sound. This voice all of a sudden gets prominent and then disappears, and this is its final version.” You also have in exactly the same years – I never thought of that, but 1967, Glenn Gould, the great pianist, wrote an article called The Future of Recording, and that was exactly the period when Glenn Gould said, “I’m never going to play in public anymore. First of all, I’m kind of shy and I don’t like – it just makes me uncomfortable to play in front of people. Plus, I want to get my performances exactly right. I imagine precisely the way these different lines in the Bach sonata need to be balanced and I understand exactly the relationship between this voice and that one. And yes, I can play it, but I want to go in and adjust these things in a recording studio and that’s my new medium. Recording is the future.”

That’s funny. So, Gould and the Beatles did that the same year. And I think what stuck with me at that point was, wow – I love this music and I totally get it. And here’s Gould doing it for Bach, and the Beatles doing it with their music. But they’re not performing any more. And I love performing. I think that one of the things about music is it’s supposed to be spontaneous, it’s supposed to be real human beings bouncing off of each other, whether it’s from the stage to the audience, or jamming with friends. I mean, I love the idea – it’s important as a composer to sit in silence and imagine these complex musical worlds in your head, but it’s also a wonderful experience to touch your music and to hear it and hear it in the room with you and to say, you can’t have an entire orchestra there, but you’d kind of like to have the orchestra there. You’d like musicians playing for you and you’d like to say, you know what? Duh. I need ten more French horns. Or that just doesn’t work right; I need some instrument that doesn’t exist. You want to go back and forth between the sound and touching it.

So, I think from that very early time, age 13, 14, 15, I thought, yes, this rich studio-produced music is the future, but it can’t be the future to go run away into the recording studio. How can we take that kind of complexity and richness and make it possible for people to touch it and play it live? That’s what hyperinstruments are.

I think the seed was planted when I was a teenager, and it took me until I got out of Juilliard. At Juilliard I was just learning to be a composer, but I was also learning how to manipulate computers. So, nobody at Juilliard, this was in the mid to late ‘70’s, nobody was interested in computers then. It was kind of too late for Moog synthesizers and there were a few computers around the world; Stanford University and MIT and Princeton or Columbia University were about the only ones where you could use punch cards, and you’d go in and type out your computer program to make notes appear, and this is what I learned how to do when I was at Juilliard. I found somebody at Columbia who taught me how to do this. I’d go to the Inner City Graduate Center here in Manhattan, type out the punch cards for maybe 30 seconds of music that I wanted to, let’s say, play for a string quartet, who looked at my music and thought it was completely crazy and unplayable. And I’d say, no, no, it sounds really good. I’m going to put it in – so, you made the punch cards. A week later, I’d go back and pick up a reel-to-reel tape and put it on the tape recorder. And of course you couldn’t edit it, you could see the notation, you’d have this ridiculous – but I learned that at Juilliard.

And then I went to Paris in 1978, to this institute, IRCAM, that had just opened up at the Pompidou Center, and for the first time, there was really money around and people around to think about really bringing musicians and scientists and technologists together. It was like – I was very lucky to be there at that time. It was really great. And they were just starting to invent computers that worked fast enough to give you music immediately. So, instead of waiting a week to get your music back, you still had to write software, there were no graphics, but you’d write some software, say okay, now. And it would play your piece and you might be able to do a carriage return and it would make it louder or softer. It was very primitive, but it was immediate. And that’s when I started thinking about the hyperinstruments. Wow! You could have the best of both worlds. You could have layer upon layer and delicate sound and voices and instruments and sounds nobody had ever heard. You could mix them all together, but I can put them in a laboratory – put them in a studio so that they will play back immediately and if I can touch a key and have something happen, I should be able to make my own instrument. I should be able to make something where I can squeeze something, or the way I touch something should be able to change the sound.

So, I started thinking about either leveraging off of existing instruments, or making new instruments that would take what people do well in mastering an instrument, but multiplying the effect that you could produce with that instrument. So, I didn’t actually build a hyperinstrument until I moved to MIT in 1985 because at that point personal computers had just come out so you could do all kinds of things with a $2,000 box. Anyway, there were a lot of things happening in the mid-‘80’s that all of a sudden made it possible to do a lot of very quick interactive music. And with the hyperinstrument, the idea was how to take the act of performing, how to measure what a performer was playing, but also how they were playing; what the interpretation is, and let that interpretation be the equivalent of five recording engineers in the Beatles digital recording studio, instead of a bunch of people moving knobs up and down and pushing buttons to change that effect, and saying, “Oh, let’s have that.” You simply do it by playing your instrument. And if you play the downbeat of a certain measure louder, or if I play this phrase building up the intensity to this section or if I lay off and it all becomes very calm. If I change – I’m showing as if I’m playing the cello, but the cello’s not a bad way of thinking about it because you can change all kinds of qualitative aspects of the sound. If I’m playing a note and it goes from [making singing sound]. If I change that kind of quality of sound, I started to measure those kinds of things so that that sound might mean, okay, if I make that transition, then I want to change the cello, it’s not a cello anymore, it’s a voice, or it’s something I’ve never heard before. Or if I use this part of the bow and play with certain accents, I’m going to play a melody in and depending on the way I play it’s going to add its own harmony.

So, the instrument – a single person playing an instrument could make the equivalent of a multi-track recording and by interpreting the music differently, in as natural a way as possible, I could shape the way this sounded. Not like being at a mixing desk in a recording studio, not like writing a computer program, not like working with the machine, but just like playing naturally. So, that’s what I wanted a hyperinstrument to be, especially for trained musicians.

Question: How did hyperinstruments lead to the creation of Guitar Hero?

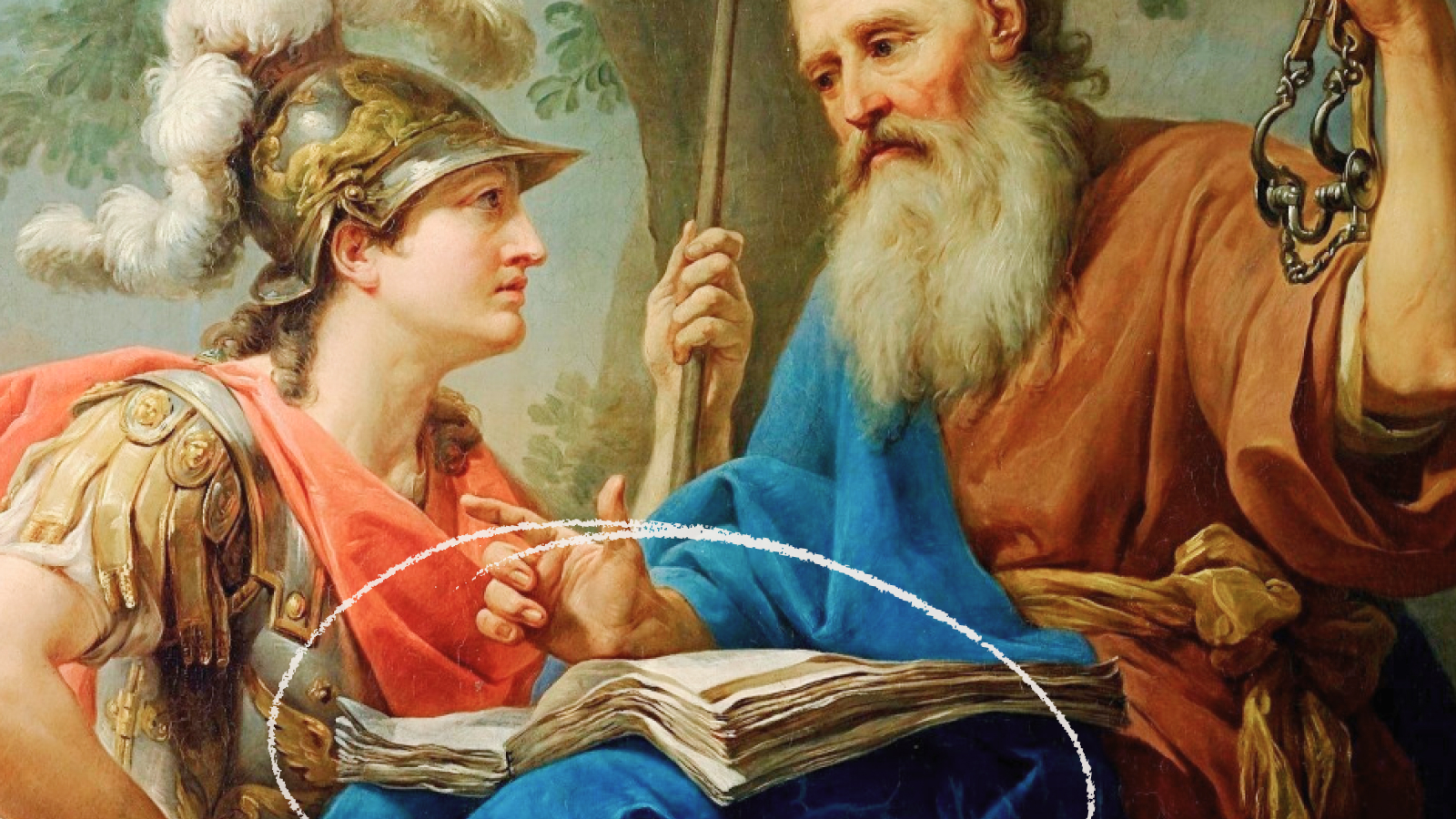

Tod Machover: So, one of the interesting things about inventing these instruments, and actually in some ways, the more unusual the instruments are that you think of, or the sounds you think of, the more radical the technology is that you’re trying to develop for music in particular, the more likely it is that something unexpected is going to come out of it that you didn’t expect at the beginning. So, as a perfect case, we built this hyper cello for Yo-Yo Ma and I wanted to keep it as close to a regular cello as possible, so it had a regular fingerboard, regular strings. Yo-Yo’s a really nice guy, but when I started talking about taping or nailing things onto his Stradivarius, he didn’t like that idea too much. So, we made a physical instrument from scratch so we could put measurement devices and sensors right in the instrument.

So, he played this cello, you plug it in, it can sound like a regular cello, but it’s also measuring everything that’s happening. Then we took a regular bow, because measuring the bowing for cello is like breathing for a singer. So, we have to measure everything about the horse hair on the string and the pressure and how fast it’s moving, and the angle. And we found out the best way to do that was to put two computer chips on either side of the bow, to send electricity through the chips into the air, to put a little antenna on the cello. The antenna picks up the electricity and it can tell, if you write software, which side of the bow the electricity is coming from and by doing that, you can tell where the bow is, how fast it’s moving, how much bow you’re using, which part of the bow you’re on, the angle. Everything you need to have a kind of language of the gesture of bowing.
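As a rough illustration of the idea described above, here is a minimal sketch in Python of how software might turn the two signals picked up by the antenna into a bow position and a bow speed. It is not the actual hyper cello software. The function names, the assumption that each end of the bow produces a separately measurable signal strength, and the simple ratio used to estimate position are all hypothetical simplifications.

```python
from typing import List, Sequence


def bow_position(frog_signal: float, tip_signal: float) -> float:
    """Estimate where along the bow the contact point is.

    Returns a value in [0, 1], where 0 means the frog (hand) end and
    1 means the tip. Modeling assumption: the end of the bow closer to
    the antenna contributes the stronger signal, so the tip's share of
    the total signal is used as the position estimate.
    """
    total = frog_signal + tip_signal
    if frog_signal < 0 or tip_signal < 0 or total == 0:
        raise ValueError("signal strengths must be non-negative and not both zero")
    return tip_signal / total


def bow_speed(positions: Sequence[float], dt: float) -> List[float]:
    """Estimate bow speed (bow lengths per second) from successive positions."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return [(b - a) / dt for a, b in zip(positions, positions[1:])]


# Hypothetical readings (frog_signal, tip_signal) taken every 0.5 seconds.
readings = [(3.0, 1.0), (1.0, 1.0), (1.0, 3.0)]
positions = [bow_position(frog, tip) for frog, tip in readings]
speeds = bow_speed(positions, dt=0.5)
print(positions)  # [0.25, 0.5, 0.75]
print(speeds)     # [0.5, 0.5]
```

A real system would also need calibration, noise filtering, and separate measurements for pressure and angle, none of which are modeled here.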

So we did that, it worked well. I wrote some pieces for Yo-Yo, he played them, and so that kind of hyper cello started taking off. But at the same time, we were rehearsing and we found that when Yo-Yo’s bow got close to the cello, when his hand got close to the cello, the measurement went all wacky. It wasn’t supposed to do that. So we went back to the lab and we found out that, sure enough, his body was absorbing electricity from this circuit, which was kind of a drag because it became unpredictable. So, first we figured out how you could modify it so you could predict it. But then I started thinking, oh gee, if this electric circuit is measuring how much electricity his hand is absorbing, it actually knows where – I could tell where his hand was. And if I could do that, I could actually throw away the cello and throw away the bow and just make an instrument that measures the way somebody moves their hands.

And so, a light bulb went off and I started thinking, my gosh, all this sophisticated software for measuring how Yo-Yo plays, and how he moves and this technique of the bow, I should be able to use similar techniques for measuring the way anybody moves, and so somebody who is not a professional or a trained musician, I should be able to make a musical environment for them. So, the first instrument we made after the hyper cello was a chair. We made something called the sensor chair, and we actually made this for the magicians Penn and Teller because they were following the work and we kind of had this idea.

So we took a chair and put a piece of metal on the chair. When you sit on the chair your rear end touches the metal and your body becomes part of this electric circuit just like the bow was. So, electricity is streaming out of your body, you put two poles in front of the chair and the poles have sensors in them with little lights. So when I move my arms, electricity is coming out of my arms and these sensors can tell how much electricity is in the air. So, again, if I write software it knows exactly where my hands are and also, if I’m smart enough I can write software that knows, am I moving in a jagged way? Am I moving smoothly? Am I moving continuously? Am I moving discontinuously? There are all kinds of qualities I can pick up.
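One simple way software could tell jagged movement from smooth movement is to look at how abruptly a stream of hand positions changes direction. The sketch below, in Python, is only an illustration of that idea and not the sensor chair's actual software. The function names, the use of second differences as a jaggedness measure, and the threshold value are all assumptions made for the example.

```python
from typing import Sequence


def jaggedness(samples: Sequence[float]) -> float:
    """Mean absolute second difference of a sequence of hand positions.

    Steady motion gives values near zero; abrupt reversals give large
    values. This is an assumed, simplified measure of movement quality.
    """
    if len(samples) < 3:
        raise ValueError("need at least three samples")
    second_diffs = [
        abs(c - 2 * b + a)
        for a, b, c in zip(samples, samples[1:], samples[2:])
    ]
    return sum(second_diffs) / len(second_diffs)


def classify_motion(samples: Sequence[float], threshold: float = 0.1) -> str:
    """Label a gesture 'jagged' or 'smooth' using a hypothetical threshold."""
    return "jagged" if jaggedness(samples) > threshold else "smooth"


# Hypothetical hand positions sampled at a fixed rate.
steady_sweep = [0.0, 0.1, 0.2, 0.3, 0.4]
back_and_forth = [0.0, 0.5, 0.0, 0.5, 0.0]
print(classify_motion(steady_sweep))    # smooth
print(classify_motion(back_and_forth))  # jagged
```

A label like this could then be mapped to a musical parameter, for example a calmer or more agitated texture, which is the kind of mapping the interview describes.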

So we made actually a musical instrument and then a little opera magic trick for Penn and Teller which is based on sitting on this chair and playing melodies and harmonies and rhythms just by moving your hands. And it was great. And I sort of thought of it as a virtuosic instrument for anybody. I mean everybody can move their hands, but you kind of have to sit down, learn where the sounds are, learn how to shape them, learn how to communicate this to somebody. So, it was a real breakthrough. And we started making a lot of instruments for the general public.

We did a big project at the Lincoln Center for the Lincoln Center Festival called “The Brain Opera” where we made a whole orchestra of about 75 instruments, about 10 different models. We called it “the Mind Forest.” We filled up the lobby of the Juilliard Theater, and people would come in and you’d be surrounded with this web of instruments, like a driving game where you could drive musical notes through pathways. Depending on how you drove the music would change. Tabletops where you could move your fingers and change the music, big walls where you could move your whole body; same electricity system, to change big masses of sound. Percussion instruments that looked like these rubber organic pads. Hundreds of them all over the room. So, the audience got to experiment with these and we put on a performance every hour at the Brain Opera, but half of it was prepared by me ahead of time, half of it we took what the audience had just done with these instruments. So, it was a collaboration through these instruments with the audience.

And around that time, I had a lot of really interesting students in my group, a lot of them have gone on to do really, really interesting things in all these kinds of areas. How to make music accessible to the general public.

A couple of these students started a company right near MIT, it’s still there. The company is called Harmonix. And they started taking these same, especially this idea of a driving game, where I could drive a musical note through a series of roads. They actually took some joysticks and had a piece of music that would play. And depending on how you moved – if you moved the right joystick, it would push this music to different harmonies; if you moved the left joystick, it would make the rhythm more complex. So, just by moving the joysticks, anybody could modify this music. And they did work like that for about seven or eight years. They made some games that were kind of okay. They were actually very good games. They didn’t sell all that much. And then somebody came to them and said, “Did you ever think of using – making a plastic guitar and what would it be like if you took exactly the same software and had somebody with a guitar interface instead of these joysticks?”

So we all talked about that. They made this game called Guitar Hero and nobody expected it to be a success. And actually when Guitar Hero first came out, the big, like, Best Buy and Target and all those places bought very few of them. But the fact that it was a guitar interface and not joysticks, so many people had imagined what it would feel like to play a guitar. And the game play, the idea that it wasn’t just moving music around with joysticks, but you were being graded on how accurate you were and it was very easy to tell how well you were doing. And as we know, it became a success very fast. So, that came directly out of the work on the bow and the cello and that turning into this sensor chair and all that software went into Harmonix and is now out there as Guitar Hero and Rock Band.

Recorded on January 14, 2010