Making computer chips act more like brain cells

The human brain is an amazing computing machine. Weighing only three pounds or so, it can process information a thousand times faster than the fastest supercomputer, store a thousand times more information than a powerful laptop, and do it all using no more energy than a 20-watt lightbulb.

Researchers are trying to replicate this success using soft, flexible organic materials that can operate like biological neurons and someday might even be able to interconnect with them. Eventually, soft “neuromorphic” computer chips could be implanted directly into the brain, allowing people to control an artificial arm or a computer monitor simply by thinking about it.

Like real neurons — but unlike conventional computer chips — these new devices can send and receive both chemical and electrical signals. “Your brain works with chemicals, with neurotransmitters like dopamine and serotonin. Our materials are able to interact electrochemically with them,” says Alberto Salleo, a materials scientist at Stanford University who wrote about the potential for organic neuromorphic devices in the 2021 Annual Review of Materials Research.

Salleo and other researchers have used these soft organic materials to create electronic devices that act like transistors (which amplify and switch electrical signals), memory cells (which store information) and other basic electronic components.

The work grows out of an increasing interest in neuromorphic computer circuits that mimic how human neural connections, or synapses, work. These circuits, whether made of silicon, metal or organic materials, work less like those in digital computers and more like the networks of neurons in the human brain.

Conventional digital computers work one step at a time, and their architecture creates a fundamental division between calculation and memory. This division means that ones and zeroes must be shuttled back and forth between the processor and the memory, creating a bottleneck for both speed and energy use.

The brain does things differently. An individual neuron receives signals from many other neurons, and all these signals together add up to affect the electrical state of the receiving neuron. In effect, each neuron serves as both a calculating device — integrating the value of all the signals it has received — and a memory device: storing the value of all of those combined signals as an infinitely variable analog value, rather than the zero-or-one of digital computers.
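
To make the idea concrete, here is a minimal Python sketch of a neuron that both computes and remembers. It is a toy, not a biological model: the class name `ToyNeuron` and the per-input weights (standing in for how strongly each incoming connection counts) are hypothetical choices made for illustration.

```python
class ToyNeuron:
    """Hypothetical toy neuron: one object that both calculates and stores."""

    def __init__(self, weights):
        self.weights = list(weights)  # assumed strength of each incoming connection
        self.state = 0.0              # continuous (analog) value, not limited to 0 or 1

    def receive(self, signals):
        if len(signals) != len(self.weights):
            raise ValueError("expected one signal per incoming connection")
        # Calculation: all incoming signals are combined in a single step.
        total = sum(w * s for w, s in zip(self.weights, signals))
        # Memory: the result is kept in the same place it was computed.
        self.state += total
        return self.state


neuron = ToyNeuron([0.5, -0.25, 1.0])
neuron.receive([1.0, 2.0, 0.3])
neuron.receive([0.0, 1.0, 0.5])
print(neuron.state)
```

Nothing is copied to a separate memory between the two calls; the running value simply persists inside the neuron, which is the contrast with the processor-and-memory split described above.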

Researchers have developed a number of different “memristive” devices that mimic this ability. Running an electric current through one of these devices changes its electrical resistance. Like biological neurons, the devices calculate by adding up the values of all the currents they have been exposed to, and they remember the result as the value their resistance settles at.
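
The same accumulate-and-remember behavior can be sketched for an idealized memristive device. This assumes, purely for illustration, that the device's internal state rises in proportion to the charge (current multiplied by time) that has passed through it, and that its conductance, the inverse of resistance, varies linearly with that state. The class `ToyMemristor` and all numeric values are hypothetical; real devices are considerably less tidy.

```python
class ToyMemristor:
    """Idealized memristive device (hypothetical model, illustrative values)."""

    def __init__(self, g_min=1e-6, g_max=1e-4, k=1e5):
        self.g_min = g_min  # lowest conductance, in siemens
        self.g_max = g_max  # highest conductance, in siemens
        self.k = k          # assumed change in state per coulomb of charge
        self.w = 0.0        # internal state, kept between 0 and 1

    @property
    def conductance(self):
        # Conductance interpolates linearly between its two bounds.
        return self.g_min + (self.g_max - self.g_min) * self.w

    @property
    def resistance(self):
        return 1.0 / self.conductance

    def apply_current(self, current, duration):
        # Calculate: the charge that flows is current (amperes) times time (seconds).
        charge = current * duration
        # Remember: add it to the running total held in the state, within limits.
        self.w = min(1.0, max(0.0, self.w + self.k * charge))


device = ToyMemristor()
r_before = device.resistance      # 1 / 1e-6 S, about 1 megohm
device.apply_current(1e-4, 1e-3)  # 100 microamps for 1 millisecond
device.apply_current(2e-4, 1e-3)  # 200 microamps for 1 millisecond
print(device.w, device.resistance)
```

Because the state depends only on the total charge, the two pulses give the same result in either order as long as the state stays within its limits, which is the "adding up" described above. A current in the opposite direction (a negative value here) would push the state back down.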

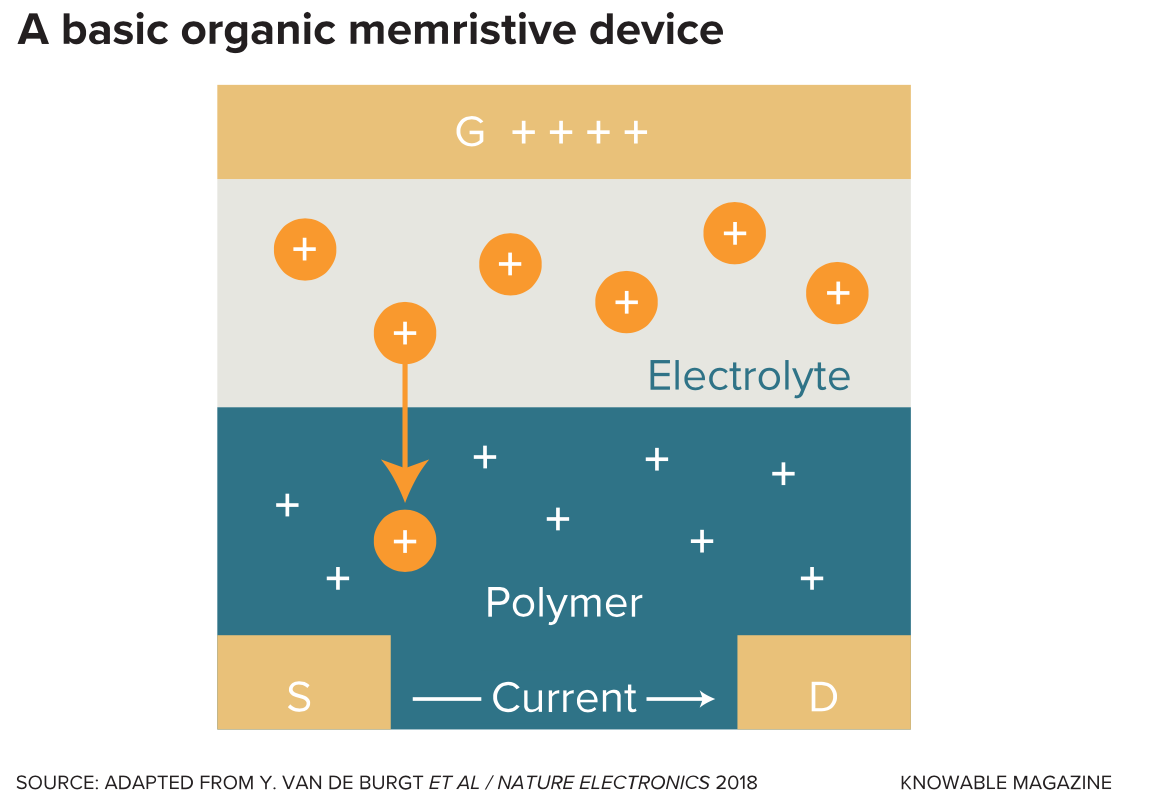

A simple organic memristor, for example, might have two layers of electrically conducting materials. When a voltage is applied, electric current drives positively charged ions from one layer into the other, changing how easily the second layer will conduct electricity the next time it is exposed to an electric current. (See diagram.) “It’s a way of letting the physics do the computing,” says Matthew Marinella, a computer engineer at Arizona State University in Tempe who researches neuromorphic computing.
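
One common reading of Marinella's phrase, offered here as an assumption since the article does not describe circuit layouts, is that reading stored values out of such devices performs arithmetic on its own. Each device passes a current equal to its conductance times the applied voltage (Ohm's law, a multiplication), and currents that meet on a shared wire add together (Kirchhoff's current law, an addition). The function name `column_current` and the values below are hypothetical.

```python
def column_current(conductances, voltages):
    """Total current from several devices that share one output wire.

    Idealized sketch: ignores wire resistance, noise and device nonlinearity.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one voltage per device")
    # Ohm's law per device: I = G * V, so each device performs a multiplication.
    # Kirchhoff's current law at the shared wire: the currents simply add.
    return sum(g * v for g, v in zip(conductances, voltages))


stored = [1e-5, 5e-5, 2e-5]  # conductances acting as stored values, in siemens
inputs = [0.2, 0.1, 0.0]     # input voltages, in volts
total = column_current(stored, inputs)
print(total)
```

In software this weighted sum takes a loop of multiplications and additions; in the idealized circuit it is just the current measured on one wire.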

The technique also liberates the computer from strictly binary values. “When you have classical computer memory, it’s either a zero or a one. We make a memory that could be any value between zero and one. So you can tune it in an analog fashion,” Salleo says.
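
A common way to tune an analog cell to a value between zero and one is to apply small pulses and check the result after each one, a so-called program-and-verify loop. The article does not say how Salleo's group sets its devices, so this is only a minimal sketch of that general idea, using a hypothetical cell whose value moves by a fixed step per pulse; `tune_cell` and its parameters are illustrative.

```python
def tune_cell(target, step=0.01, tolerance=0.006, max_pulses=1000):
    """Nudge a hypothetical analog memory cell toward a target in [0, 1].

    Reads the cell, applies one small pulse in the needed direction, and
    repeats until the value is within tolerance. The fixed step is an
    idealization; real devices respond unevenly to identical pulses.
    """
    if not 0.0 <= target <= 1.0:
        raise ValueError("target must lie between 0 and 1")
    value = 0.0  # the cell starts fully reset
    for pulses in range(max_pulses):
        error = target - value
        if abs(error) <= tolerance:  # verify: close enough, stop pulsing
            return value, pulses
        # Program: raise or lower the stored value by one small increment.
        value += step if error > 0 else -step
        value = min(1.0, max(0.0, value))
    return value, max_pulses


value, pulses = tune_cell(0.37)
print(value, pulses)
```

A binary cell asked to store 0.37 would have to round it to 0, while the analog cell lands within the chosen tolerance. The tolerance is set slightly larger than half a step so the loop cannot bounce back and forth around the target indefinitely.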

At the moment, most memristors and related devices aren’t based on organic materials but use standard silicon chip technology. Some are even used commercially as a way of speeding up artificial intelligence programs. But organic components have the potential to do the job faster while using less energy, Salleo says. Better yet, they could be designed to integrate with your own brain. The materials are soft and flexible, and also have electrochemical properties that allow them to interact with biological neurons.

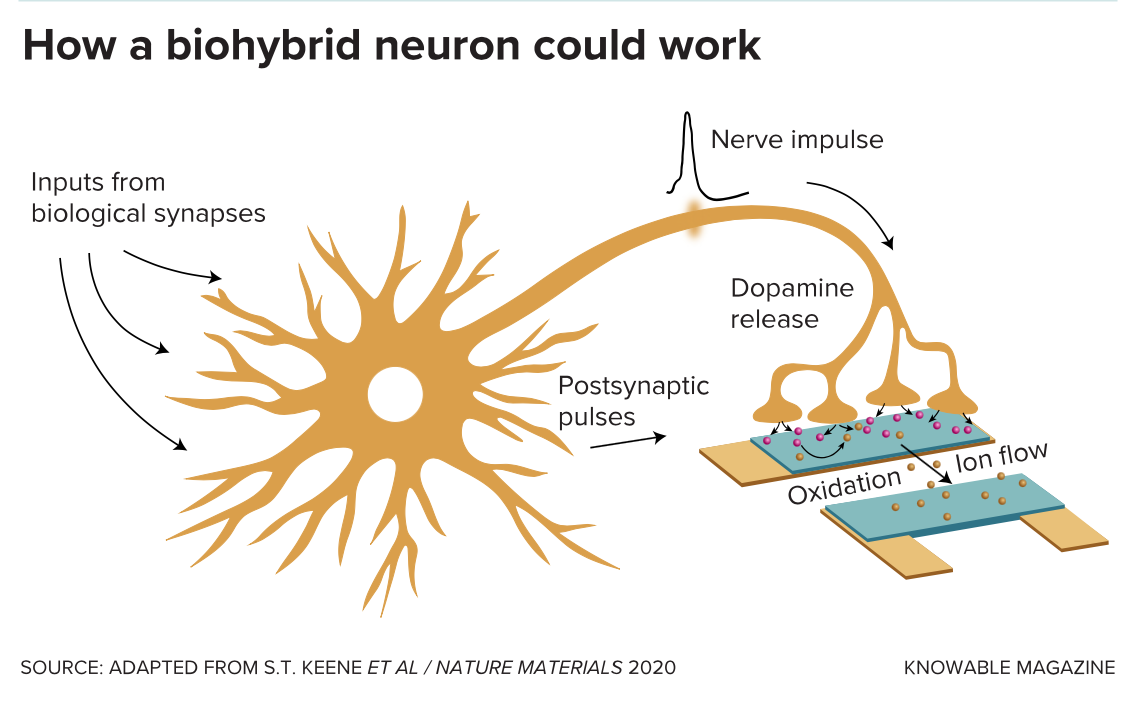

For instance, Francesca Santoro, an electrical engineer now at RWTH Aachen University in Germany, is developing a polymer device that takes input from real cells and “learns” from it. In her device, the cells are separated from the artificial neuron by a small space, similar to the synapses that separate real neurons from one another. As the cells produce dopamine, a nerve-signaling chemical, the dopamine changes the electrical state of the artificial half of the device. The more dopamine the cells produce, the more the electrical state of the artificial neuron changes, just as you might see with two biological neurons. (See diagram.) “Our ultimate goal is really to design electronics which look like neurons and act like neurons,” Santoro says.

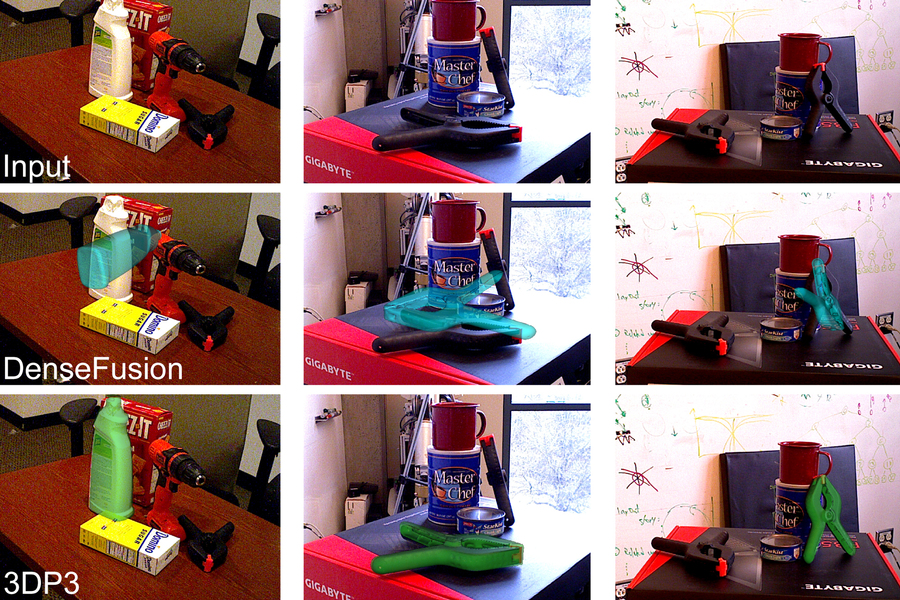

The approach could offer a better way to use brain activity to drive prosthetics or computer monitors. Today’s systems use standard electronics, including electrodes that can pick up only broad patterns of electrical activity. And the equipment is bulky and requires external computers to operate.

Flexible, neuromorphic circuits could improve this in at least two ways. They would be capable of translating neural signals in a much more granular way, responding to signals from individual neurons. And the devices might also be able to handle some of the necessary computations themselves, Salleo says, which could save energy and boost processing speed.

Low-level, decentralized systems of this sort — with small, neuromorphic computers processing information as it is received by local sensors — are a promising avenue for neuromorphic computing, Salleo and Santoro say. “The fact that they so nicely resemble the electrical operation of neurons makes them ideal for physical and electrical coupling with neuronal tissue,” Santoro says, “and ultimately the brain.”

This article originally appeared in Knowable Magazine, a nonprofit publication dedicated to making scientific knowledge accessible to all.