Our Dangerous Inability to Agree on What is TRUE

There were a lot of thoughtful comments on my observations last week about the ethics of denying that climate change is real. Many felt I was arrogant, since my case was predicated on my belief that climate change is real. In their view, I had assumed that I am rational and right, and that the deniers are wrong because their underlying biases blind them to a truth I am smart enough to see. After all, my critics said, my underlying biases influence me too. How can I claim I’m right, and they’re wrong, if my perceptions are as subjective as theirs?

This fair challenge (I do apologize to those who felt I sounded arrogant) raises a far more profound question than who is right or wrong about climate change. Given that human cognition is never the product of pure, dispassionate reason, but a subjective interpretation of the facts based on our feelings, biases, and instincts, when can we ever say that we know who is right and who is wrong about anything? When can we declare a fact so established that it’s fair to say, without being called arrogant, that those who deny this truth don’t just disagree…they’re just plain wrong? When can we know…with certainty…that anything is true? And more important, what happens to us if we can’t agree?

This isn’t about matters of faith, or questions about ultimately unknowable things, which by definition cannot be established by fact. This is a question about what is knowable and provable by careful, objective scientific inquiry, a process that rigorously applies skepticism precisely to establish what, beyond any reasonable doubt, is in fact true. The way evolution has been established. The way we have established that vaccines don’t cause autism, or that cigarettes do cause cancer, or the way science established, against accepted truth and deep religious belief at the time, that the earth revolves around the sun, and not the other way around.

With enough careful investigation and scrupulously challenged evidence, we can establish knowable truths that are not just the product of our subjective motivated reasoning. We can apply our powers of reason and our ability to objectively analyze the facts, and get beyond the point where what we ‘know’ is just an interpretation of the evidence, filtered subconsciously through whom we trust and through our biases and instincts. We can get to the point where, if someone wants to continue to believe that the sun revolves around the earth, or that vaccines cause autism, or that evolution is a deceit, it is no longer arrogant, though it may still be provocative, to call those people wrong.

This matters for social animals like us, whose safety and very survival ultimately depend on our ability to coexist. Views that have more to do with competing tribal biases than with objective interpretations of the evidence create destructive and violent conflict. Denial of scientifically established ‘truth’ causes all sorts of serious direct harms. Consider a few examples:

• The widespread faith-based rejection of evolution feeds intense polarization.

• Continued fear of vaccines is allowing nearly eradicated diseases to return.

• Those who deny the evidence that genetically modified food is safe are also denying millions of people the immense potential benefits of that technology.

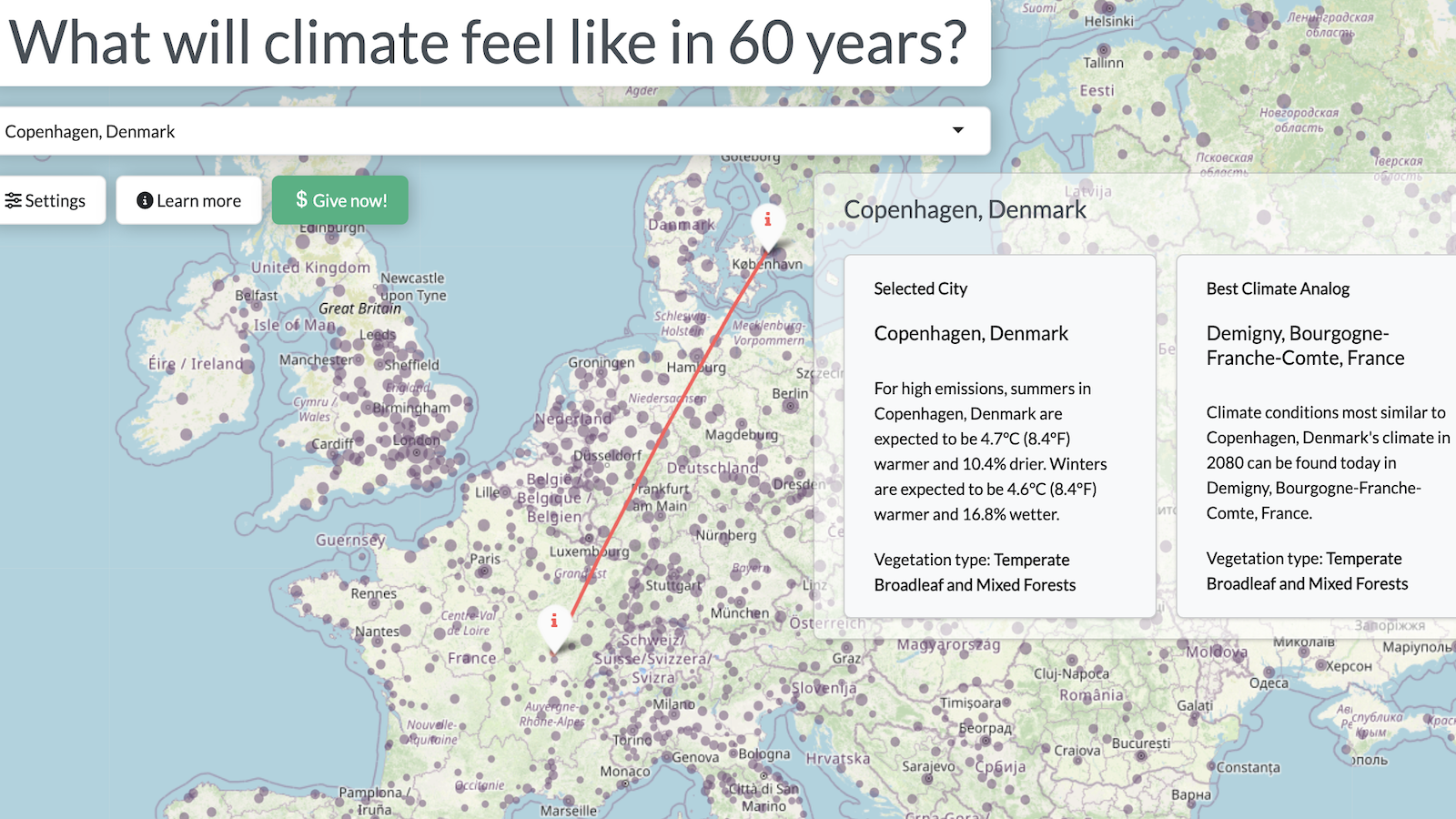

• Denying the powerful evidence for climate change puts us all in serious jeopardy should that evidence prove to be true.

To address these harms, we need to understand why we often have trouble agreeing on what is true (what some have labeled science denialism). Social science has taught us that human cognition is innately, and inescapably, a process of interpreting the hard data about our world (its sights and sounds and smells and facts and ideas) through subjective affective filters that help us turn those facts into the judgments and choices and behaviors that help us survive. The brain’s imperative, after all, is not to reason. Its job is survival, and subjective cognitive biases and instincts have developed to help us make sense of information in the pursuit of safety, not so that we might come to know ‘THE universal absolute truth’. This subjective cognition is built-in, subconscious, beyond free will, and unavoidably leads to different interpretations of the same facts.

But here is a truth with which I hope we can all agree. Our subjective system of cognition can be dangerous. It can produce perceptions that conflict with the evidence, what I call The Perception Gap, which can in turn produce profound harm. The Perception Gap can lead to disagreements that create destructive and violent social conflict, to dangerous personal choices that feel safe but aren’t, and to policies more consistent with how we feel than with what is in fact in our best interest. The Perception Gap may be more dangerous than any individual risk we face. We need to recognize the greater threat that our subjective system of cognition can pose and, in the name of our own safety and the welfare of the society on which we depend, do our very best to rise above it or, when we can’t, account for this very real danger in the policies we adopt.

Let me close with where we began, using the example of climate change to make the larger case, quoting David Roberts of