Google Wants to Take the Steering Wheel out of Its Autonomous Cars, Doesn’t Trust Humans

Robots could be considered legal drivers in the United States. Human occupants wouldn’t need a valid license to ride in the vehicle, because the software would be its legal “driver.”

Late last year, Google submitted a proposal to the National Highway Traffic Safety Administration (NHTSA) for an autonomous car that has “no need for a human driver.”

The NHTSA responded this month to Google’s request in a letter, reported on by Reuters, which said, “NHTSA will interpret ‘driver’ in the context of Google’s described motor vehicle design as referring to the (self-driving system), and not to any of the vehicle occupants. We agree with Google its (self-driving car) will not have a ‘driver’ in the traditional sense that vehicles have had drivers during the last more than 100 years.”

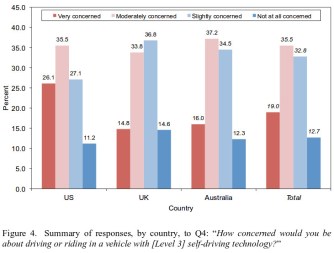

Robots make people skittish; the idea of taking control away from a person and entrusting their life to a piece of software is unsettling to some. That unease may have won out in California, where the government is considering a ban on the use of unmanned cars before they’ve even come on the market.

“Safety is our highest priority and primary motivator as we do this,” Google spokesman Johnny Luu wrote in a statement. “We’re gravely disappointed that California is already writing a ceiling on the potential for fully self-driving cars to help all of us who live here.”

Brad Templeton, a software architect, civil rights advocate, and entrepreneur, pointed out in his blog, “In the normal history of car safety regulation, technologies are built and deployed by vendors and are usually on the road for decades before they get regulated, but people are so afraid of robots that this normal approach may not happen here.”

There are a lot of legal and moral questions surrounding autonomous vehicles. Should manufacturers notify passengers about the car’s moral compass (e.g., would the AI crash the car if it meant saving a group of pedestrians)? And if the car did crash, who’s at fault?

“We’re going to need new kinds of laws that deal with the consequences of well-intentioned autonomous actions that robots take,” says Jerry Kaplan, who teaches Impact of Artificial Intelligence in the Computer Science Department at Stanford University.

When autonomous cars do become the popular mode of transportation, their adoption will likely bring more benefit than tragedy. Drunk driving would become a non-issue; the elderly would gain independence; and the environment would benefit from more efficient driving.

According to the NHTSA letter, Google has expressed “concern that providing human occupants of the vehicle with mechanisms to control things like steering, acceleration, braking … could be detrimental to safety because the human occupants could attempt to override the (self-driving system’s) decisions.”

“I think 100 years from now, people will look back and say, ‘Really? People used to drive their cars? What are they, insane?’ Humans are the worst control system to put in front of a car,” says Peter H. Diamandis, the chairman and CEO of the XPRIZE Foundation.

***

Photo Credit: NOAH BERGER / Stringer / Getty

Graph courtesy of UMichigan study: A survey of public opinion about autonomous and self-driving vehicles in the U.S., the U.K., and Australia.