Driverless Cars Will Create Very Inattentive Riders

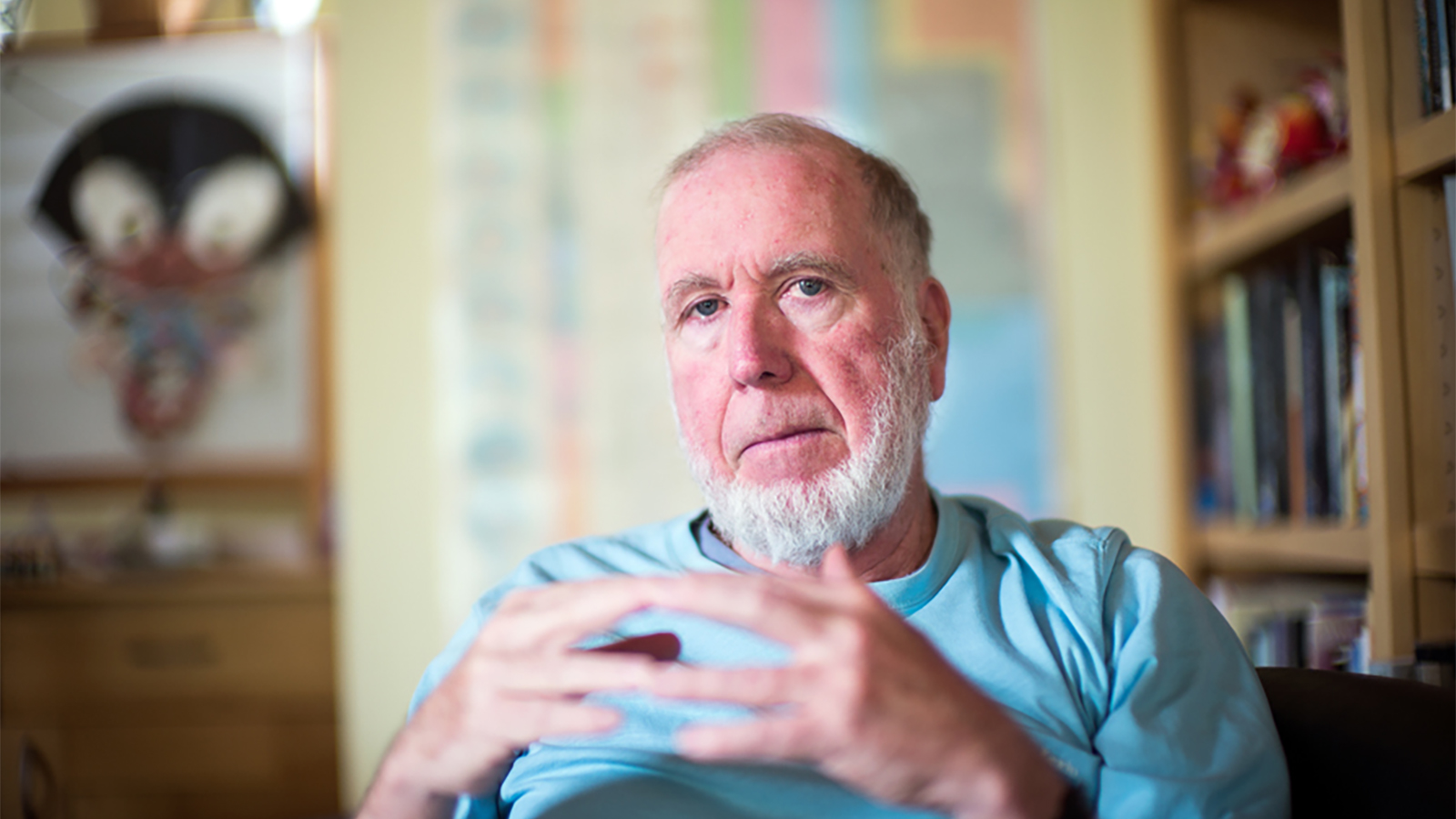

Driverless cars require trust. They require us to trust programmers who code in automatic responses: slow down when it’s raining, keep a certain distance from the car in front of you, and so on. That means giving up control, says Jerry Kaplan, a fellow at the Stanford Center for Legal Informatics. The problem is that many of us aren’t ready to hand over control of our cars. Kaplan asks us to consider the number of social interactions and ethical scenarios we’re handing over to a self-driving car, and he cites the “Trolley Problem” as one of his main concerns.

The Trolley Problem is a thought experiment in ethics. The setup is simple: There’s a runaway trolley barreling down the tracks about to hit five people tied up — they can’t move. You, a bystander, have the option to pull a lever, which will divert the trolley, saving the five people, but killing one person sitting on the other track. What do you do?

Brad Templeton, a consultant on Google’s autonomous vehicles, is well aware of the proposed Trolley Problem, and asks people to consider real life. “In reality, such choices are extremely rare,” he writes in his blog. “How often have you had to make such a decision, or heard of somebody making one? Ideal handling of such situations is difficult to decide, but there are many other issues to decide as well.”

Engineers are aware of these scenarios and have been working to solve many of them for decades, Templeton says. “It’s extremely rare for a newcomer to come up with a scenario they have not thought of,” he wrote in another post. “In addition, developers are putting cars on the road, with over a million miles in Google’s case, to find the situations that they didn’t think of just by thinking and driving themselves.”

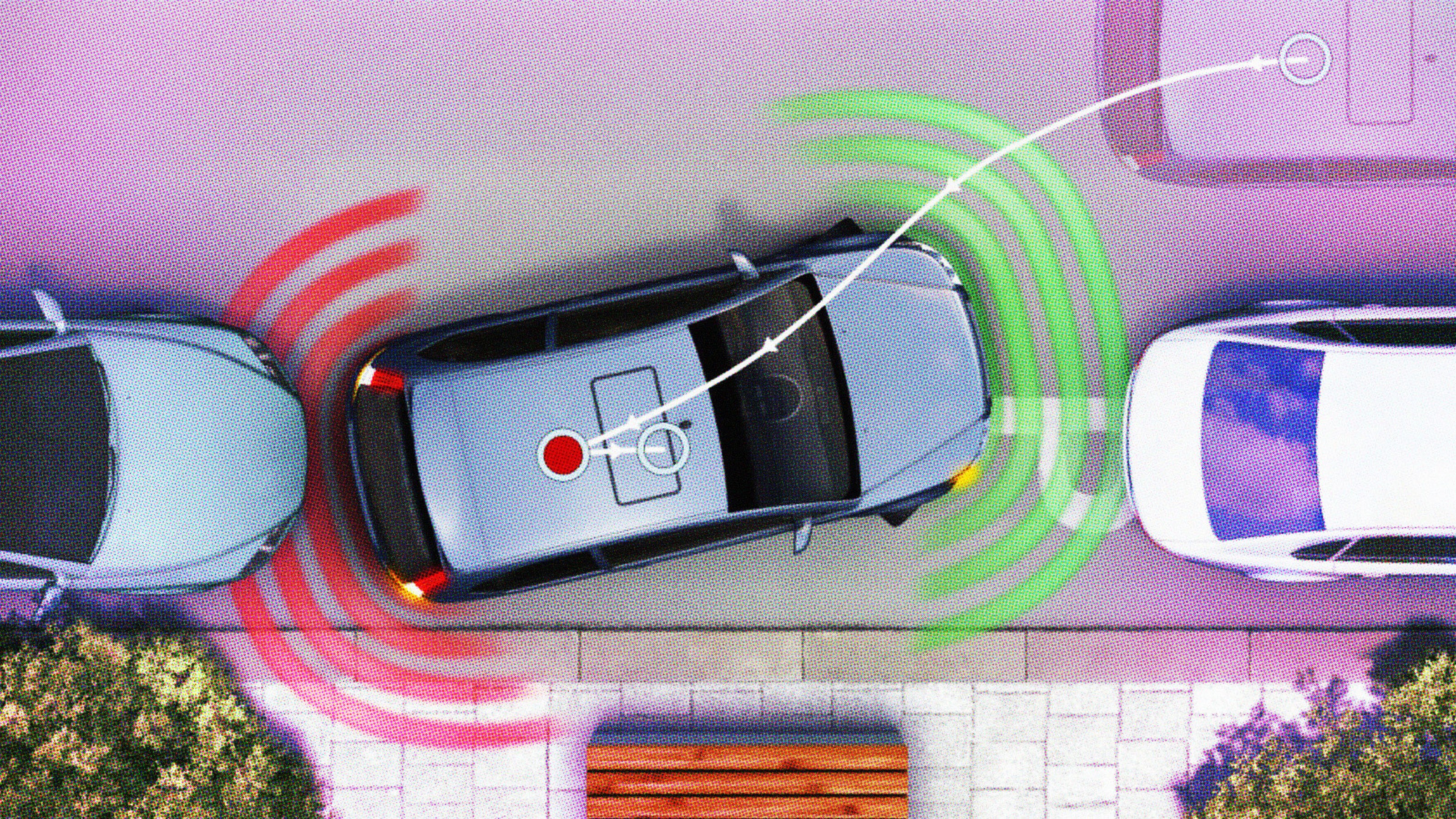

Still, people dwell on scenarios that computers struggle to handle. In other words, we still want control. When an autonomous car does encounter unknowns, engineers suggest turning control over to humans. That’s a solution being investigated by Dr. Anuj K. Pradhan at the University of Michigan Transportation Research Institute.

Pradhan runs a simulation designed to test two things: how people react to being inside an autonomous car, and how they respond when a driving situation becomes too complex for the car to handle.

BBC Future, which tried the simulator, described the handover this way: “When a computer voice shouts ‘autonomous mode disengaged’ and you look up to see the back of a truck that you are hurtling towards at high speed. Maybe you will be able to respond quickly enough, but maybe not. You are at a disadvantage. From BBC Future’s experience in the simulator, and in other experimental autonomous vehicles such as Google’s cars, it is pretty easy to become relatively relaxed.”

Ultimately, we shouldn’t throw the baby out with the bathwater. These ethical dilemmas are small compared to the good autonomous vehicles could do. More efficient driving means lower carbon emissions. Autonomous vehicles could also prevent many accidents, since the events leading up to a crash are mostly attributed to human error.

In a 2008 report, the National Highway Traffic Safety Administration found “the critical reason for the critical pre-crash event was attributed to the driver in a large proportion of the crashes. Many of these critical reasons included a failure to correctly recognize the situation (recognition errors), poor driving decisions (decision errors), or driver performance errors.”

Photo Credit: Justin Sullivan / Getty Staff