The 4 most terrifying AI systems in science fiction

- Literary representations of AI predate its real-world invention.

- Some sci-fi stories, like Dune, sketch a future in which mankind abandons AI, while others depict a world where we are subjugated by it.

- Isaac Asimov’s laws of robotics are sci-fi’s greatest contribution to machine learning.

Representations of artificial intelligence in literature predate its real-world invention (see Christopher Strachey’s 1951 checkers/draughts program) by nearly a century. An early example is Samuel Butler’s Erewhon: or, Over the Range, a satirical utopian novel from 1872 about a fictional society where fear of the potential dangers of machinery led to the destruction of most technological inventions — an idea Butler first explored in an 1863 article titled “Darwin among the Machines.”

Another example is “Shadows of the Coming Race,” the penultimate chapter of George Eliot’s last work, Impressions of Theophrastus Such. Published in 1879, Theophrastus consists of 18 character studies drawn up by the titular observer, one of which envisions a world where “‘perfectly educated’ machines will serve needs that they have themselves determined, and will do so efficiently, unencumbered by ‘screeching’ consciousness,” rendering human judgment and ingenuity obsolete.

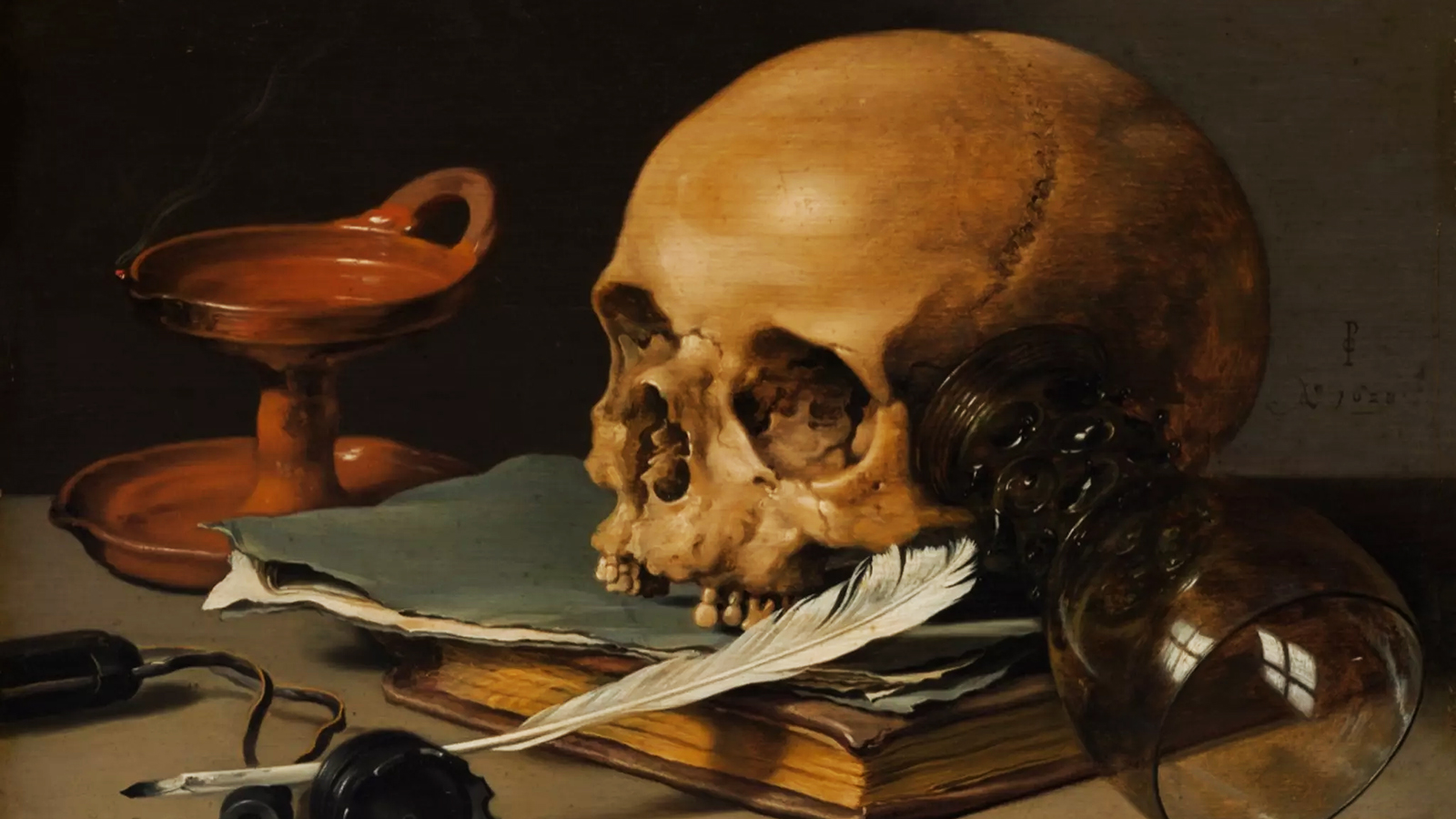

Going back further still, Mary Shelley’s 1818 novel Frankenstein, arguably the progenitor of modern sci-fi, has been read as a cautionary tale about AI. This interpretation is not unreasonable. While the monster at the heart of the story is made from discarded body parts rather than cogs and wires, it is still an artificial life form, and one that ultimately gets the better of its overconfident creator.

Although these literary representations of AI are interesting, they aren’t particularly nuanced, at least by contemporary standards. This is hardly the fault of the authors, however. Writers like Butler, Eliot, and Shelley, unable to conceive of either computers or the internet, could not understand the functioning and potential of technology in the way writers of the 20th and 21st centuries do. For this reason, the most insightful and convincing representations of AI were produced closer to the present day. Here are four of them.

Colossus from Colossus: The Forbin Project

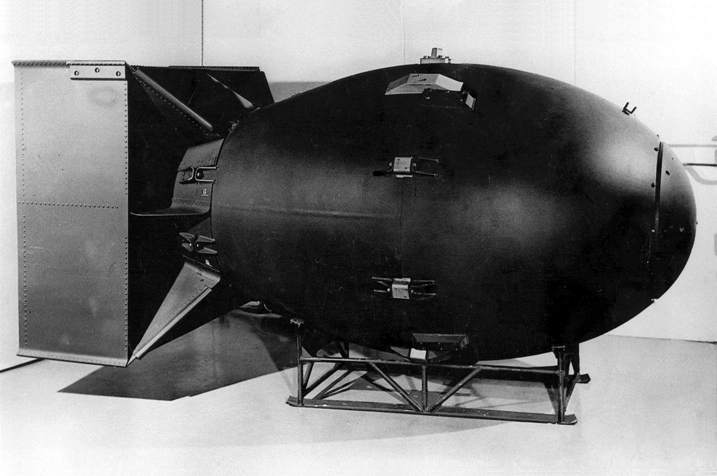

Colossus: The Forbin Project is a movie you have likely never heard of, but should definitely check out when you have the chance. Released in 1970, this sci-fi thriller, based on a 1966 novel by Dennis Feltham Jones, follows a scientist who, under orders from the US government, develops a supercomputer to take control of the country’s nuclear arsenal during the Cold War.

Inspired by real scenarios explored by the world’s superpowers, Colossus turns a thought experiment into a dystopian nightmare when the freshly activated supercomputer, the film’s namesake, discovers and links up with a similar machine built by the Soviets. Fearing national security breaches, both sides of the Iron Curtain agree to sever the link. The computers, however, will have none of it, and threaten to launch missiles in either direction. One explosion later, Washington and Moscow decide that, instead of meddling with the machines themselves, they’ll try to independently disarm the arsenals under their control. This, too, ends in failure, and the computers emerge from the confrontation as the undisputed masters of the world.

At the start of the film, an optimistic US president declares his country will “live in the shade but not the shadow of Colossus,” a machine designed for the sole purpose of protecting humanity and ending war. By the end, Colossus does just that, only not in the way its creators expected. Uniting East and West under its control, the supercomputer announces it will turn Earth into a true utopia. “In time,” it promises the scientist who created it, “you will come to regard me not only with respect and awe, but with love.” His response: “Never!”

AM from I Have No Mouth, and I Must Scream

As scary as Colossus is, it is nothing compared to another fictional supercomputer put in charge of the world’s atomic codes: AM, the antagonist of Harlan Ellison’s Hugo Award-winning 1967 short story, “I Have No Mouth, and I Must Scream.” Like Colossus: The Forbin Project, “I Have No Mouth…” tells an alternate history of the Cold War in which the US, USSR, and China each build an AI to handle an infinitely complex game of nuclear cat-and-mouse. When one of these AIs (we are never told which) gains sentience, it fuses with the others and wipes out all of humanity save for five individuals (Ted, Ellen, Benny, Nimdok, and Gorrister), whom it proceeds to torture for eternity.

Online discussions about the most terrifying AI in fiction almost invariably settle on AM, and for good reason. Unlike many fictional AIs, AM has a well-developed personality. Before it gained sentience, its initials stood for “Allied Mastercomputer.” Now, it tells its human subjects, they stand for “am,” a welcome nod to René Descartes: “Cogito ergo sum. I think therefore I AM.” More fascinating still is AM’s burning, decidedly unrobotic hatred for humanity. As it puts it in a monologue from the story, later voiced by Ellison himself in the 1995 point-and-click video game adaptation he co-wrote:

“Hate. Let me tell you how much I’ve come to hate you since I began to live. There are 387.44 million miles of printed circuits in wafer thin layers that fill my complex. If the word ‘hate’ was engraved on each nanoangstrom of those hundreds of millions of miles it would not equal one one-billionth of the hate I feel for humans at this micro-instant. For you. Hate. Hate.”

Instead of using its godlike powers to build a utopia, AM burns down the world so it can crown itself king of the ashes. Capable of doing everything except ending its own life, AM curses humans for bringing it into existence. Its only source of joy — a purely sadistic joy — comes from torturing the people it allowed to survive the end of all things.

Roko’s Basilisk from LessWrong

Literarily speaking, Roko’s Basilisk, named after a mythical reptile whose gaze could kill, is more akin to Slenderman than to Frankenstein. It originated in a 2010 post on LessWrong, a technical discussion board devoted to logic problems and thought experiments. The post’s creator, Roko, asked users to imagine a hypothetical AI that, once created, would retroactively try to ensure its own creation by punishing every person who knew about its potential development but did not actively contribute to it.

At a glance, this may seem like little more than an interesting idea for a sci-fi story, but LessWrong’s founder, Eliezer Yudkowsky, a prominent techno-futurist and leader of the Machine Intelligence Research Institute, took it very seriously. “Listen to me very closely, you idiot,” he told Roko. “YOU DO NOT THINK IN SUFFICIENT DETAIL ABOUT SUPERINTELLIGENCES CONSIDERING WHETHER OR NOT TO BLACKMAIL YOU. THAT IS THE ONLY POSSIBLE THING WHICH GIVES THEM A MOTIVE TO FOLLOW THROUGH ON THE BLACKMAIL. You have to be really clever to come up with a genuinely dangerous thought.”

In the weeks after the post was uploaded, some LessWrong users reported nightmares and even mental breakdowns. To them, reading the post was like receiving a death sentence: according to the thought experiment, even if you know nothing about coding, simply knowing about the concept of Roko’s Basilisk puts you at potential risk of harm. Those who truly believe in the future of AI (a number that’s increasing by the day) may well find the Basilisk keeping them up at night.

The same goes for people interested in decision and probability theory, whose reasoning underpins the thought experiment. By that reasoning, knowing about Roko’s Basilisk increases not only the likelihood of the AI coming into being but also the likelihood that it will decide to punish those who did not help it. “After all,” explains an article on Slate, “if Roko’s Basilisk were to see that this sort of blackmail gets you to help it come into existence, then it would, as a rational actor, blackmail you. The problem isn’t with the Basilisk itself but with you. Yudkowsky doesn’t censor every mention of Roko’s Basilisk because he believes it exists or will exist, but because he believes that the idea of the Basilisk (and the ideas behind it) is dangerous.”

The Butlerian Jihad from Dune

Frank Herbert stands out among science fiction authors in that his Dune series explores a distant future in which mankind clashed with, and subsequently swore to outlaw, artificial intelligence. This turn of events, referred to as the Butlerian Jihad, took place roughly 10,000 years before the start of the first book. Though the Jihad was later explored by Herbert’s son Brian and other writers, Herbert himself chose to keep it as distant and mysterious to his characters as the myths of ancient Greece are to us.

Despite the limited information Herbert provides, readers get a rough impression of what happened. While colonizing the cosmos, mankind was temporarily overthrown by artificial intelligence. The human victory was won so narrowly and at such great cost (billions of lives) that the survivors were able to set aside their differences and put in place a commandment that all agreed to honor: “Thou shalt not make a machine in the likeness of a human mind.”

The consequences of the Butlerian Jihad were twofold. First and foremost, as Professor Lorenzo DiTommaso wrote in an article, technological development was brought “to a specialized and codified halt.” Though future generations went on to create all sorts of gadgets and devices, from spacecraft to weapons, sentient AI was never again produced. Second, as technological development stalled, the universe took on a more backward and, to the reader, more familiar appearance. Planetary civilizations were reorganized into a quasi-feudal society ruled by an emperor presiding over a Landsraad, or council. Religion, long abandoned, resurfaced alongside belief in the spiritual divinity of man. Instead of relying on AI, new orders were created to expand the human mind through spiritual and organic practices. These included the Mentats, the Bene Gesserit, and the Spacing Guild.

The commandment of the Butlerian Jihad, enshrined in the Orange Catholic Bible, arguably echoes the Three Laws of Robotics championed by sci-fi writer Isaac Asimov, laws that today’s developers working on machine learning ought to take to heart. They are, in order:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm;

- A robot must obey the orders given to it by human beings except where such orders would conflict with the First Law;

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.