Scientists find neurons that process language on different timescales

Using functional magnetic resonance imaging (fMRI), neuroscientists have identified several regions of the brain that are responsible for processing language. However, discovering the specific functions of neurons in those regions has proven difficult because fMRI, which measures changes in blood flow, doesn’t have high enough resolution to reveal what small populations of neurons are doing.

Now, using a more precise technique that involves recording electrical activity directly from the brain, MIT neuroscientists have identified different clusters of neurons that appear to process different amounts of linguistic context. These “temporal windows” range from just one word up to about six words.

The temporal windows may reflect different functions for each population, the researchers say. Populations with shorter windows may analyze the meanings of individual words, while those with longer windows may interpret more complex meanings created when words are strung together.

“This is the first time we see clear heterogeneity within the language network,” says Evelina Fedorenko, an associate professor of neuroscience at MIT. “Across dozens of fMRI experiments, these brain areas all seem to do the same thing, but it’s a large, distributed network, so there’s got to be some structure there. This is the first clear demonstration that there is structure, but the different neural populations are spatially interleaved so we can’t see these distinctions with fMRI.”

Fedorenko, who is also a member of MIT’s McGovern Institute for Brain Research, is the senior author of the study, which appeared in Nature Human Behaviour. MIT postdoc Tamar Regev and Harvard University graduate student Colton Casto are the lead authors of the paper.

Temporal windows

Functional MRI, which has helped scientists learn a great deal about the roles of different parts of the brain, works by measuring changes in blood flow in the brain. These measurements act as a proxy for neural activity during a particular task. However, each “voxel,” or three-dimensional chunk, of an fMRI image represents hundreds of thousands to millions of neurons and sums up activity across about two seconds, so it can’t reveal fine-grained detail about what those neurons are doing.

One way to get more detailed information about neural function is to record electrical activity using electrodes implanted in the brain. These data are hard to come by because this procedure is done only in patients who are already undergoing surgery for a neurological condition such as severe epilepsy.

“It can take a few years to get enough data for a task because these patients are relatively rare, and in a given patient electrodes are implanted in idiosyncratic locations based on clinical needs, so it takes a while to assemble a dataset with sufficient coverage of some target part of the cortex. But these data, of course, are the best kind of data we can get from human brains: You know exactly where you are spatially and you have very fine-grained temporal information,” Fedorenko says.

In a 2016 study, Fedorenko reported using this approach to study the language processing regions of six people. Electrical activity was recorded while the participants read four different types of language stimuli: complete sentences, lists of words, lists of non-words, and “jabberwocky” sentences — sentences that have grammatical structure but are made of nonsense words.

Those data showed that in some neural populations in language processing regions, activity would gradually build up over a period of several words when the participants were reading sentences. However, this did not happen when they read lists of words, lists of non-words, or jabberwocky sentences.

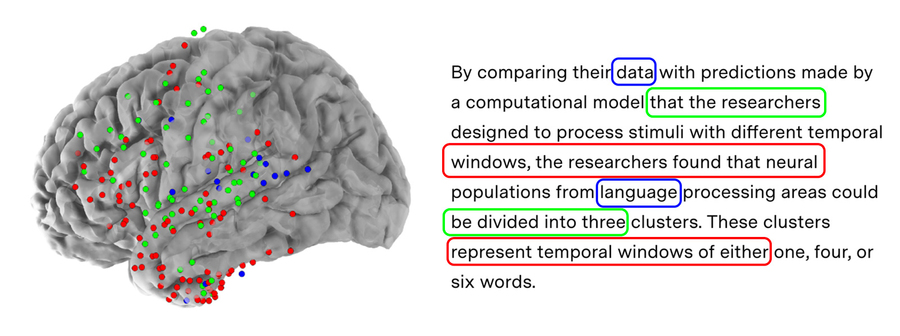

In the new study, Regev and Casto went back to those data and analyzed the temporal response profiles in greater detail. In their original dataset, they had recordings of electrical activity from 177 language-responsive electrodes across the six patients. Conservative estimates suggest that each electrode represents an average of activity from about 200,000 neurons. They also obtained new data from a second set of 16 patients, which included recordings from another 362 language-responsive electrodes.

When the researchers analyzed these data, they found that in some of the neural populations, activity would fluctuate up and down with each word. In others, however, activity would build up over multiple words before falling again, and yet others would show a steady buildup of neural activity over longer spans of words.

By comparing their data with predictions from a computational model they designed to process stimuli with different temporal windows, the researchers found that neural populations in language processing areas could be divided into three clusters. These clusters represent temporal windows of one, four, or six words.
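As a rough illustration of what a temporal window means here, the toy sketch below sums a per-word input over the most recent few words. It is not the model used in the study: the function name `windowed_response`, the eight-word input, and the assumption that every word contributes one unit of drive are all hypothetical choices made for illustration.

```python
def windowed_response(drive, window):
    """Sum the per-word drive over the most recent `window` words.

    Toy illustration only (hypothetical, not the study's model):
    `drive` is a list with one number per word, and `window` is the
    number of words the population is assumed to integrate over.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(drive[max(0, i - window + 1): i + 1])
        for i in range(len(drive))
    ]


# Hypothetical input: eight words, each contributing one unit of drive.
drive = [1] * 8

# The window sizes match the three clusters reported in the article.
for window in (1, 4, 6):
    print(window, windowed_response(drive, window))
```

In this toy setup, a one-word window simply tracks each word as it arrives, while the four- and six-word windows keep building for four or six words before leveling off. Real recordings are far noisier and also fall between words, so this only conveys the general idea of integrating over different spans.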

“It really looks like these neural populations integrate information across different timescales along the sentence,” Regev says.

Processing words and meaning

These differences in temporal window size would have been impossible to see using fMRI, the researchers say.

“At the resolution of fMRI, we don’t see much heterogeneity within language-responsive regions. If you localize in individual participants the voxels in their brain that are most responsive to language, you find that their responses to sentences, word lists, jabberwocky sentences and non-word lists are highly similar,” Casto says.

The researchers were also able to determine the anatomical locations where these clusters were found. Neural populations with the shortest temporal window were found predominantly in the posterior temporal lobe, though some were also found in the frontal or anterior temporal lobes. Neural populations from the two other clusters, with longer temporal windows, were spread more evenly throughout the temporal and frontal lobes.

Fedorenko’s lab now plans to study whether these timescales correspond to different functions. One possibility is that the shortest-timescale populations may be processing the meaning of a single word, while those with longer timescales interpret the meanings represented by multiple words.

“We already know that in the language network, there is sensitivity to how words go together and to the meanings of individual words,” Regev says. “So that could potentially map to what we’re finding, where the longest timescale is sensitive to things like syntax or relationships between words, and maybe the shortest timescale is more sensitive to features of single words or parts of them.”

The research was funded by the Zuckerman-CHE STEM Leadership Program, the Poitras Center for Psychiatric Disorders Research, the Kempner Institute for the Study of Natural and Artificial Intelligence at Harvard University, the U.S. National Institutes of Health, an American Epilepsy Society Research and Training Fellowship, the McDonnell Center for Systems Neuroscience, Fondazione Neurone, the McGovern Institute, MIT’s Department of Brain and Cognitive Sciences, and the Simons Center for the Social Brain.

Republished with permission of MIT News.