How to prove the Big Bang with an old TV set

- One of the wildest predictions of the Big Bang, which asserts that today’s Universe arose from an early, hot, dense state, is that there should be a leftover, low-energy bath of radiation permeating the whole of space.

- When you calculate what the wavelength of that radiation ought to be today, many billions of years later, it turns out to be just right to interact with an old television set’s “rabbit ear” antennae.

- If you turn an old TV set to channel 03, about 1% of that static-like “snow” you see originates from the Big Bang itself, enabling you to “discover” the Big Bang with an old TV set under the right conditions.

When it comes to the question of how our Universe came to be, science was late to the game. For innumerable generations, it was philosophers, theologians and poets who pontificated on the matter of our cosmic origins. But all of that changed in the 20th century, when theoretical, experimental, and observational developments in physics and astronomy finally brought these questions into the realm of testable science.

When the dust settled, cosmic expansion, the primeval abundances of the light elements, the Universe’s large-scale structure, and the cosmic microwave background together anointed the Big Bang as the hot, dense, expanding origin of our modern Universe. While it wasn’t until the mid-1960s that the cosmic microwave background was detected, a careful observer could have detected it in the most unlikely of places: on a run-of-the-mill television set.

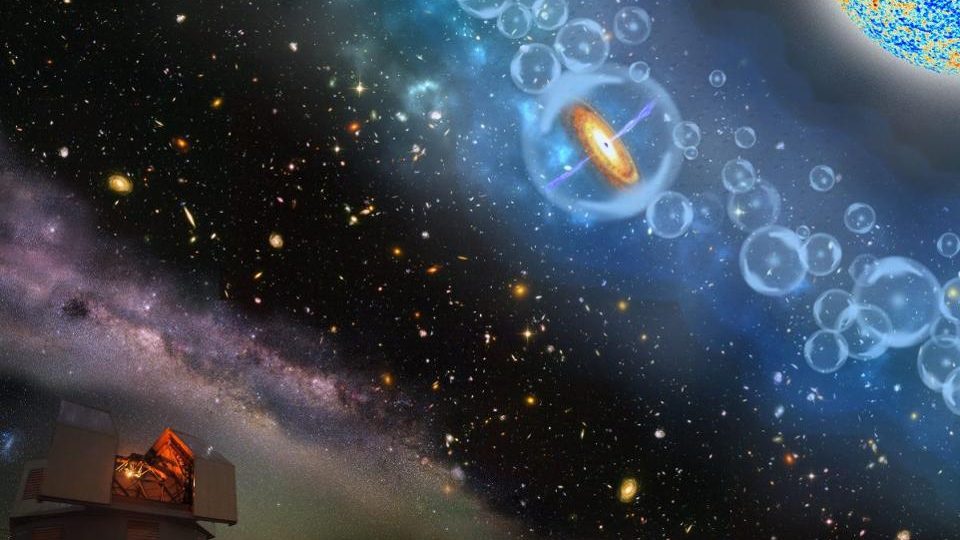

In order to understand how this works, we need to understand what the cosmic microwave background is. When we examine the Universe today, we find that it’s filled with galaxies: approximately 2 trillion of them that we can observe, according to the best modern estimates. The ones that are close by look a lot like ours does, as they are filled with stars that are very similar to the stars in our own galaxy.

This is what you’d expect if the physics that governed those other galaxies were the same as the physics in ours. Their stars would be made of protons, neutrons, and electrons, and their atoms would obey the same quantum rules that atoms in the Milky Way do. However, there’s a slight difference in the light we receive. Instead of the same atomic spectral lines that we find here at home, the light from the stars in other galaxies displays atomic transitions that are shifted.

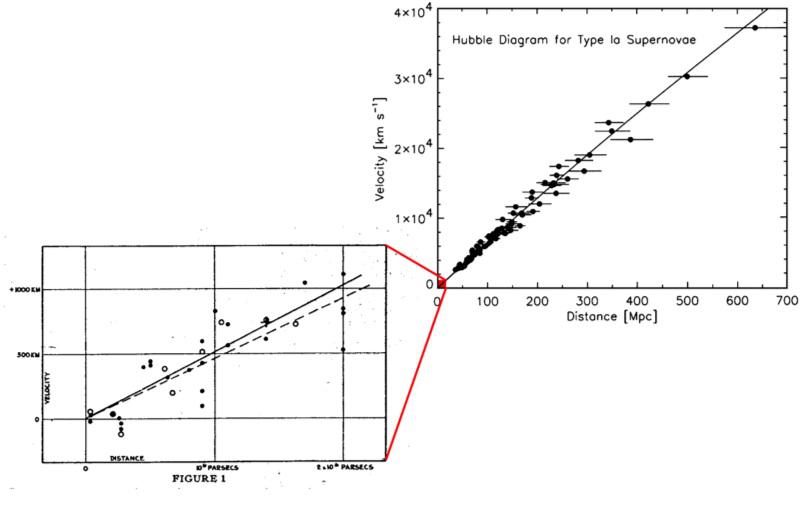

These shifts are unique to each particular galaxy, but they all follow a particular pattern: the farther away a galaxy is (on average), the greater the amount that its spectral lines are shifted toward the red part of the spectrum. The farther we look, the greater the shifts that we see.

Although there were many possible explanations for this observation, different ideas would give rise to different specific observable signatures. The light could be scattering off of intervening matter, which would redden it but also blur it, yet distant galaxies appear just as sharp as nearby ones. The light could be shifted because these galaxies were speeding away from a giant explosion, but if so, they would be sparser the farther away we get, yet the density of the Universe remains constant. Or the fabric of space itself could be expanding, where the more distant galaxies simply have the light shift by greater amounts as it travels throughout an expanding Universe.

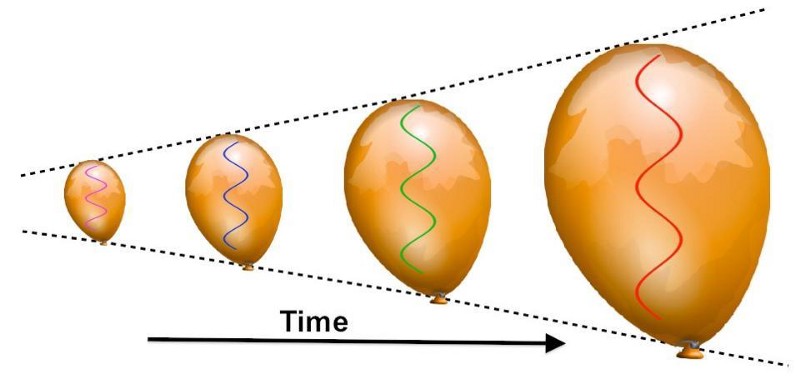

This last explanation turned out to be in spectacular agreement with our observations, and helped us understand that it was the fabric of space itself that was expanding as time progressed. The light is redder the farther away we look because the Universe has expanded over time, and the light traveling through it has its wavelength stretched by that expansion. The longer the light has been traveling, the greater the redshift due to the expansion.

As we move forward in time, emitted light gets shifted to larger wavelengths, which have lower temperatures and smaller energies. But that means if we view the Universe in the opposite fashion — by imagining it as it was farther back in time — we’d see light that had smaller wavelengths, with higher temperatures and greater energies. The farther back you extrapolate, the hotter and more energetic this radiation should get.
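In the standard notation of cosmology (the symbols below are not in the original text and are introduced here only for illustration), this stretching is usually written in terms of a redshift $z$, where $1 + z$ is the factor by which space has expanded since the light was emitted:

$$
\lambda_{\mathrm{observed}} = (1 + z)\,\lambda_{\mathrm{emitted}}, \qquad E_{\mathrm{photon}} = \frac{h c}{\lambda}, \qquad T(z) = (1 + z)\,T_{\mathrm{today}}
$$

Here $h$ is Planck’s constant and $c$ is the speed of light. A longer wavelength means a lower photon energy, and running the clock backward (larger $z$) means a hotter bath of radiation.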

Although it was a breathtaking theoretical leap, scientists (beginning with George Gamow in the 1940s) began extrapolating this property back farther and farther, until a critical threshold of a few thousand Kelvin was reached. At that point, the reasoning went, the radiation present would be energetic enough that some of the individual photons could ionize neutral hydrogen atoms: the building block of stars and the primary contents of our Universe.

When you transitioned from a Universe that was above that temperature threshold to one that was below it, the Universe would go from a state that was filled with ionized nuclei and electrons to one that was filled with neutral atoms. When matter is ionized, radiation scatters off of its free electrons; when matter is neutral, radiation passes right through those atoms. That transition marks a critical time in our Universe’s past, if this framework is correct.

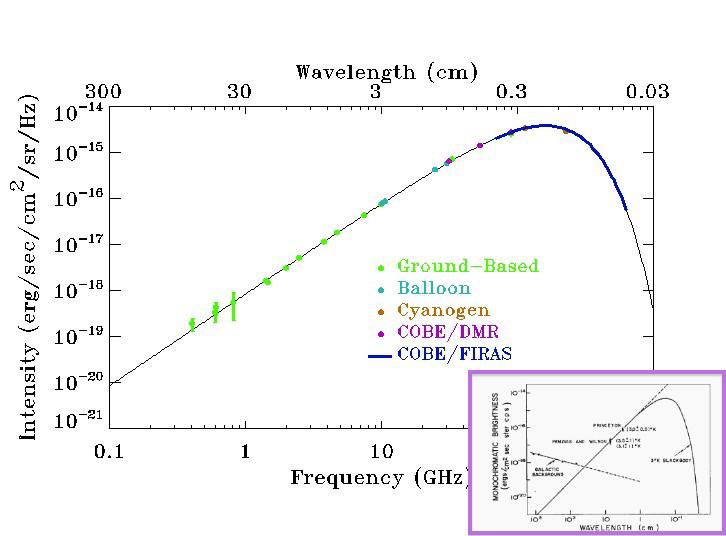

The spectacular consequence of this scenario is that today, that radiation would have cooled from a few thousand Kelvin to just a few degrees above absolute zero, as the Universe must have expanded by a factor of anywhere from hundreds to a few thousand since that epoch. It should remain even today as a background coming to us from all directions in space. It should have a specific set of spectral properties: a blackbody distribution. And it should be detectable somewhere in the range of microwave to radio frequencies.
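To make that cooling concrete, here is a minimal Python sketch. It assumes the standard result that a blackbody’s temperature drops in inverse proportion to the factor by which wavelengths have been stretched. The 3000 K starting temperature and the list of stretch factors are illustrative choices, not figures from the text, and the function name is hypothetical.

```python
# Sketch: how a bath of blackbody radiation cools as space expands.
# Assumption: temperature scales as 1 / (factor by which wavelengths are stretched).


def cooled_temperature(t_initial_kelvin: float, stretch_factor: float) -> float:
    """Return the temperature after wavelengths are stretched by stretch_factor."""
    if stretch_factor <= 0:
        raise ValueError("stretch_factor must be positive")
    return t_initial_kelvin / stretch_factor


# Illustrative stand-in for "a few thousand Kelvin" (an assumption, not a measured value).
T_THEN_KELVIN = 3000.0

for factor in (100, 1000, 1100, 3000):
    t_now = cooled_temperature(T_THEN_KELVIN, factor)
    print(f"stretched by {factor:>4}x -> {t_now:.2f} K")
```

Under these assumptions, only a stretch of roughly a thousand brings a few thousand Kelvin down to a few degrees above absolute zero.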

Remember that light, as we know it, is much more than just the visible portion our eyes are sensitive to. Light comes in a variety of wavelengths, frequencies, and energies, and an expanding Universe doesn’t destroy light; it simply moves it to longer wavelengths. What was ultraviolet, visible and infrared light billions of years ago becomes microwave and radio light as the fabric of space stretches.

It wasn’t until the 1960s that a team of scientists sought to actually detect and measure the properties of this theoretical radiation. Over at Princeton, Bob Dicke, Jim Peebles (who won 2019’s Nobel Prize), David Wilkinson and Peter Roll planned to build and fly a radiometer capable of searching for this radiation, with the intent of confirming or refuting this hitherto untested prediction of the Big Bang.

But they never got the chance. Just 30 miles away, two scientists, Arno Penzias and Robert Wilson, were making use of a new piece of equipment (a giant, ultra-sensitive, horn-shaped radio antenna) and were repeatedly failing to calibrate it. While signals emerged from the Sun and the galactic plane, there was an omnidirectional noise they simply couldn’t get rid of. It was cold (~3 K), it was everywhere, and it wasn’t a calibration error. After communicating with the Princeton team, they realized what it was: the leftover glow from the Big Bang.

Subsequently, scientists went on to measure the entirety of the radiation associated with this cosmic microwave background signal and determined that it did, indeed, match the predictions of the Big Bang. In particular, it did follow a blackbody distribution, it had a temperature of 2.725 K, it extended into both the microwave and radio portions of the spectrum, and it is uniform in every direction to better than 99.99%.
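For a sense of where a 2.725 K blackbody is brightest, the short Python sketch below applies Wien’s displacement law. The law and its constants are standard physics rather than anything stated in the text, and the function names are hypothetical.

```python
# Sketch: where a blackbody at the CMB temperature peaks, via Wien's displacement law.

WIEN_WAVELENGTH_CONSTANT = 2.897771955e-3  # metre * kelvin
WIEN_FREQUENCY_CONSTANT = 5.878925757e10   # hertz / kelvin
T_CMB_KELVIN = 2.725                       # temperature quoted in the text


def peak_wavelength_m(temperature_kelvin: float) -> float:
    """Wavelength at which the per-wavelength blackbody spectrum peaks."""
    return WIEN_WAVELENGTH_CONSTANT / temperature_kelvin


def peak_frequency_hz(temperature_kelvin: float) -> float:
    """Frequency at which the per-frequency blackbody spectrum peaks."""
    return WIEN_FREQUENCY_CONSTANT * temperature_kelvin


print(f"peak wavelength: {peak_wavelength_m(T_CMB_KELVIN) * 1e3:.2f} mm")
print(f"peak frequency:  {peak_frequency_hz(T_CMB_KELVIN) / 1e9:.1f} GHz")
```

This gives a peak wavelength of roughly 1.06 mm and a peak frequency of about 160 GHz, both in the microwave band. The two peaks are not related by the speed of light divided by wavelength, because the spectrum is binned differently per unit wavelength and per unit frequency. The long, faint tail of the same spectrum reaches down into radio frequencies.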

If we take a modern view of things, we know now that the cosmic microwave background radiation — the radiation that confirmed the Big Bang and caused us to reject all the alternatives — could have been detected in any of a whole slew of wavelength bands, if only the signals had been collected and analyzed with a view toward identifying it.

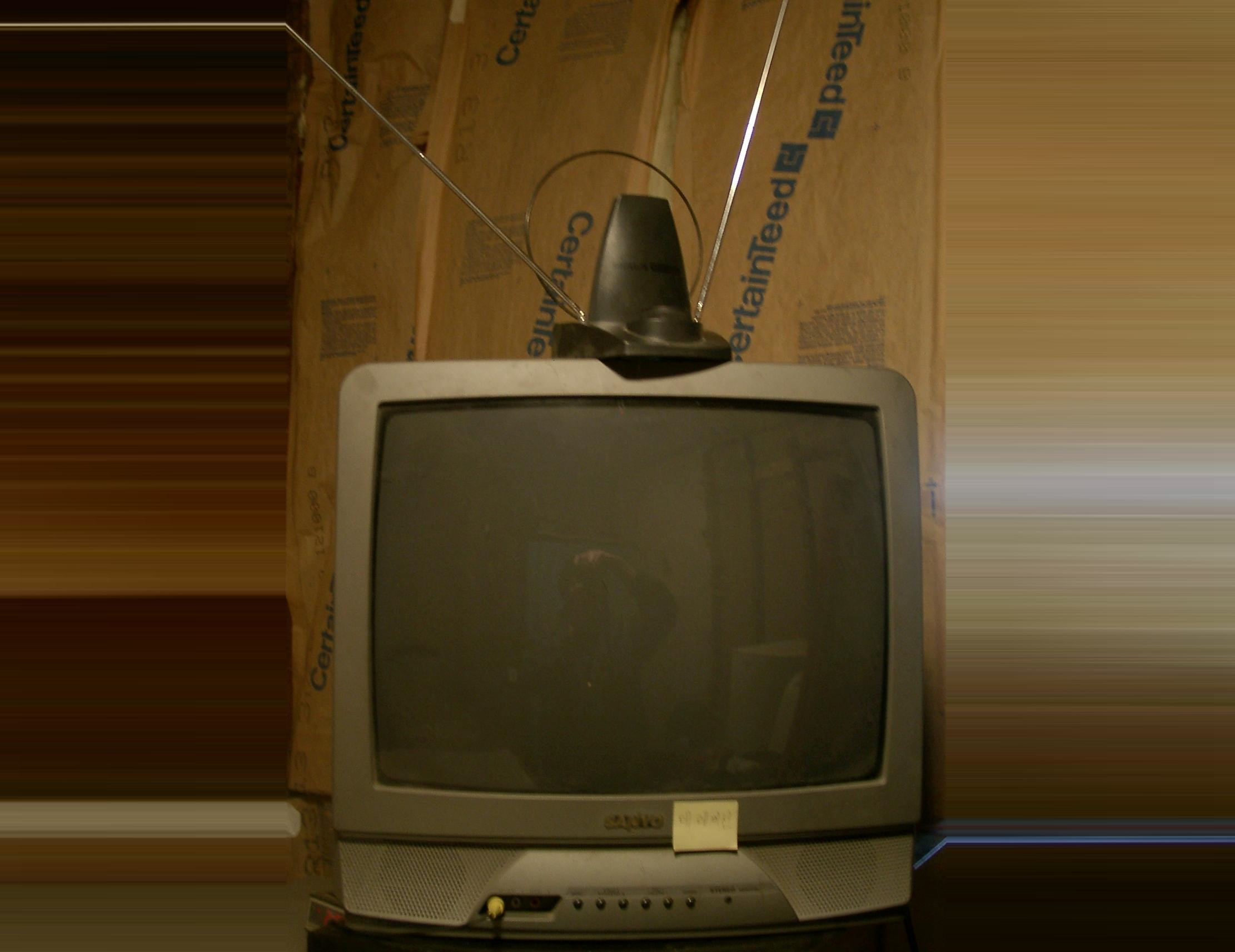

Remarkably, a simple but ubiquitous device began showing up in households all over the world, particularly in the United States and Great Britain, in the years immediately following World War II: the television set.

The way a television works is relatively simple. A powerful electromagnetic wave is transmitted by a tower, where it can be received by a properly sized antenna oriented in the correct direction. That wave has additional signals superimposed atop it, corresponding to audio and visual information that had been encoded. By receiving that information and translating it into the proper format (speakers for producing sound and cathode rays for producing light), we were able to receive and enjoy broadcast programming right in the comfort of our own homes for the first time. Different channels broadcast at different wavelengths, giving viewers multiple options simply by turning a dial.

Unless, that is, you turned the dial to channel 03.

Channel 03 was — and if you can dig up an old television set, still is — simply a signal that appears to us as “static” or “snow.” That “snow” you see on your television comes from a combination of all sorts of sources:

- thermal noise of the television set and its surrounding environment,

- human-made radio transmissions,

- the Sun,

- black holes,

- and all sorts of other directional astrophysical phenomena like pulsars, cosmic rays and more.

But if you were able to either block all of those other signals out, or simply take them into account and subtract them out, a signal would still remain. It would only be about 1% of the total “snow” signal that you see, but there would be no way of removing it. When you watch channel 03, 1% of what you’re watching comes from the Big Bang’s leftover glow. You are literally watching the cosmic microwave background.
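The text does not say where the 1% figure comes from. One plausible back-of-envelope route is sketched below in Python. It assumes that, at television frequencies, noise power is proportional to the temperature of its source, and it lumps every other contribution into a single effective temperature near room temperature. Both that 290 K value and the function name are illustrative assumptions, not numbers from the text.

```python
# Sketch: rough share of TV "snow" that could be attributed to the CMB.
# Assumption: noise power is proportional to source temperature at these frequencies.

T_CMB_KELVIN = 2.725     # temperature quoted in the text
T_OTHER_KELVIN = 290.0   # assumed: all other noise lumped together near room temperature


def cmb_fraction(t_cmb_kelvin: float, t_other_kelvin: float) -> float:
    """Fraction of total noise power contributed by the CMB under the stated assumptions."""
    return t_cmb_kelvin / (t_cmb_kelvin + t_other_kelvin)


print(f"CMB share of the static: {cmb_fraction(T_CMB_KELVIN, T_OTHER_KELVIN):.2%}")
```

With these inputs the share comes out a little under 1%, consistent with the figure quoted above. A real receiver’s noise budget depends on its electronics and surroundings, so this is an order-of-magnitude check only.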

If you wanted to perform the ultimate experiment imaginable, you could power a rabbit-ear-style television set on the far side of the Moon, where it would be shielded from 100% of Earth’s radio signals. Additionally, for the half of the time the Moon experienced night, it would be shielded from the full complement of the Sun’s radiation as well. When you turned that television on and set it to channel 03, you’d still see a snow-like signal that simply wouldn’t quit, even in the absence of any transmitted signals.

This small amount of static cannot be gotten rid of. It will not change in magnitude or signal character as you change the antenna’s orientation. The reason is absolutely remarkable: it’s because that signal is coming from the cosmic microwave background itself. Simply by extracting the various sources responsible for the static and measuring what’s left, anyone from the 1940s onward could have detected the cosmic microwave background at home, proving the Big Bang decades before scientists did.

In a world where experts tell you over and over “Don’t try this at home,” this is one lost technology we shouldn’t forget. In the fascinating words of Virginia Trimble, “Pay attention. Someday, you’ll be the last one who remembers.”