Cosmology’s biggest conundrum is official, and no one knows how the Universe has expanded

- There are two fundamentally different ways of measuring the expanding Universe: a “distance ladder” and an “early relic” method.

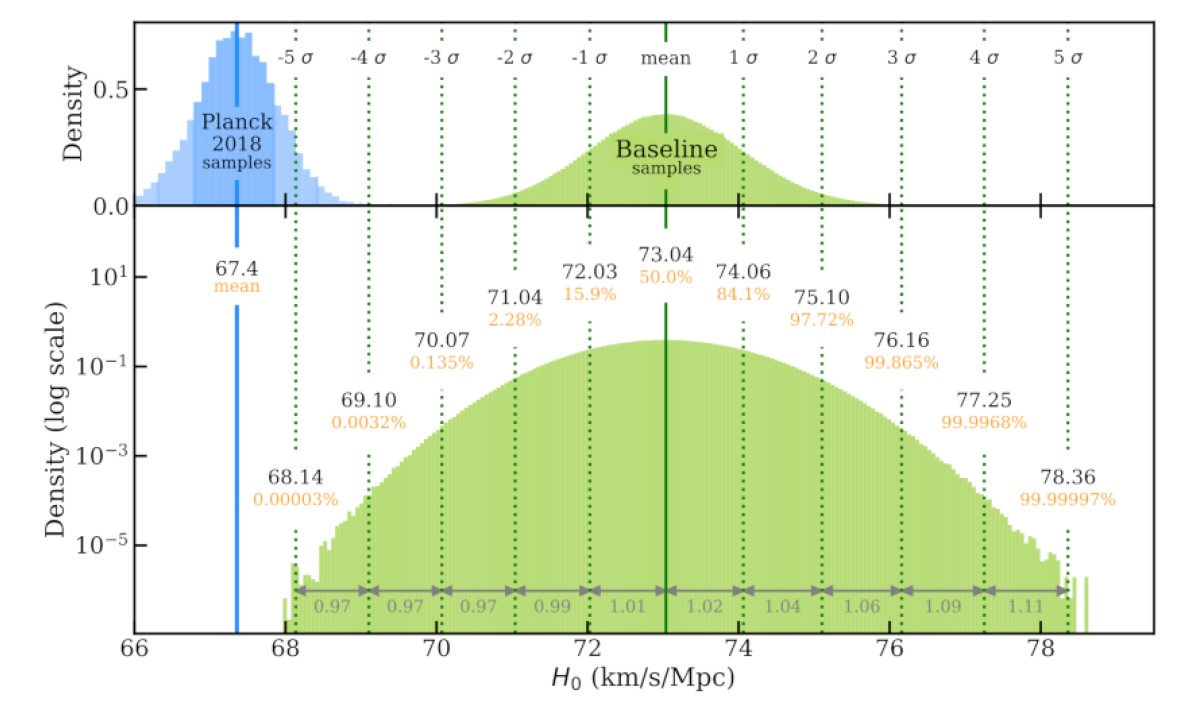

- The early relic method prefers an expansion rate of ~67 km/s/Mpc, while the distance ladder prefers a value of ~73 km/s/Mpc — a discrepancy of 9%.

- Owing to Herculean efforts by the distance ladder teams, their uncertainties are now so low that there is a 5-sigma discrepancy between the values. If the discrepancy isn’t due to an error, there may be a new discovery.

Do we really understand what’s going on in the Universe? If we did, then the method we used to measure it wouldn’t matter, because we’d get identical results regardless of how we obtained them. If we use two different methods to measure the same thing, however, and we get two different results, you’d expect that one of three things was happening:

- Perhaps we’ve made an error, or a series of errors, in using one of the methods, and it has therefore given us an erroneous result. The other one, then, is correct.

- Perhaps we’ve made an error in the theoretical work that underlies one or more of the methods: even though the data themselves are entirely solid, we’re reaching the wrong conclusions because we’ve calculated something improperly.

- Perhaps no one has made an error, and all the calculations were done correctly, and the reason we’re not getting the same answer is because we’ve made an incorrect assumption about the Universe: that we’ve gotten the laws of physics correct, for example.

Of course, anomalies come along all the time. That’s why we demand multiple, independent measurements, different lines of evidence that support the same conclusion, and incredible statistical robustness, before jumping the gun. In physics, that robustness needs to reach a significance of 5-σ, or less than a 1-in-a-million chance of being a fluke.

Well, when it comes to the expanding Universe, we’ve just crossed that critical threshold, and a longstanding controversy now forces us to reckon with this uncomfortable fact: different methods of measuring the expanding Universe lead to different, incompatible results. Somewhere out there in the cosmos, the solution to this mystery awaits.

If you want to measure how fast the Universe is expanding, there are two basic ways to go about it. They both rely on the same underlying relationship: If you know what’s actually present in the Universe in terms of matter and energy, and you can measure how quickly the Universe is expanding at any moment in time, you can calculate what the Universe’s expansion rate was or will be at any other time. The physics behind that is rock solid, having been worked out in the context of general relativity way back in 1922 by Alexander Friedmann. Nearly a century later, it’s such a cornerstone of modern cosmology that the two equations that govern the expanding Universe are simply known as the Friedmann equations, and he’s the first name in the Friedmann-Lemaître-Robertson-Walker (FLRW) metric: the spacetime that describes our expanding Universe.
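The Friedmann relationship can be made concrete with a short sketch. The Python below is a minimal illustration, not anyone’s analysis code: it assumes a Universe containing only radiation, matter, a cosmological constant, and spatial curvature, and the matter fraction `omega_m = 0.31` is an assumed, illustrative value rather than one quoted in this article. Given today’s rate H0 and those contents, it returns the expansion rate H at any redshift z.

```python
import math

def hubble_rate(z, h0, omega_m, omega_r=0.0, omega_lambda=None):
    """Expansion rate H(z) in km/s/Mpc from the first Friedmann equation:

        H(z)^2 = H0^2 * [Or(1+z)^4 + Om(1+z)^3 + Ok(1+z)^2 + OL]
    """
    if omega_lambda is None:
        # Assumption: a spatially flat Universe, so the fractions sum to 1.
        omega_lambda = 1.0 - omega_m - omega_r
    # Curvature takes up whatever the other components leave over.
    omega_k = 1.0 - omega_m - omega_r - omega_lambda
    one_plus_z = 1.0 + z  # equals 1/a, the inverse of the scale factor
    e_squared = (omega_r * one_plus_z**4 + omega_m * one_plus_z**3
                 + omega_k * one_plus_z**2 + omega_lambda)
    return h0 * math.sqrt(e_squared)

# Same (assumed) contents, two different present-day rates.
for z in (0.0, 0.5, 1.0, 2.0):
    print(z, round(hubble_rate(z, 67.0, 0.31), 1), round(hubble_rate(z, 73.0, 0.31), 1))
```

Because H0 multiplies the whole expression, two measurements that agree on the contents but disagree on H0 disagree about the expansion rate at every epoch, by the same ratio.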

With that in mind, the two methods of measuring the expanding Universe are either:

- The early relic method — You take some cosmic signal that was created at a very early time, you observe it today, and based on how the Universe has cumulatively expanded (through its effect on the light traveling through the expanding Universe), you infer what the Universe is made of.

- The distance ladder method — You try to measure the distances to objects directly along with the effects the expanding Universe has had on the emitted light, and infer how quickly the Universe has expanded from that.

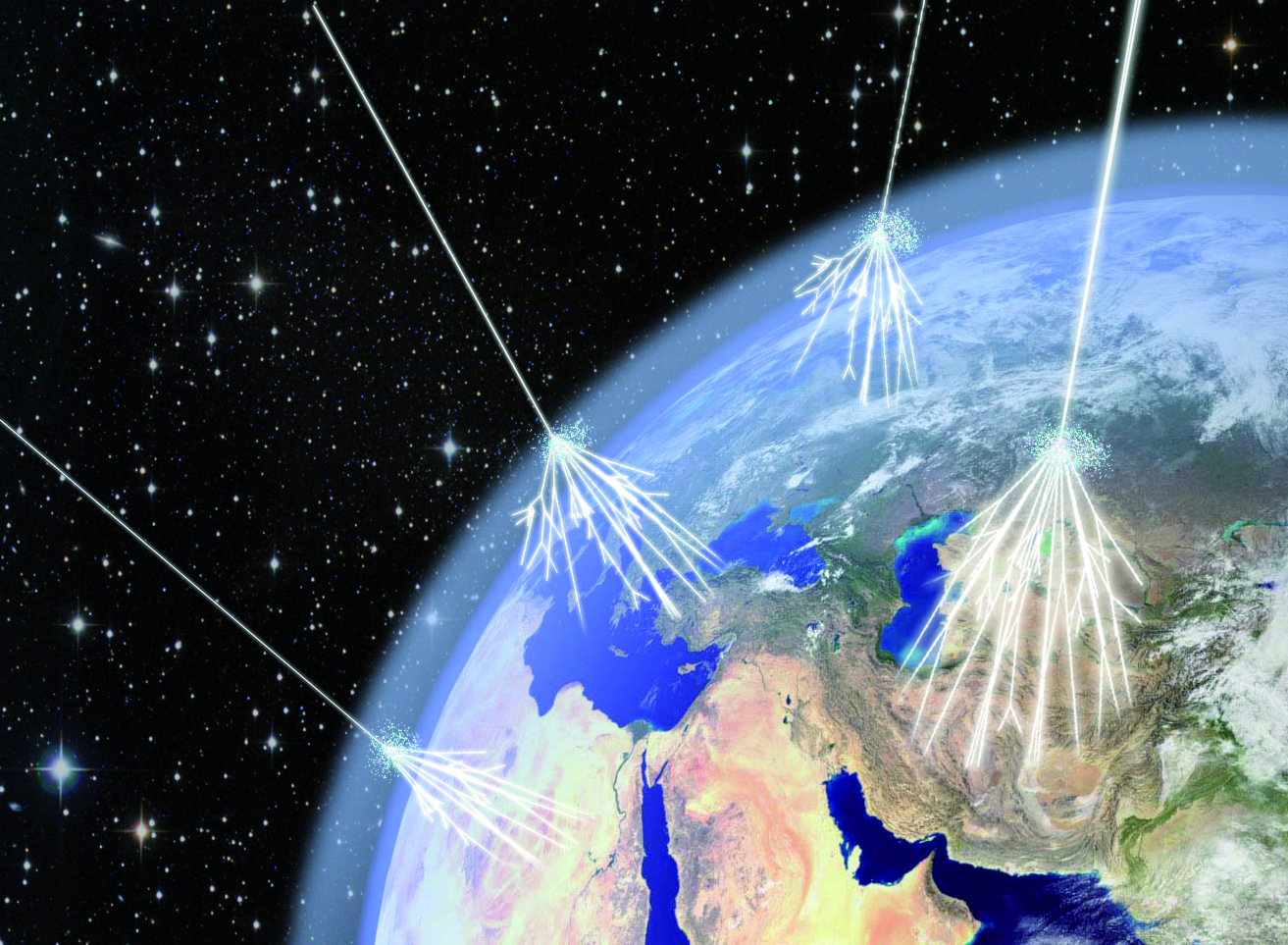

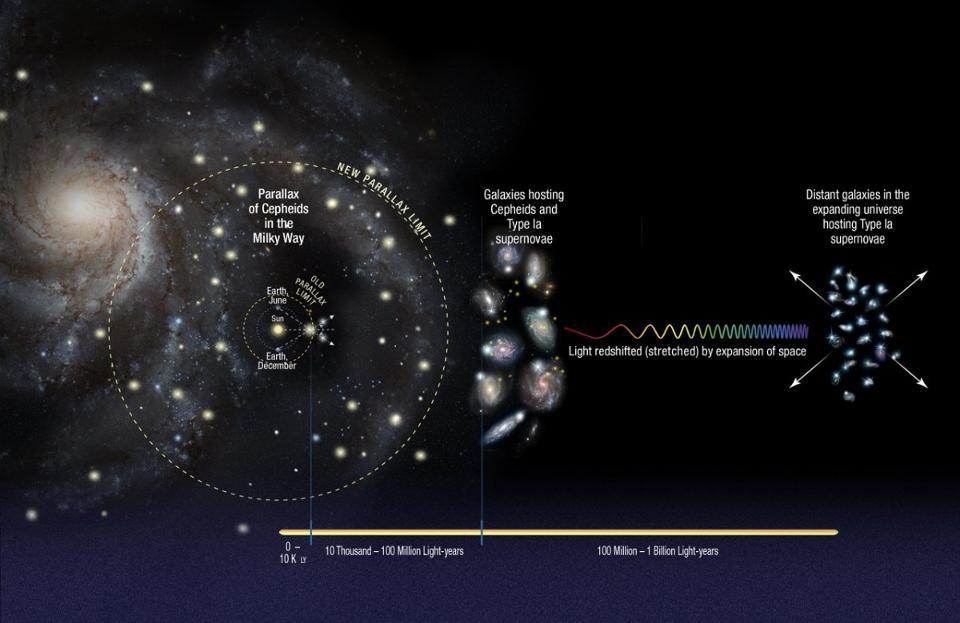

Credit: NASA/JPL-Caltech

Neither of these is really a single method in and of itself; rather, each describes a set of methods: an approach to determining the expansion rate of the Universe. Each one contains multiple methods within it. What I call the “early relic” method includes using the light from the cosmic microwave background, leveraging the growth of large-scale structure in the Universe (including through the imprint of baryon acoustic oscillations), and using the abundances of the light elements left over from the Big Bang.

Basically, you take something that occurred early on in the history of the Universe, where the physics is well known, and measure the signals where that information is encoded in the present. From these sets of methods, we infer an expansion rate, today, of ~67 km/s/Mpc, with an uncertainty of about 0.7%.

Meanwhile, the second set of methods, the cosmic distance ladder, gives us an enormous number of different classes of objects that we can measure, determine the distances to, and use to infer the expansion rate.

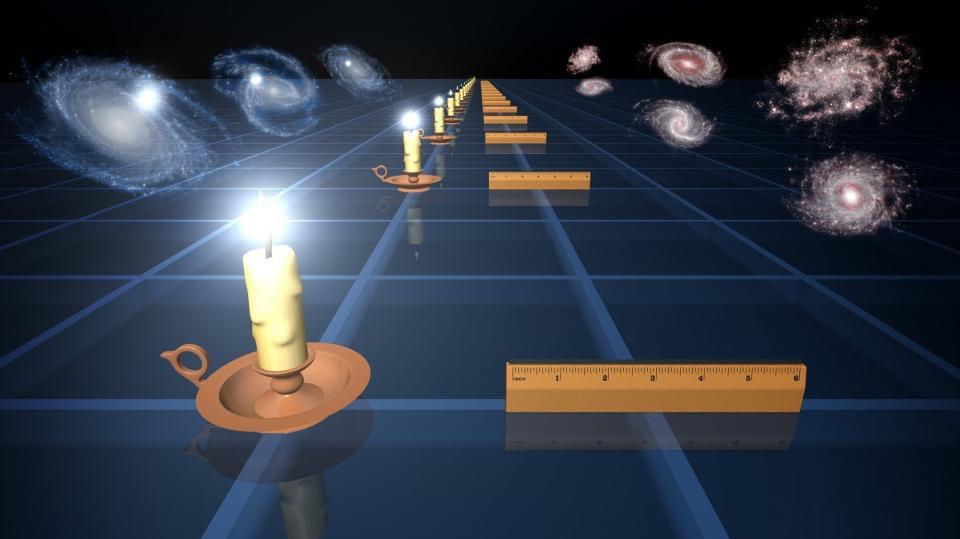

Credit: NASA, ESA, A. Feild (STScI), and A. Riess (JHU)

For the nearest objects, we can measure individual stars and stellar systems, such as Cepheids, RR Lyrae stars, stars at the tip of the red giant branch, and detached eclipsing binaries, as well as masers. At greater distances, we look to galaxies that contain one of these classes of objects and also have a brighter signal, like surface brightness fluctuations, the Tully-Fisher relation, or a type Ia supernova; we then go even farther out, measuring that brighter signal to great cosmic distances. By stitching these rungs together, we can reconstruct the expansion history of the Universe.
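The rung-by-rung logic can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the SH0ES pipeline: the calibrator numbers are made up, the Hubble-flow supernovae are synthetic ones generated for an assumed H0 of 73 km/s/Mpc, every supernova is treated as a perfect standard candle, and the low-redshift approximation v ≈ cz is used with no peculiar velocities. All helper names are hypothetical.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s

def distance_modulus_to_mpc(mu):
    """Invert mu = 5*log10(d / 10 pc), i.e. mu = 5*log10(d_Mpc) + 25."""
    return 10 ** ((mu - 25.0) / 5.0)

def calibrate_sn_absolute_mag(calibrators):
    """Rung 2: hosts with a stellar distance modulus mu (rung 1, e.g. Cepheids) AND a
    type Ia supernova of apparent peak magnitude m give the SN absolute magnitude M = m - mu."""
    return sum(m - mu for m, mu in calibrators) / len(calibrators)

def estimate_h0(hubble_flow, m_abs):
    """Rung 3: distant supernovae (m, z); distance from m - M, velocity from v = c*z."""
    estimates = [C_KM_S * z / distance_modulus_to_mpc(m - m_abs) for m, z in hubble_flow]
    return sum(estimates) / len(estimates)

def synthetic_sn(z, true_h0=73.0, true_m_abs=-19.25):
    """Hypothetical helper: a perfect standard candle at redshift z for an assumed H0."""
    d_mpc = C_KM_S * z / true_h0
    return (true_m_abs + 5.0 * math.log10(d_mpc) + 25.0, z)

# Made-up calibrator hosts: (SN apparent peak magnitude, stellar-based distance modulus).
calibrators = [(12.25, 31.50), (13.05, 32.30), (11.85, 31.10)]
m_abs = calibrate_sn_absolute_mag(calibrators)

hubble_flow = [synthetic_sn(z) for z in (0.02, 0.03, 0.05)]
print(f"M = {m_abs:.2f}, H0 = {estimate_h0(hubble_flow, m_abs):.1f} km/s/Mpc")
```

With these synthetic inputs the sketch simply recovers the H0 it was built with; the point is the structure. Any error in the calibrated absolute magnitude `m_abs` propagates into every supernova distance, and therefore directly into H0.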

And yet, that second set of methods yields a consistent but very, very different set of values from the first. Instead of ~67 km/s/Mpc, with an uncertainty of 0.7%, it has consistently yielded values between 72 and 74 km/s/Mpc. These values date all the way back to 2001, when the results of the Hubble Space Telescope’s Key Project were published. The initial value, ~72 km/s/Mpc, had an uncertainty of about 10% when it was first published, and that itself was a revolution for cosmology. Values had previously ranged from about 50 km/s/Mpc to 100 km/s/Mpc, and resolving that controversy was one of the Hubble Space Telescope’s central goals: the telescope and “the Hubble constant,” the expansion rate of the Universe, both honor Edwin Hubble.

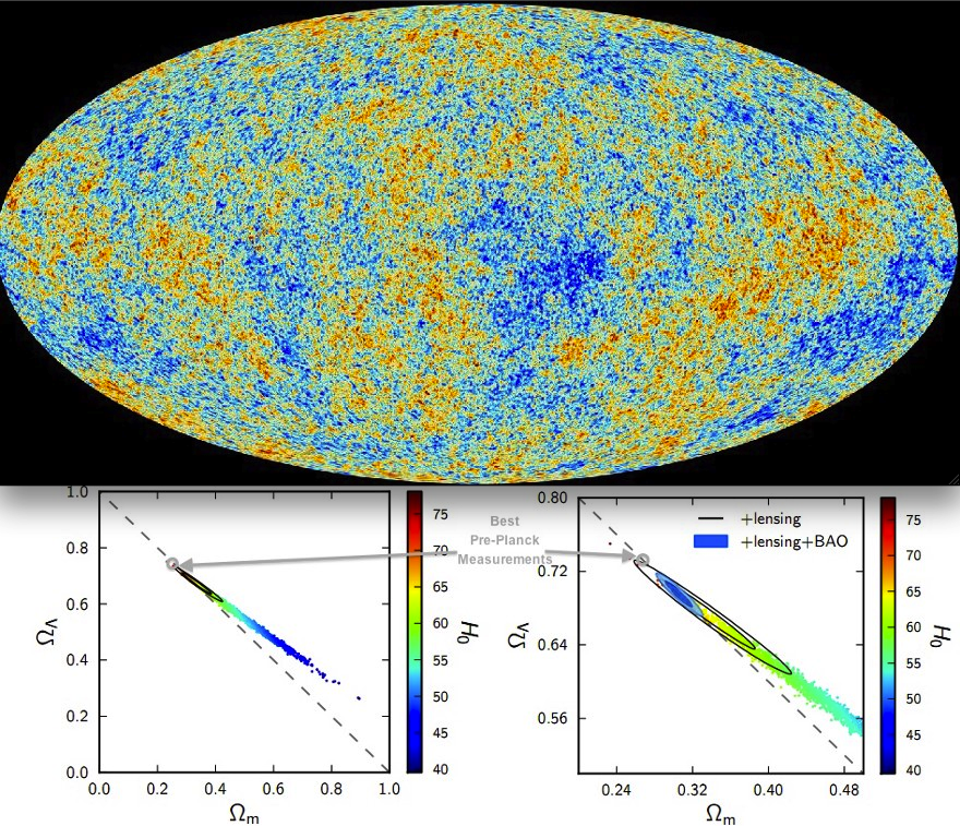

Credit: ESA & the Planck Collaboration: P.A.R. Ade et al., A&A, 2014

When the Planck satellite finished returning all of its data, many assumed that it would have the final say on the matter. With nine different frequency bands, all-sky coverage, the capability to measure the polarization of the light as well as its intensity, and unprecedented resolution down to ~0.05°, it would provide the tightest constraints of all time. The value it provided, of ~67 km/s/Mpc, has been the gold standard ever since. In particular, its uncertainties were so small, leaving so little wiggle room, that most people assumed the distance ladder teams would discover previously unknown errors or systematic shifts, and that the two sets of methods would someday align.

But that’s why we do the science, rather than merely assume we know what the answer “needs to be” in advance. Over the past 20 years, a number of new methods have been developed to measure the expansion rate of the Universe, including methods that take us beyond the traditional distance ladder: standard sirens from merging neutron stars and strong lensing delays from lensed supernovae that give us the same cosmic explosion on repeat. As we’ve studied the various objects we use to make the distance ladder, we’ve slowly but steadily been able to reduce the uncertainties, all while building up greater statistical samples.

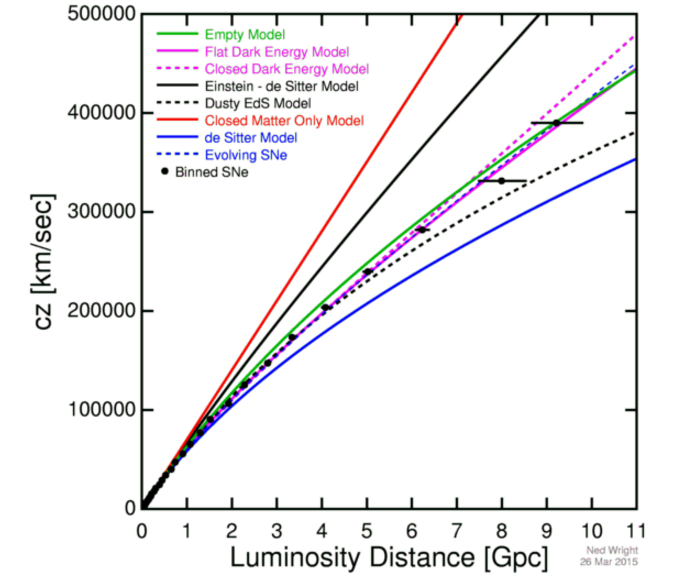

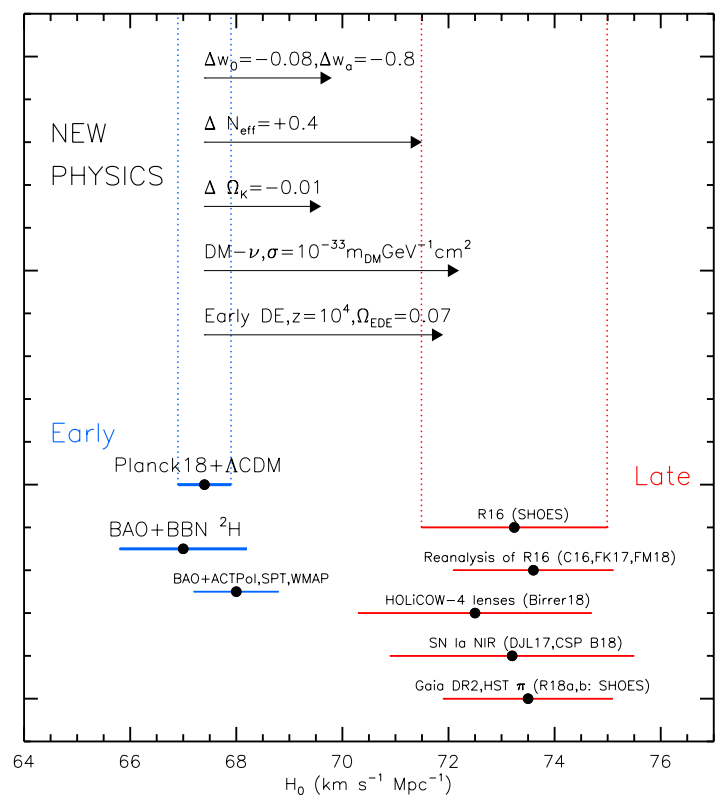

Credit: A.G. Riess, Nat Rev Phys, 2020

As the errors went down, the central values stubbornly refused to change. They remained between 72 and 74 km/s/Mpc throughout. The idea that the two methods would someday reconcile with one another seemed progressively farther away, as new method after new method continued to reveal the same mismatch. While theorists were more than happy to come up with potentially exotic solutions to the puzzle, a good solution became harder and harder to find. Either some fundamental assumptions about our cosmological picture were incorrect, we lived in a puzzlingly unlikely, underdense region of space, or a series of systematic errors — none of them large enough to account for the discrepancy on their own — were all conspiring to shift the distance ladder set of methods to higher values.

A few years ago, I, too, was one of the cosmologists who assumed that the answer would lie somewhere in a yet-unidentified error. I assumed that the measurements from Planck, bolstered by the large-scale structure data, were so good that everything else must fall into place in order to paint a consistent cosmic picture.

With the latest results, however, that’s no longer the case. A combination of many avenues of recent research has reduced the uncertainties in various distance ladder measurements precipitously.

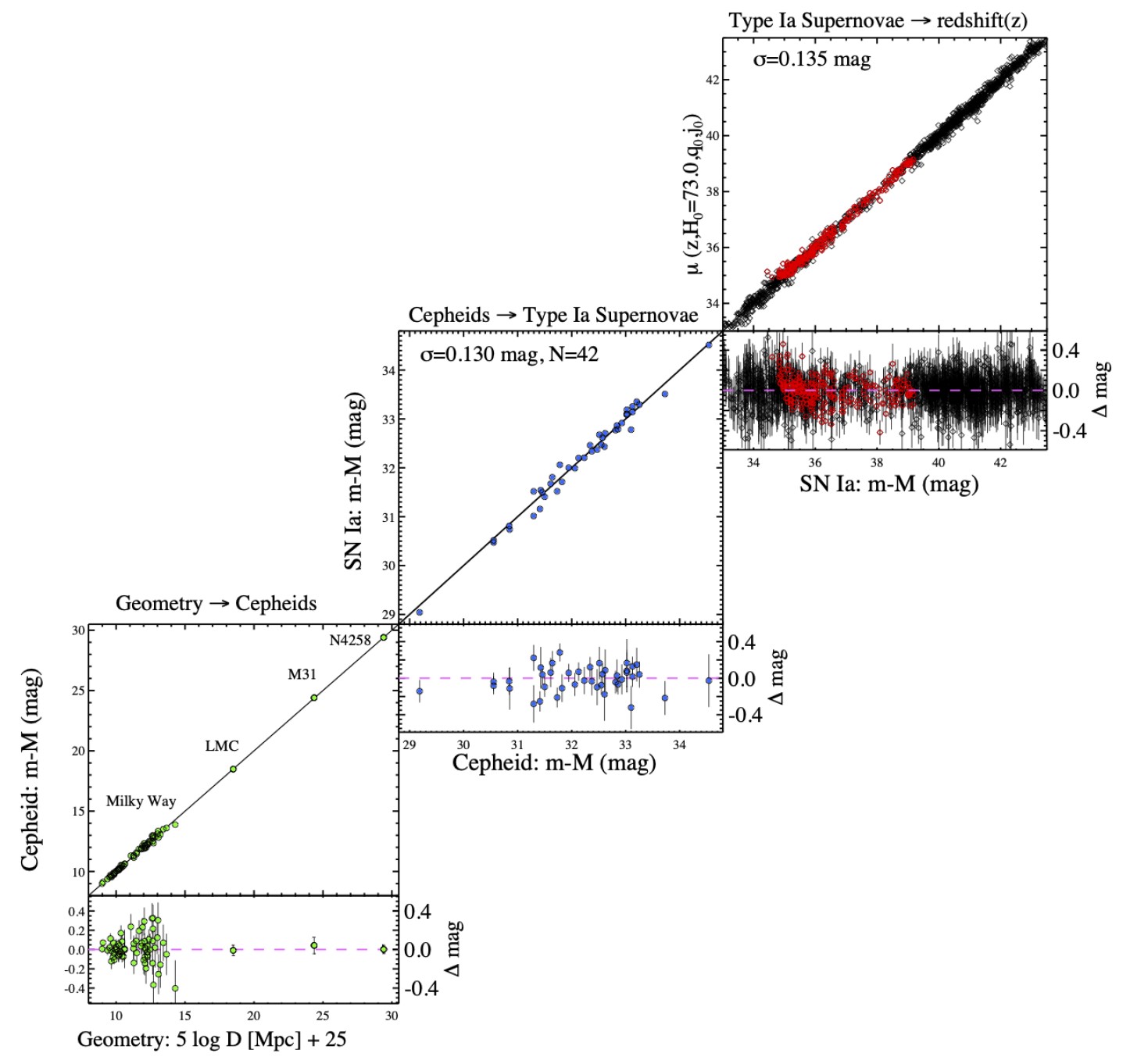

Credit: A.G. Riess et al., ApJ, 2022

This includes research such as:

- improving the distance calibration to the Large Magellanic Cloud, the largest satellite galaxy of the Milky Way

- a large increase in the total number of type Ia supernovae: to more than 1700, at present

- improvement in the calibrations of supernova light-curves

- accounting for the effects of peculiar velocities, which are superimposed atop the overall expansion of the Universe

- improvements in the measured/inferred redshifts of the supernovae used in the cosmic analysis

- improvements in dust/color modeling and other aspects of supernova surveys
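Why peculiar velocities matter most for nearby objects can be seen with simple arithmetic. The sketch below assumes a “true” H0 of 73 km/s/Mpc and a single peculiar velocity of 300 km/s, both illustrative values chosen for this example, and shows the H0 you would infer from one galaxy if you ignored that extra motion.

```python
def apparent_h0(true_h0, distance_mpc, peculiar_velocity_km_s):
    """H0 inferred from a single galaxy if its peculiar velocity is ignored.

    The observed recession velocity is the Hubble flow plus the galaxy's own motion.
    """
    observed_velocity = true_h0 * distance_mpc + peculiar_velocity_km_s
    return observed_velocity / distance_mpc

# Assumed illustrative values: true H0 = 73 km/s/Mpc, peculiar velocity = 300 km/s.
for d in (10.0, 50.0, 200.0):
    print(f"d = {d:5.0f} Mpc -> inferred H0 = {apparent_h0(73.0, d, 300.0):.1f} km/s/Mpc")
```

The same 300 km/s distorts the single-galaxy H0 by 30 km/s/Mpc at 10 Mpc but by only 1.5 km/s/Mpc at 200 Mpc, because the error scales as one over the distance.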

Whenever there’s a chain of events in your data pipeline, it makes sense to look for the weakest link. But with the current state of affairs, even the weakest links in the cosmic distance ladder are now incredibly strong.

It was only a little less than three years ago that I thought I had identified a particularly weak link: there were only 19 galaxies known to possess both robust distance measurements, from the identification of individual stars within them, and type Ia supernovae. If even one of those galaxies had its distance mismeasured by a factor of 2, it could have shifted the entire estimate of the expansion rate by something like 5%. Since the discrepancy between the two sets of measurements was about 9%, this seemed like a critical point to poke at, and it could have resolved the tension entirely.
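The size of that lever arm can be estimated with a back-of-the-envelope calculation. The sketch below makes a strong simplifying assumption (that all calibrator hosts carry equal weight, whereas the real analysis uses a weighted, simultaneous fit), so it gives only an order-of-magnitude answer. A factor-of-2 distance error changes a host’s distance modulus by 5 log10 2 ≈ 1.5 magnitudes, which is then diluted across the calibrator sample.

```python
import math

def h0_ratio_with_one_bad_calibrator(n_calibrators, distance_factor):
    """Ratio H0_inferred / H0_true when ONE of n equally weighted calibrator hosts
    has its distance overestimated by `distance_factor` (2.0 = twice too far)."""
    # Too-large distance -> that host's distance modulus is too large by 5*log10(f).
    delta_mu = 5.0 * math.log10(distance_factor)
    # That host's SN then looks too bright by delta_mu; averaged over n hosts,
    # the calibrated absolute magnitude is too bright by delta_mu / n.
    delta_m_abs = delta_mu / n_calibrators
    # Every Hubble-flow SN is then placed farther away by 10**(0.2*delta_m_abs),
    # so the inferred H0 drops by that same factor.
    return 10 ** (-0.2 * delta_m_abs)

for n in (19, 42):
    shift = 1.0 - h0_ratio_with_one_bad_calibrator(n, 2.0)
    print(f"{n} calibrators: H0 shifts by about {100 * shift:.1f}%")
```

Under equal weighting, one factor-of-2 error among 19 hosts moves H0 by roughly 3.6%, the same few-percent scale as the estimate above, while the same error among 42 hosts moves it by only about 1.6%. An underestimated distance (`distance_factor=0.5`) pushes H0 up by a similar amount instead.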

Credit: A.G. Riess et al., ApJ, 2022

In what’s sure to be a landmark paper upon its publication in early 2022, we now know that this cannot be the reason the two methods yield such different results. In a tremendous leap, we now have type Ia supernovae in 42 nearby galaxies, all of which have extremely precisely determined distances owing to a variety of measurement techniques. With more than double the previous number of nearby supernova hosts, we can safely conclude that this wasn’t the source of error we were hoping for. What’s more, 35 of those galaxies have beautiful Hubble imagery available, and the “wiggle room” from this rung of the cosmic distance ladder leads to an uncertainty of less than 1 km/s/Mpc.

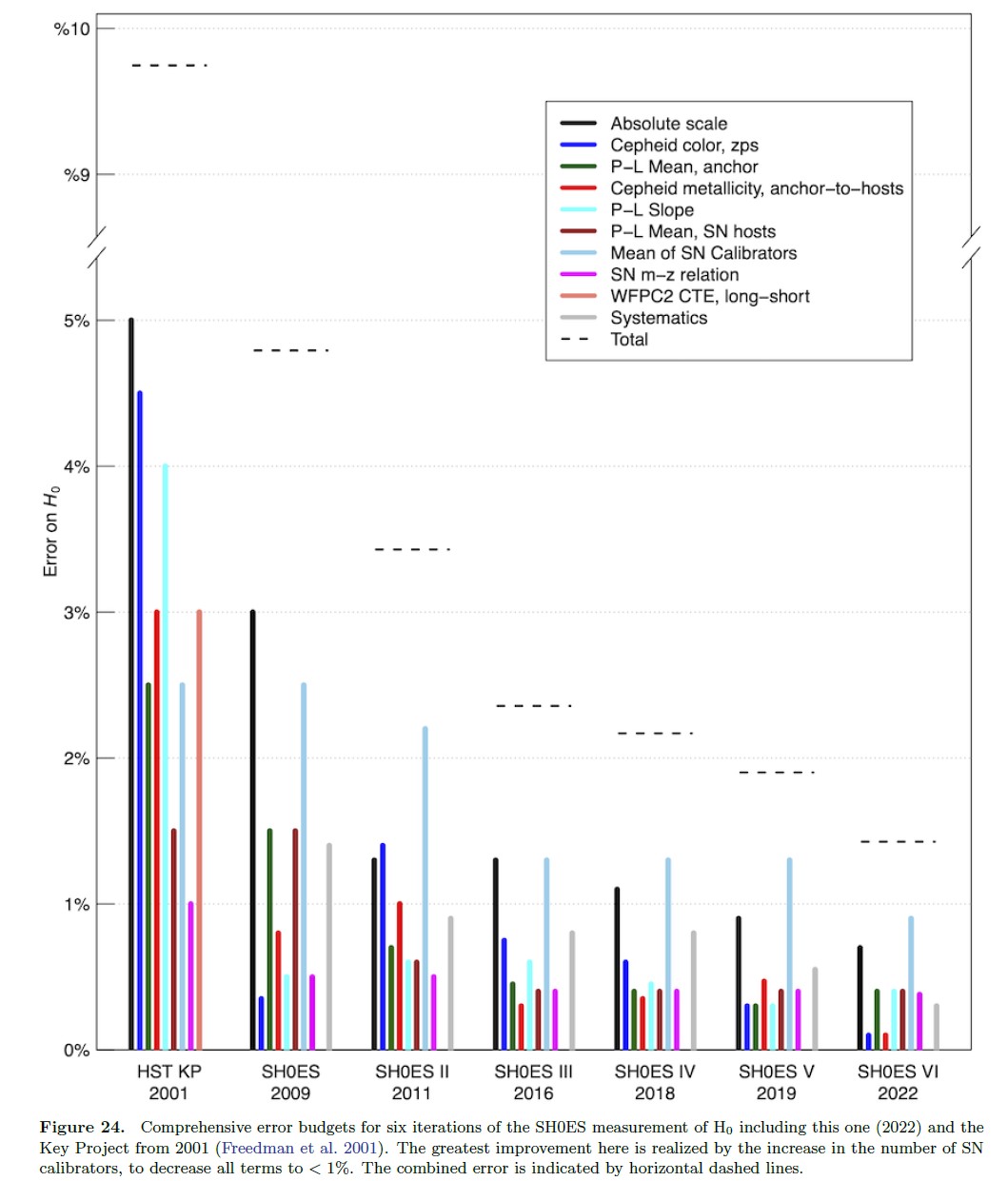

In fact, that’s the case for every potential source of error that we’ve been able to identify. Whereas there were nine separate sources of uncertainty that could have shifted the value of the expansion rate today by 1% or more back in 2001, there are none today. The largest source of error could only shift the average value by less than one percent, and that achievement is largely on account of the large increase in the number of supernova calibrators. Even if we combine all sources of error, as indicated by the horizontal, dashed line in the figure below, you can see that there is no way to reach, or even approach, that 9% discrepancy that exists between the “early relic” method and the “distance ladder” method.
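Combining independent sources of error is usually done “in quadrature”: square each fractional uncertainty, sum the squares, and take the square root. The budget below is entirely hypothetical (six made-up terms, each under 1%), chosen only to mirror the situation described above; it is not the published error budget. The sketch also checks the pessimistic case of adding every term linearly, as if all errors conspired in one direction, against the ~9% gap.

```python
import math

def combine_in_quadrature(fractional_errors):
    """Total fractional uncertainty from independent error sources."""
    return math.sqrt(sum(e * e for e in fractional_errors))

# Entirely hypothetical budget: six independent terms, each below 1%.
budget = [0.009, 0.007, 0.005, 0.004, 0.003, 0.002]
discrepancy = 0.09  # the ~9% gap between the two sets of methods

total = combine_in_quadrature(budget)
worst_case = sum(budget)  # every error pushing the same way at full size
print(f"quadrature: {100 * total:.1f}%, linear worst case: {100 * worst_case:.1f}%")
print("can reach the 9% gap?", worst_case >= discrepancy)
```

For these invented numbers, the quadrature total is about 1.4% and the fully conspiratorial linear sum is 3%, both well short of 9%.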

Credit: A.G. Riess et al., ApJ, 2022

The reason we use 5-σ as the gold standard in physics and astronomy comes down to what a “σ” means. It’s shorthand for standard deviation, which quantifies how likely or unlikely it is that the “true value” of a measured quantity lies within a certain range of the measured value.

- There’s a 68% chance that the true value is within 1-σ of your measured value.

- There’s a 95% chance that the true value is within 2-σ of the measured value.

- 3-σ gets you 99.7% confidence.

- 4-σ gets you 99.99% confidence.

But if you get all the way to 5-σ, there’s only around a 1-in-3.5 million chance that a statistical fluke alone would produce a deviation that large. Only if you can cross that threshold will we have made a “discovery.” We waited until 5-σ was reached before we announced the discovery of the Higgs boson; many other physics anomalies have shown up with, say, a 3-σ significance, but they will be required to cross that gold-standard threshold of 5-σ before they cause us to reevaluate our theories of the Universe.
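The percentages above follow from the Gaussian (normal) distribution and can be reproduced with Python’s standard library. This sketch assumes Gaussian errors; `two_sided_coverage` gives the probability of landing within ±nσ of the mean, and `one_sided_tail` gives the chance of a fluctuation at least nσ in one particular direction.

```python
import math

def two_sided_coverage(n_sigma):
    """Probability that a Gaussian draw lands within +/- n_sigma of the mean."""
    return math.erf(n_sigma / math.sqrt(2.0))

def one_sided_tail(n_sigma):
    """Probability of a fluctuation at least n_sigma above the mean."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))

for n in range(1, 6):
    print(f"{n} sigma: {100 * two_sided_coverage(n):.5f}% within, "
          f"one-sided tail 1 in {1 / one_sided_tail(n):,.0f}")
```

The one-sided 5-σ tail is about 1 in 3.5 million, the figure quoted above; counting fluctuations in either direction doubles the probability to roughly 1 in 1.7 million, still comfortably below one in a million.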

However, with the latest publication, the 5-σ threshold for this latest cosmic conundrum over the expanding Universe has now been crossed. It’s now time, if you haven’t been doing so already, to take this cosmic mismatch seriously.
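How a discrepancy gets its “σ” can be sketched as follows, assuming two independent measurements with Gaussian uncertainties: divide the difference between the central values by the two uncertainties added in quadrature. The inputs are illustrative round numbers rather than the exact published values: 67.4 ± 0.5 km/s/Mpc for the early relic side (consistent with the ~0.7% uncertainty quoted earlier) and an assumed 73.0 ± 1.0 km/s/Mpc for the distance ladder.

```python
import math

def tension_in_sigma(value_a, err_a, value_b, err_b):
    """Difference between two independent Gaussian measurements,
    in units of their combined standard deviation."""
    return abs(value_a - value_b) / math.hypot(err_a, err_b)

# Illustrative round numbers in km/s/Mpc, not the exact published values.
ladder = (73.0, 1.0)
relic = (67.4, 0.5)
print(f"{tension_in_sigma(*ladder, *relic):.2f} sigma")
```

Note that the larger of the two uncertainties dominates the denominator, which is why shrinking the distance ladder’s error bars is what pushed this comparison across the 5-σ line.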

Credit: A.G. Riess et al., ApJ, 2022

We’ve studied the Universe thoroughly enough that we’ve been able to draw a set of remarkable conclusions concerning what can’t be causing this discrepancy between the two different sets of methods. It isn’t due to a calibration error; it isn’t due to any particular “rung” on the cosmic distance ladder; it isn’t because there’s something wrong with the cosmic microwave background; it isn’t because we don’t understand the period-luminosity relationship; it isn’t because supernovae or their environments evolve; it isn’t because we live in an underdense region of the Universe (that’s been quantified and can’t do it); and it isn’t because a conspiracy of errors is biasing all our results in one particular direction.

We can be quite confident that these different sets of methods really do yield different values for how quickly the Universe is expanding, and that there isn’t a flaw in any of them that could easily account for it. This forces us to consider what we once thought unthinkable: Perhaps everyone is correct, and there’s some new physics at play that’s causing what we’re observing as a discrepancy. Importantly, because of the quality of observations that we have today, that new physics looks like it occurred during the first ~400,000 years of the hot Big Bang, and could have taken the form of one type of “energy” transitioning into another. When you hear the term “early dark energy,” which you no doubt will over the coming years, this is the problem it’s attempting to solve.

As always, the best thing we can do is obtain more data. With gravitational wave astronomy just getting started, more standard sirens are expected in the future. As James Webb takes flight and 30-meter-class telescopes come online, as well as the Vera Rubin Observatory, strong lensing surveys and large-scale structure measurements should improve dramatically. A resolution to this current conundrum is far more likely with improved data, and that’s exactly what we’re attempting to uncover. Never underestimate the power of a quality measurement. Even if you think you know what the Universe is going to bring you, you’ll never know for certain until you go and find out the scientific truth for yourself.