The Neuroscience of Cocktail Party Conversation

So you’re at a cocktail party, and, like at most cocktail parties, there are a handful of conversations happening around the room. Yet, despite the dull roar of laughter and discussion, you have no trouble focusing on the voice of the person with whom you’re speaking. You see her eyes and lips moving and you understand every word she is saying. But as often happens, you begin to lose interest in the conversation. And though you continue to nod your head and say things like, “Uh-huh, Oh really?” you consciously turn your attention to the uproarious banter of a more enticing conversation happening elsewhere in the room.

How is it that in a blink of an eye, we’re able to selectively refocus our auditory attention to a distant conversation, while ignoring the conversation that’s happening right in front of us? The answer, it turns out, is a mystery.

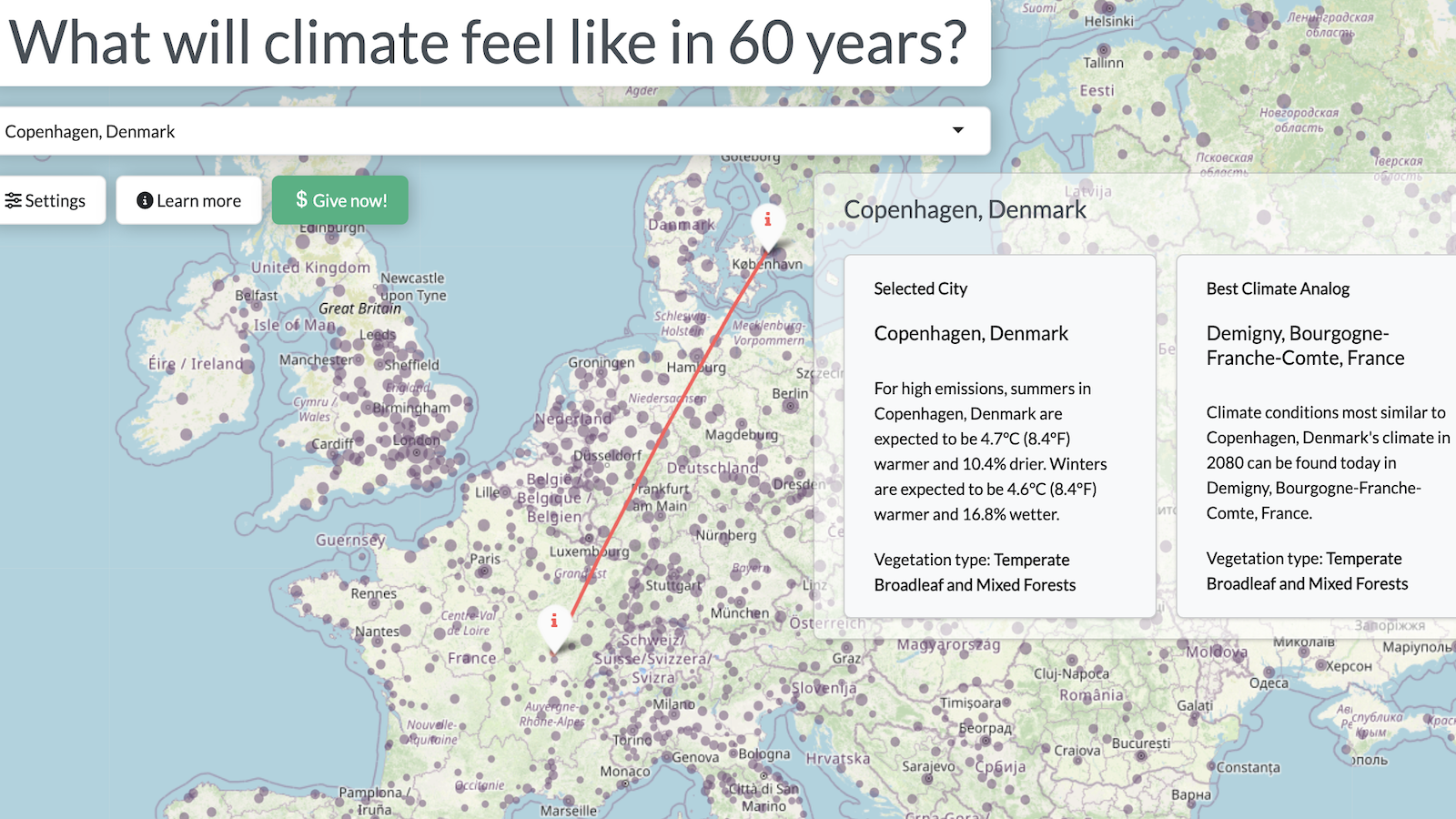

According to Tony Zador, a Professor of Biology and Program Chair of Neuroscience at the Cold Spring Harbor Laboratory, the brain mechanisms responsible for any kind of attention, whether visual or auditory, are probably not fundamentally different. However, the challenge of understanding how the brain goes about selectively listening to one cocktail party conversation over another is twofold. The first issue is one of computation: “We have a whole bunch of different sounds and from a bunch of different sources and they are superimposed at the level of the ears and somehow they’re added together and to us it’s typically pretty effortless to separate out those different threads of the conversation, but actually that’s a surprisingly difficult task,” says Zador. Scientists were able to program computers to recognize speech in controlled situations and quiet rooms as far back as ten years ago, says Zador, but when deployed in real-world settings with background noise, their algorithms fail completely. Humans, on the other hand, have evolved to effortlessly break down the components of an auditory scene.
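To make the computational problem concrete, here is a minimal sketch in Python of what "superimposed at the level of the ears" means. The tone frequencies, the sample rate, and the helper names `tone` and `mix` are illustrative assumptions, not anything from Zador's work. The sketch shows that two quite different sets of sources can add up to exactly the same signal, so the mixture alone does not say which sources produced it.

```python
import math

def tone(freq_hz, n_samples, sample_rate=8000, amplitude=1.0):
    """Return n_samples of a sine wave at freq_hz (a stand-in for one voice)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]

def mix(*sources):
    """Superimpose sources sample by sample, the way sound pressures add."""
    return [sum(samples) for samples in zip(*sources)]

n = 64
voice_a = tone(200, n)   # hypothetical "near" speaker
voice_b = tone(900, n)   # hypothetical "far" speaker
mixture = mix(voice_a, voice_b)

# A completely different pair of sources yields the same mixture:
# each one is simply half of the combined signal.
alt_a = [0.5 * m for m in mixture]
alt_b = [0.5 * m for m in mixture]
alt_mixture = mix(alt_a, alt_b)

same = all(math.isclose(x, y, abs_tol=1e-12)
           for x, y in zip(mixture, alt_mixture))
print("Different sources, identical mixture:", same)
```

Separating the threads therefore needs extra knowledge about what voices tend to sound like, which may be part of why the task is hard for algorithms.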

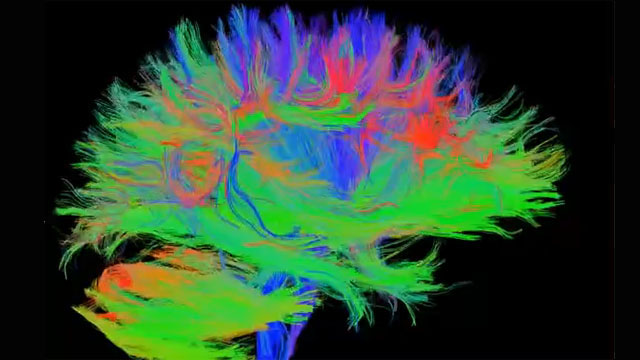

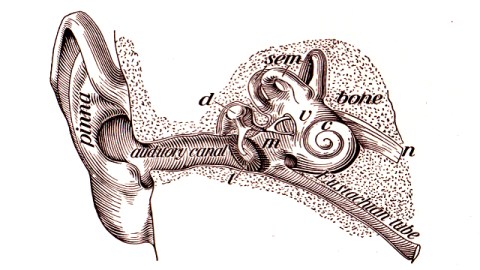

The second issue is one of selection. When we listen to one conversation over another, we are choosing to redirect our auditory attention, but neuroscientists have yet to discover how the routing of these decisions happens. Scientists know that sound waves are received by a structure in your ear called the cochlea. Within the cochlea there are neurons that are exquisitely sensitive to minute changes in pressure. Together, these neurons act in the cochlea as a spectral analyzer—some are sensitive to low frequency sounds, others are sensitive to middle frequency sounds and others are sensitive to high frequency sounds—and each is coded separately along a set of nerve fibers that lead to the brain’s thalamus. The thalamus acts as a “pre-processor” for all sensory modalities, converting distinctly different input from the eyes and ears into a “standard form” of sensory input before passing it along to the cortex of the brain.
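The "spectral analyzer" idea can be illustrated with a rough software analogy. The sketch below is not a model of the cochlea: it uses a plain discrete Fourier transform, and the sample rate, band edges, and function names are all assumptions chosen for illustration. It takes a signal containing a low and a high tone and reports how much energy falls into separate low, middle, and high frequency channels.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz, assumed for illustration
# Assumed band edges in Hz; real cochlear channels are far more numerous.
BANDS = {
    "low": (0.0, 500.0),
    "middle": (500.0, 2000.0),
    "high": (2000.0, float("inf")),
}

def dft_power(signal):
    """Power at each non-negative frequency bin, via a naive DFT."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(signal))) ** 2
        for k in range(n // 2 + 1)
    ]

def band_energies(signal, sample_rate=SAMPLE_RATE):
    """Sum spectral power inside each band: one 'channel' per band."""
    bin_hz = sample_rate / len(signal)
    energies = {name: 0.0 for name in BANDS}
    for k, power in enumerate(dft_power(signal)):
        freq = k * bin_hz
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                energies[name] += power
    return energies

# A 50 ms test sound: a strong 200 Hz tone plus a weaker 3000 Hz tone.
n = 400
signal = [
    math.sin(2 * math.pi * 200 * i / SAMPLE_RATE)
    + 0.5 * math.sin(2 * math.pi * 3000 * i / SAMPLE_RATE)
    for i in range(n)
]
energies = band_energies(signal)
for name, value in energies.items():
    print(f"{name:>6}: {value:.1f}")
```

In this example the low channel carries the most energy, the high channel less, and the middle channel essentially none, which is the sense in which each frequency range is "coded separately."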

“Where we really lose track of [the input] is when they enter into the early parts of the sensory cortex,” says Zador. “Attention is a special case of that kind of general routing problem that the brain faces all the time, because when you attend to let’s say your sounds rather than your visual input or when you attend to one particular auditory input out of many, what you’re doing is you’re selecting some subset of the inputs, in this case the input coming into our ears, and subjecting them to further processing and you’re taking those signals and routing them downstream.” How that routing happens is the basis of Zador’s research.

Ultimately, Zador says he would like to understand how attention works in humans, but the neural activity of rodents is more easily manipulated and analyzed than that of monkeys or humans. To study auditory attention in rodents, Zador has designed a special behavioral box in which he and his colleagues have trained rodents to perform attentional tasks. The setup looks like a miniature talk-show set, in which the participant, a rat, is asked to choose between three ports by sticking his nose through a hole. When the rat sticks his nose through the center port, he breaks an LED beam that signals a computer to deliver a specified type of stimulus, such as a high- or low-frequency sound. When the computer presents a low-frequency sound, for example, the rat goes to one of the ports and gets a reward, a small amount of water. If the rat breaks the beam and receives a sound of a different frequency, he goes to the other port and gets a reward. After a while, the rat understands what he is supposed to do and reacts more quickly to the differing frequencies. Then, Zador challenges the rat by masking the frequencies with low levels of white noise.
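The logic of a single trial in a task like this can be sketched in a few lines of Python. This is a hypothetical illustration, not the lab's actual control software: the mapping of low tones to the left port and high tones to the right port, and every name in the code, are assumptions made for the example.

```python
import random
from dataclasses import dataclass

# Assumed mapping; the article only says each tone is paired with one port.
PORT_FOR_TONE = {"low": "left", "high": "right"}

@dataclass
class TrialResult:
    tone: str
    choice: str
    rewarded: bool

def run_trial(tone, choose):
    """A center-port poke triggers `tone`; `choose` returns the side port picked."""
    choice = choose(tone)
    return TrialResult(tone, choice, rewarded=(choice == PORT_FOR_TONE[tone]))

def trained_rat(tone):
    """A rat that has learned the tone-to-port rule."""
    return PORT_FOR_TONE[tone]

def make_naive_rat(seed):
    """A rat that has not learned the rule yet and picks a side at random."""
    rng = random.Random(seed)
    return lambda tone: rng.choice(["left", "right"])

def fraction_rewarded(choose, n_trials=200, seed=0):
    """Run many trials with randomly chosen tones and report the reward rate."""
    rng = random.Random(seed)
    results = [run_trial(rng.choice(["low", "high"]), choose)
               for _ in range(n_trials)]
    return sum(r.rewarded for r in results) / n_trials

print("trained:", fraction_rewarded(trained_rat))
print("naive:  ", fraction_rewarded(make_naive_rat(seed=1)))
```

A trained animal is rewarded on every trial here, while a naive one hovers around chance. Masking the tone with white noise would correspond to making `choose` less reliable, which this sketch leaves out.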

Zador’s experiment demonstrates that rats can take sound stimuli and use them to guide their behavior from one port to the other. It also provides a basis for Zador to present the rats with more complicated tasks that demand their attention, for example by manipulating when the high- or low-frequency sound is presented and how deeply it is buried in a cacophony of distractor sounds. Zador has found that when the rats ramp up their attention in expectation of hearing the sounds, they respond to the target faster than if the target comes unexpectedly. This, he says, is one of the hallmarks of attention: an improvement in performance as measured either by speed or accuracy. Zador has also found that there are neurons in the rats’ auditory cortex whose activity is especially enhanced when the rats expect to hear the target, compared to when that same target is presented at an unexpected moment.

In better understanding the neural circuits underlying attention, Zador may be paving the way for better understanding disorders like autism, which is, in large part, a disorder of neural circuits. “We think that the manifestation of the environmental and genetic causes of autism is a disruption of neural circuits and in particular there is some reason to believe that it’s a disruption of long range neural circuits, at least in part, between the front of the brain and the back of the brain,” says Zador. “Those are the kinds of neural pathways that we think might be important in guiding attention.” One of the ongoing projects in Zador’s lab is to examine in mice the effects of genes that scientists think, when disrupted, cause autism in humans. “We don’t really have final results yet, but that is because these mice have only very recently become available,” he says. “So we’re very optimistic that by understanding how autism affects these long range connections and how those long range connections in turn affect attention that we’ll gain some insight into what is going on in humans with autism.”

The Takeaway

The brain’s ability to selectively focus on certain aspects of auditory scenes continues to perplex neuroscientists, but a continued effort to understand the neural circuits underlying attention in rats is giving scientists a better understanding of disorders like autism.

Understanding selective auditory attention in the brain may also help scientists begin to better comprehend other modalities of attention, such as visual attention, which psychologists have shown plays a crucial role in our ability, or lack thereof, to perform seemingly mundane tasks like driving while talking on a cell phone.

More Resources

— Nature article giving a more in-depth description of Tony Zador’s rat experiment (2010).

— More information on the relation between auditory perception and Autism at the Laboratory for Research into Autism.

— A computational model of auditory selective attention (2004).