AI Now Has “Gaydar”—A Controversial Claim, But Is It Unethical?

We can tell a lot about a person by looking at their face. According to a new study, AI may be able to do it better.

Glad to see that our work inspired debate. Your opinion would be stronger, have you read the paper and our notes: https://t.co/dmXFuk6LU6 pic.twitter.com/0O0e2jZWMn

— Michal Kosinski (@michalkosinski) September 8, 2017

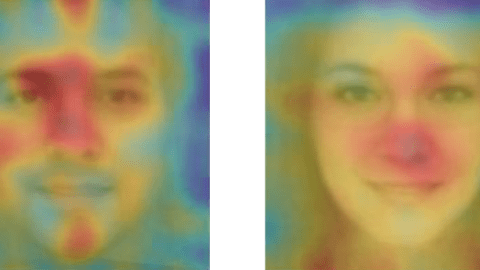

In a controversial new study that is setting the internet ablaze, researchers claim that artificial intelligence can accurately detect a person's sexual orientation. The study, "Deep neural networks are more accurate than humans at detecting sexual orientation from facial images," used deep neural networks to analyze more than 35,000 publicly available photos from a dating site on which users had indicated their sexual orientation. The paper, accepted for publication in the Journal of Personality and Social Psychology, was co-authored by Yilun Wang and Michal Kosinski, a professor at the Stanford University Graduate School of Business who is also a computational psychologist and big data scientist.

Human judges working without AI assistance correctly distinguished gay from straight men 61% of the time, and gay from straight women 54% of the time. The algorithm did so 81% of the time for men and 71% of the time for women. To make this determination, the program examined not only fixed facial features but also transient ones, such as grooming style.

Please read a summary of our findings https://t.co/a5AWEe7UwM before hiring the firing squad. pic.twitter.com/SzaEajhgty

— Michal Kosinski (@michalkosinski) September 10, 2017

Are We Shooting the Messenger?

The controversy likely stems from a confluence of factors:

1. The research treats sexuality in binary terms (straight or gay), while much of the societal conversation has focused on a greater appreciation of sexual and gender fluidity that does not fit within a binary understanding.

2. Our sexual orientation is seen as something private that we own. If AI can determine our sexuality with even moderate accuracy, we may feel incredibly vulnerable, much like the old x-ray glasses gag that is supposed to make us feel naked and exposed.

3. The potential for misuse seems obvious. What may seem like a fun parlor trick, or a testament to advancing deep neural networks, could also be used by governments to prosecute people in countries where being gay (or otherwise non-straight) is a punishable offense. At the time of writing, there were at least ten countries where being gay is punishable by death, and 74 where it is illegal.

With the release of Apple's iPhone X, facial recognition technology officially enters the mainstream. That brings concerns about abuse, though at least one prominent surveillance critic, Edward Snowden, has approved of the phone's "panic disable" function.

This controversy has to be viewed in light of a larger question: "What ethical responsibility do researchers bear for the potential misuse of their findings or creations?" The heated debate around this research comes on the heels of another controversial study of facial features, in which an algorithm could identify a person even when their face was partly obscured by glasses or a scarf.

Yes, we can & should nitpick this and all papers but the trend is clear. Ever-increasing new capability that will serve authoritarians well.

— Zeynep Tufekci (@zeynep) September 4, 2017

What may be getting lost in the cacophony of online comments is that Michal Kosinski, a co-author of the AI/sexuality study, was aware of, and direct about, this potential for misuse.

"Those findings advance our understanding of the origins of sexual orientation and the limits of human perception. Additionally, given that companies and governments are increasingly using computer vision algorithms to detect people’s intimate traits, our findings expose a threat to the privacy and safety of gay men and women." (From "Deep neural networks are more accurate than humans at detecting sexual orientation from facial images" [introduction], Journal of Personality and Social Psychology.)

The Journal of Personality and Social Psychology has since notified Kosinski of ethical concerns about the study (“In the process of preparing the [manuscript] for publication, some concerns have been raised about the ethical status of your project. At issue is the use of facial images in both your research and the paper we accepted for publication”). That stands in contrast to the journal's initial response when it accepted the research.

According to a new article in Inside Higher Ed, Kosinski received the following message from the Journal of Personality and Social Psychology: “Your work is very innovative, and carries far-reaching implications for social psychology and beyond. I am therefore very pleased to accept this version of the paper in [the journal]. Congratulations!”

A thorny question raised by this controversy is how much attention we should place on research that has the potential for misuse, versus on the authoritarian leaders who may abuse its findings. Although the study has been criticized for relying only on photos of Caucasian people, its authors clearly showed awareness and consideration of potential misuse.

While we in no way want to perpetuate stereotypes or narrow-minded thinking, we also need to be careful not to suppress science and speech.
