To fear AI is to fear Newton and Einstein. There are no “dragons” here.

In 1516, Thomas More wrote Utopia, a satire set in an idealized island society where people live in harmony under perfected laws, politics, and social norms. Ever since, we’ve used “utopia” as a term of derision, describing a bug in the human operating system: we yearn for perfection and mistake that hope for a possible future.

And yet there’s a reason we dream of it. For much of human history, we’ve lived in a state of want — want for better living conditions, material resources, health, education. We want to live better.

Subscribe to Rohit Krishnan’s substack:

But we also fear the unknown. In the old maps, we would write hic sunt dracones (“Here be dragons”) about places we didn’t know. Over the centuries we dared slay those dragons, each one seemingly more fantastic than the one before, and brought ourselves forward. The cure to dragons, it turned out, was knowledge.

Distilled knowledge became technology, the ability to do more than we ever thought we could: from dragging blocks heavier than a house, to moving electrons reliably from one place to another, to building heavier-than-air machines that fly.

A few things helped us move toward the better, if imperfect, utopia we see around us today. Energy powered our technology: first animal power, then fossil fuels, and now the atom itself. We built our technology from materials, from the humble tree to carbon nanotubes and beyond. And our designs came from knowledge, the most elusive of ingredients, which we learned to mix through painful trial and error over generations. We developed the ability to slowly reason our way through difficult problems and to pass our knowledge to others so they could learn faster than we did.

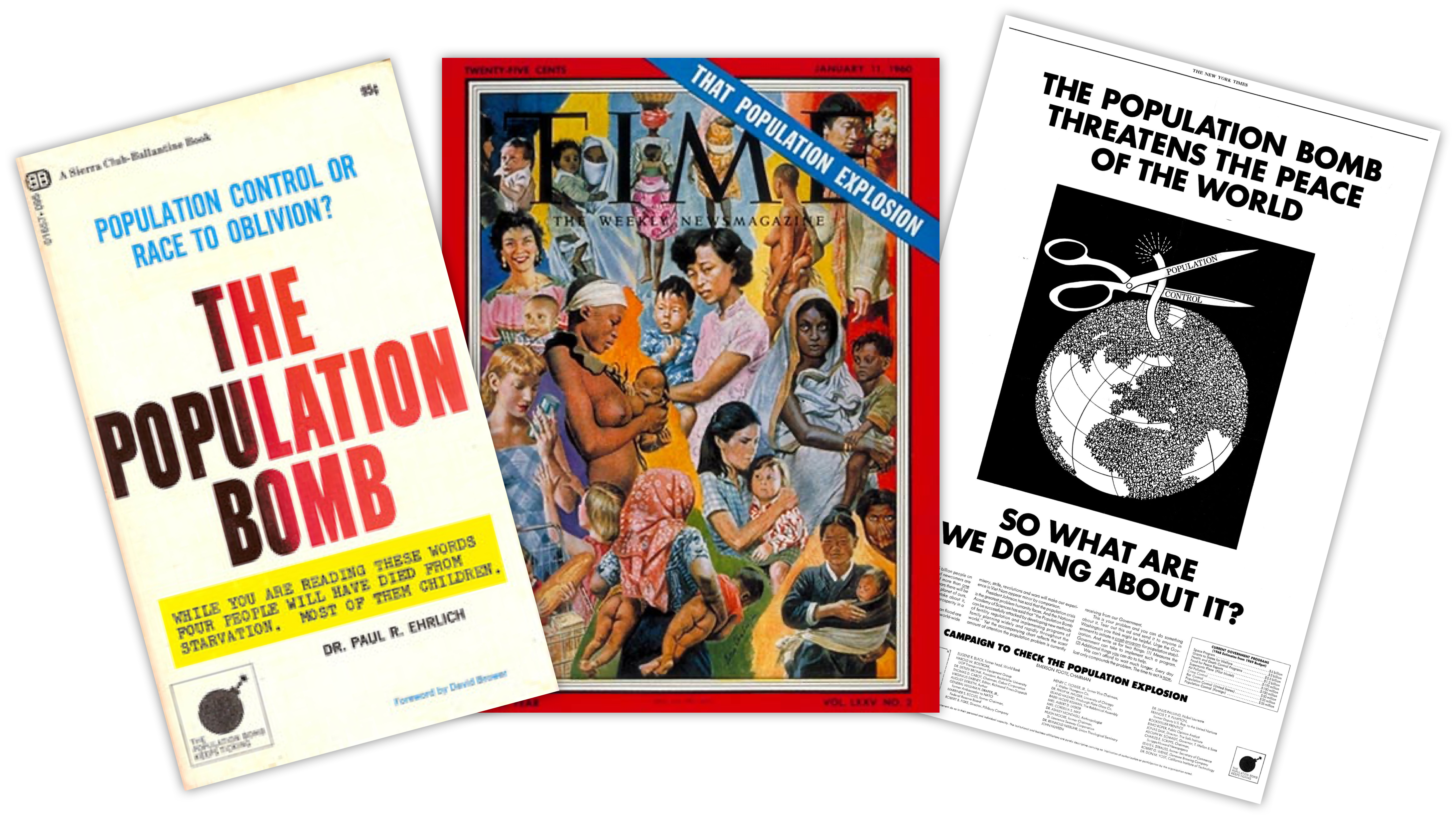

But for the past few decades, we’ve been stuck: energy extraction has peaked, and we’ve sunk a little under the weight of our achievements. The low-hanging fruit has been plucked. Having cured certain diseases, put men on the Moon, and created incredible means of communication and new medicines, we now face stagnation.

AI could help us move forward again by augmenting cognition for all.

The AI revolution offers a way to deal with our problem of information overload. AI, especially in its current form of large language models like OpenAI’s GPT, is a fuzzy processor. It recognizes patterns complex enough that it can learn language, write code, and compose poetry. It can paint like Caravaggio or write new music in the style of Bach. It can convert seemingly any information from one form to another: words to images, images to music, words to video, numbers to essays.

Still, language models are only part of the story. We’ve had AIs like AlphaFold, which was trained specifically to predict protein structures and does so spectacularly. There were game-winning AIs in chess and Go, and now Counter-Strike. There are negotiation AIs that perform well in multi-party negotiations. AlphaZero learned to play multiple games at a superhuman level given only their rules, with no game-specific strategy programmed in.

But now that we have finally found a way to make AI understand us, by letting us speak to it directly, we seem to be getting scared.

The catch is that the property that makes AI great, the reason you can ask for something in natural language and get a Python script back, is the same one that makes it liable to misunderstand or misrepresent points of view, and to hallucinate.

The level of experimentation with AIs has already been off the charts. We’ve started seeing them perform tasks autonomously. We’ve started seeing multimodal abilities. Context windows are growing. Memories are being added, both short- and long-term. Models can be fine-tuned on the go. And if, in the near future, we can combine what already exists with a reinforcement learning paradigm, we might be able to bootstrap something resembling actual intelligence.

But none of this is easy, and none of it is a fait accompli. What upsets me is the underlying assumption that we will inevitably, inexorably, reach (and surpass) actual intelligence. It treats all of us who are excited about this future and want to build it as agentless flotsam drifting through history.

Nothing is going to get built unless we build it. And, importantly, we wouldn’t want to build it unless there’s some value we can get from it or an end that’s useful. And nothing gets used unless it’s useful.

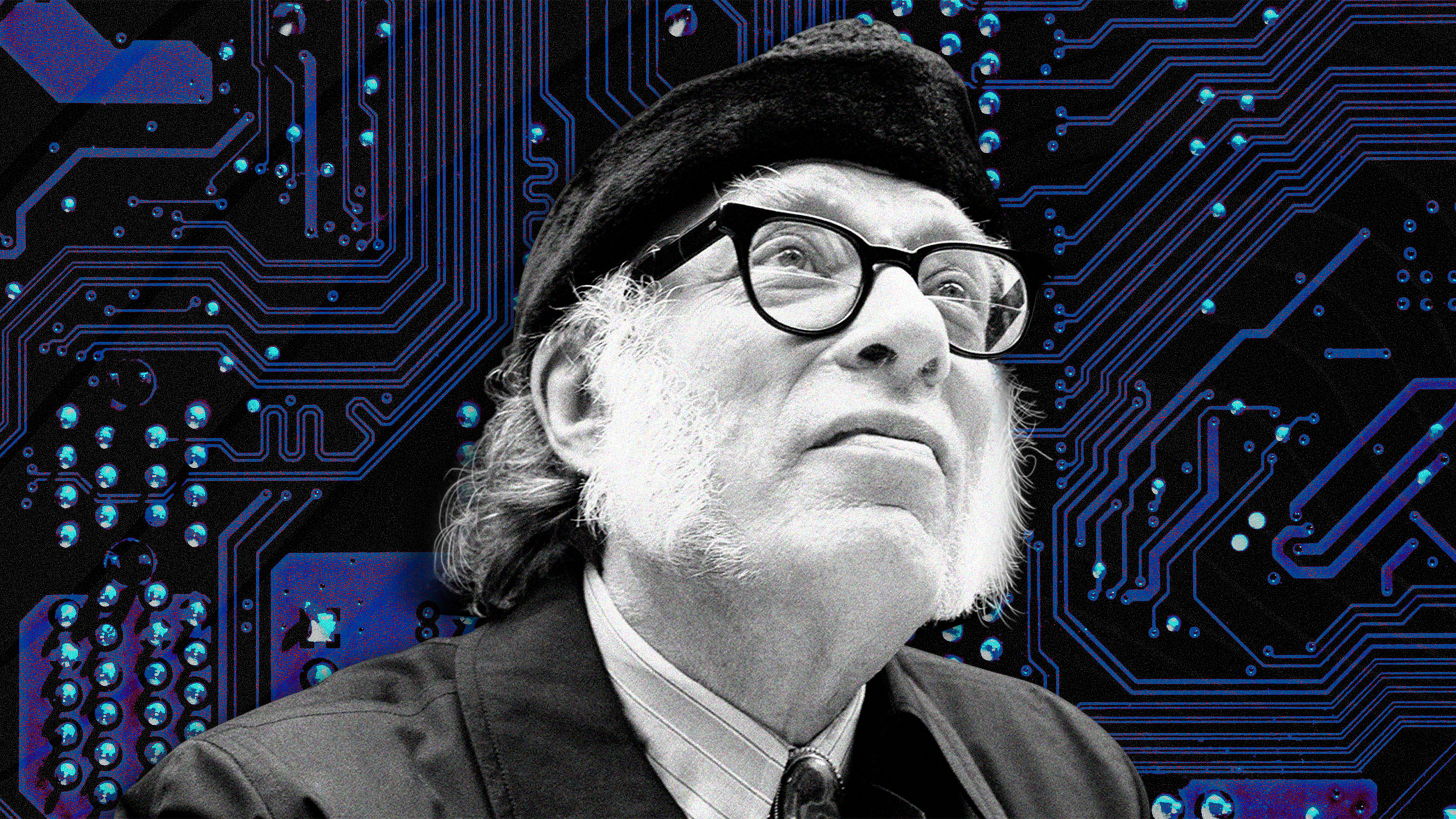

And the size of the prize is already immense. There aren’t many times in history we’ve gotten a legitimate cognitive upgrade, but when we did, whether through better education or the advent of scientific thinking, it had an outsized impact on everything thereafter!

For instance, many data scientists say that as much as 90% of their job is data cleaning and preparation. Imagine if even that could be cut in half. Or think about a time in your life when you had too much to do: too much admin work, too many meetings to arrange, too many schedules to manage. Or too much data to analyze, too much code to write, too many papers to grade. In nearly every job, in every niche, this has been a common problem for decades.

What wouldn’t you give to have an automated assistant who could read and summarize things for you? Or who could write your emails? Or write first drafts of reports? Or analyze data that you just don’t want to analyze, because it’s banal?

Sure, today’s AI is like an excitable and not particularly reliable assistant: brilliant but flaky. Still, it’s a great start while we work to make these systems more reliable and rein in their fanciful nature.

What we’re learning about its impact, however, holds a mirror up to our own work. We’re learning that our efforts, even at the cutting edge of many fields, include a ton of work-work: boring tasks that just have to get done so you can get to the fun or rewarding part of the job. That rewarding part is a fleeting feeling you experience maybe a few times in your career, at the far end of exhausting, mundane work.

Shouldn’t we try to reach the point where humanity’s professed dreams are fulfilled? Newton’s year of reflection and moments of inspiration. Einstein riding his bicycle and visualizing the invariant laws of physics. Percy Spencer realizing that a radar magnetron had melted the chocolate bar in his pocket. Tim Berners-Lee proposing a web of hypertext links.

Creating more such moments is not just important or useful — it’s as close to a sacred duty as we have, and it might be the closest we can come to building a utopia. We attempt to get there through education and civilization. To fear the fruits of this is to fear ourselves.

Thomas More didn’t think his utopia was a realistic possibility. Rather, his idealized world was meant to highlight the shortcomings of 16th-century English society. Today, we can see the shortcomings of our own society in technological and scientific stagnation, and in the countless hours we waste on busywork.

AI won’t usher in utopia either, but it can help us move forward. So when we hear people discussing their AI fears in Senate hearings, arguing for AI bans in open letters, or calling for airstrikes on data centers, we can separate realistic worries from fanciful projections unlikely to ever manifest in the real world. Runaway anxieties shouldn’t be what steers us into the future.

This article was originally published by our sister site, Freethink.