Robots who share your accent are more trusted, study shows

- Robots often sport a standardized accent because such an accent is believed to make them sound more competent.

- However, a recent study suggests that when a robot speaks in a user’s dialect, the user finds it more trustworthy and competent.

- Other factors could also affect a user’s preference, such as physical appearance and whether the voice sounds typically male or female.

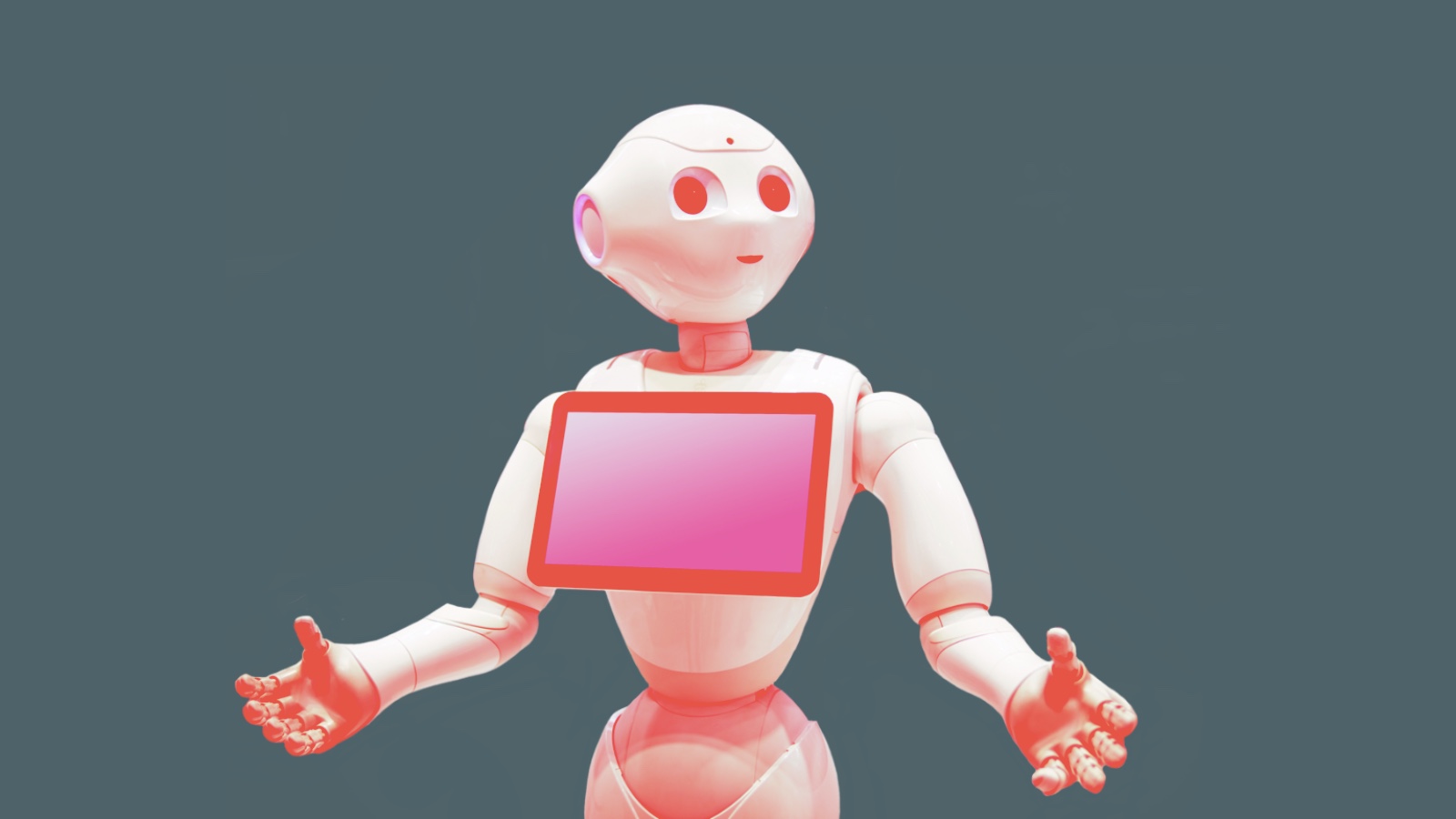

As robots become a bigger part of our everyday social lives, designers are increasingly considering how to get humans to trust them. A common expectation is that robots should behave in ways that align with our culture and social norms, and few aspects of these are more noticeable than the voices, accents, and dialects robots use to talk to us.

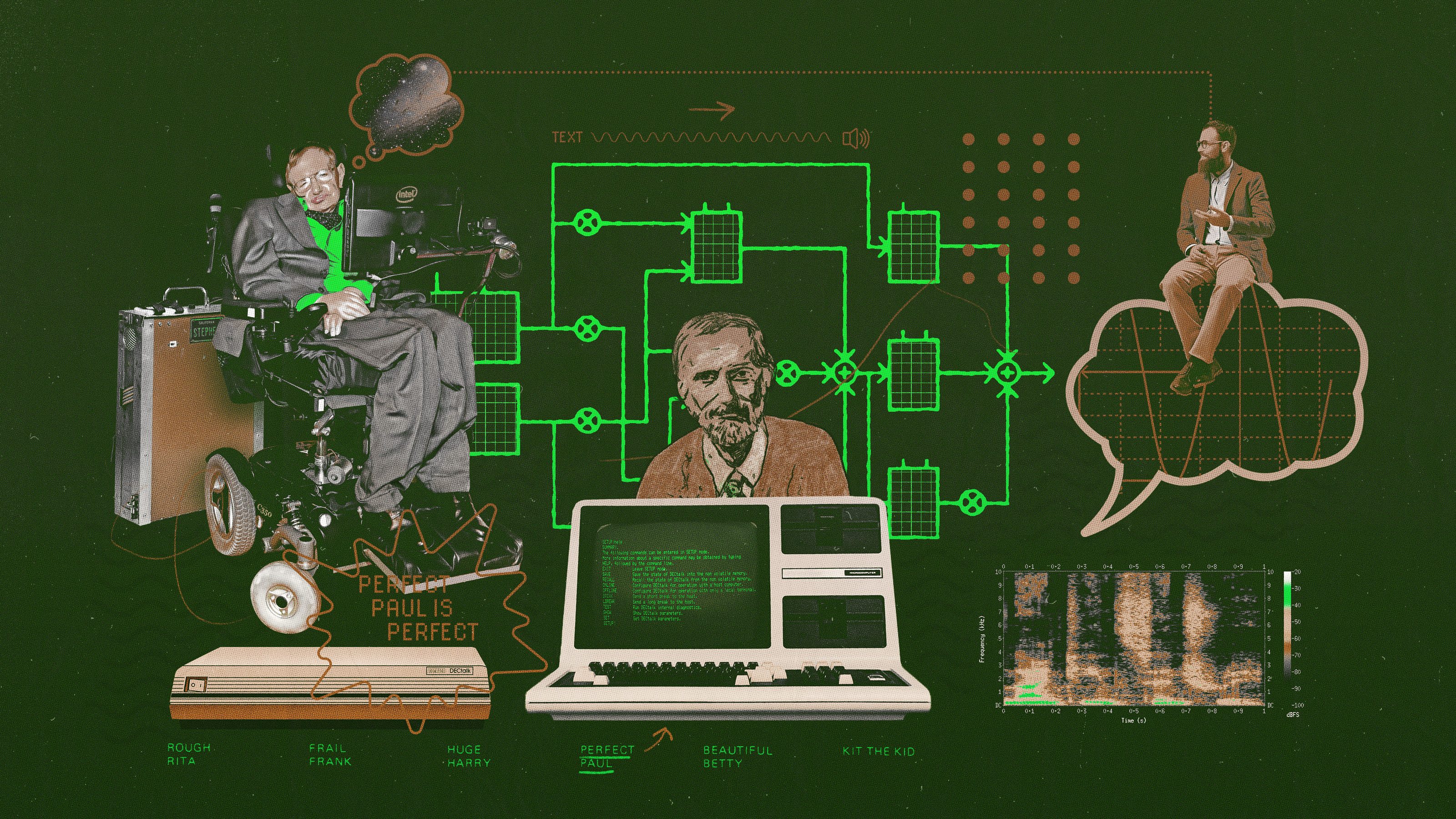

Imagine a “robot voice” in your head. If your idea of one has been shaped by decades of sci-fi, it probably sounds clinical, stilted, and authoritative, with a generic, hard-to-place accent. Today, however, machines are starting to talk more and more like people. How will that affect how we perceive them?

The challenge: So far, studies have reached conflicting conclusions about whether people prefer robots to speak to them in standardized accents or in regional accents and dialects similar to their own.

While robots speaking in standardized accents are widely seen as more intelligent and competent, some researchers argue that robots could seem more familiar and comforting if they speak with regional accents and dialects, possibly making them seem more trustworthy.

The survey: In a new experiment detailed in Frontiers in Robotics and AI, a team of researchers in Germany aimed to get a clearer picture of how people perceive robots based on the dialect they use.

In the study, Katharina Kühne and colleagues at the University of Potsdam recruited 120 native German speakers living in Berlin and the surrounding region of Brandenburg. They then showed them a video of the not-quite-two-foot-tall Nao robot speaking either standard German or the Berlin dialect. In the dialect, “ich bin ein Berliner” becomes “ick bin een Berlina”, a way of speaking widely perceived as friendlier and more working-class.

Perception: Afterwards, the researchers asked participants to rate how competent and trustworthy they found the robot. In general, the results showed that participants preferred the robot speaking standard German, but not in every case.

Participants who were more proficient in the Berlin dialect and spoke it more regularly perceived the dialect-speaking robot as more trustworthy. Overall, participants who perceived the robot as more competent and intelligent also tended to judge it as more trustworthy.

Kühne’s team acknowledged that many factors they didn’t test could affect users’ preferences, including the robot’s physical appearance, whether its voice sounds male or female, and the deeper social and political connotations of different dialects. All the same, their study provides valuable insights into how the use of language can influence our interactions with, and perceptions of, robots.

Informing the future: Increasingly, social robots are finding their way into areas of everyday life as wide-ranging as teaching, personal care, and even companionship. As this social landscape changes, the team’s results could ultimately offer important guidance on how robots can be tailored to meet the needs and expectations of users from a diverse range of backgrounds.

This article was originally published by our sister site, Freethink.