How viral social media memes trigger real-world violence

Credit: Christian Buehner on Unsplash

- It can be hard to believe that comical images online can rile people up so much that they’ll actually attack.

- Originating in the darker corners of the internet, Boogaloo is now prominent on mainstream online platforms like Facebook and Instagram.

- The Network Contagion Research Institute’s recent series of Contagion and Ideology Reports uses machine learning to examine how memes spread.

There has been talk lately about fringe hate groups using memes to incite real-world violence, but many prefer to casually dismiss this narrative as hysteria. It can be hard to believe that comical images online can rile people up so much that they’ll actually attack, and for most of us, thankfully, they don’t.

If you spend your time scrolling through pumpkin spice recipes and cat videos, you’re probably not the target of a systematic hate campaign. But that doesn’t mean these campaigns don’t exist. Not every Facebook user is susceptible to radicalization, but memetic warfare is increasingly being recognized as an important element of counter-governmental sedition and racist propaganda.

Studies have long shown that exposure to hate makes it easier for people to hate – and to act on their hate. If there is an organized effort by hate groups to manipulate people with memes, then these visuals may actually be influencing people to perpetrate violent attacks.

What kind of memes were used on the Facebook Event listing that the Kenosha Guard used to mobilize Kyle Rittenhouse?

Many netizens appreciate the dangers and want social media platforms to do much more to curb the spread of hate across their networks. From Snapchat to Gab, TikTok to WhatsApp, memes are fueling hatred and inciting violence, and more Americans are asking what responsibility these companies should take in policing the spread of harmful content.

The Network Contagion Research Institute’s recent series of Contagion and Ideology Reports leverages machine learning to examine how memes spread. The idea is to unearth a better understanding of the role memes play in encouraging real-world violence.

So what exactly is a meme, and how did it become a tool for weaponizing the web? Originally coined by Oxford biologist Richard Dawkins in his 1976 book “The Selfish Gene,” the term is defined there as “a unit of cultural transmission” that spreads like a virus from host to host and conveys an idea which changes the host’s worldview.

Dawkins echoes “Naked Lunch” author William Burroughs’s concept of written language as a virus that infects the reader and consequently builds realities that are brought to fruition through the act of speech. In this sense, a meme is an idea that spreads by iterative, collaborative imitation from person to person within a culture and carries symbolic meaning.

The militant alt-right’s use of internet memes follows this pattern. The memes are carefully designed on underground social media using coded language, then win approval from like-minded users who disseminate them on mainstream platforms like Twitter or Facebook.

Sometimes these messages are lifted from innocuous sources and altered to convey hate. Take for example Pepe the Frog. Initially created by cartoonist Matt Furie as a mascot for slackers, Pepe was appropriated and altered by racists and homophobes until the Anti-Defamation League branded the frog as a hate symbol in 2016. This, of course, hasn’t stopped public figures from sharing variations of Pepe’s likeness in subversive social posts.

The dissemination of hateful memes is often coordinated by an underground movement, so even its uptake by mainstream internet users and media outlets is claimed as a victory by a subculture that prides itself on its trolling.

Indeed, these types of memes have a tricky way of moving from the shadows of the internet to the mainstream, picking up supporters along the way – including those who are willing to commit horrific crimes.

This phenomenon extends well beyond politics. Take, for example, the Slenderman. Created as a shadowy figure for online horror stories, Slenderman achieved mainstream popularity and a cult-like following. When, in 2014, two girls tried to stab their friend to death to prove that Slenderman was real, the power of the meme to prompt violence became a national conversation.

Slenderman creator Victor Surge told Know Your Meme that he never thought the character would spread beyond the fringe Something Awful forums. “An urban legend requires an audience ignorant of the origin of the legend. It needs unverifiable third and forth [sic] hand (or more) accounts to perpetuate the myth,” he explained. “On the Internet, anyone is privy to its origins as evidenced by the very public Somethingawful thread. But what is funny is that despite this, it still spread. Internet memes are finicky things and by making something at the right place and time it can swell into an ‘Internet Urban Legend.’”

While Slenderman is obviously a fiction, political memes walk many of the same fine lines – and have galvanized similarly obsessed followers to commit attacks in the real world.

Take, for example, the Boogaloo movement. This extremist militia has strong online roots and uses memes to incite violent insurrection and terror against the government and law enforcement. Originating in the darker corners of the internet, Boogaloo is now prominent on mainstream online platforms like Facebook and Instagram. Boogaloo followers share how to build explosives and 3D-printed firearms, spread encrypted messages and distribute violent propaganda.

Boogaloo’s intentions seem to be twofold: recruit new followers and communicate tactical information to existing members. While finding more people to adopt their ideology is one thing, there is a strong possibility that Boogaloo memes and messaging could be used to trigger sleeper cells to violent action.

Boogaloo isn’t alone. The use of memes by groups like the Proud Boys, which operate on an ideology consisting of both symbolic and physical violence, is spreading across America – especially since the start of the pandemic.

The Network Contagion Research Institute (NCRI) uses advanced data analysis to expose hate on social media. The institute’s scientists work with experts at the ADL’s Center on Extremism (COE) to track hateful propaganda online and offer strategies to combat this phenomenon. By better understanding how memes spread hateful propaganda, the NCRI is helping law enforcement, social media companies and citizens get better at preventing online chatter about violence from becoming real action.

Founded by Princeton’s Dr. Joel Finkelstein, the NCRI is finding that the spread of hate online can be examined using epidemiological models of how viruses spread, only applied to language and ideas, much as Burroughs imagined decades ago.
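To make the epidemiological analogy concrete, here is a minimal sketch of the classic SIR (susceptible, infected, recovered) model recast for a meme. It is an illustration only, not the NCRI's actual method: the function name, the parameter names `share_rate` and `fatigue_rate`, and all numbers are hypothetical assumptions chosen for the example.

```python
def simulate_sir(population, initially_sharing, share_rate, fatigue_rate, steps):
    """Discrete-time SIR model applied to a meme (illustrative assumption).

    susceptible: users who have not yet picked up the meme
    sharing:     users actively spreading it (the "infected")
    dropped:     users who have lost interest (the "recovered")
    """
    susceptible = float(population - initially_sharing)
    sharing = float(initially_sharing)
    dropped = 0.0
    history = [(susceptible, sharing, dropped)]

    for _ in range(steps):
        # New sharers come from contact between susceptible and sharing users.
        new_sharers = share_rate * susceptible * sharing / population
        # A fixed fraction of current sharers loses interest each step.
        new_dropouts = fatigue_rate * sharing

        susceptible -= new_sharers
        sharing += new_sharers - new_dropouts
        dropped += new_dropouts
        history.append((susceptible, sharing, dropped))

    return history


if __name__ == "__main__":
    # Hypothetical values: 10,000 users, 10 initial sharers.
    history = simulate_sir(10_000, 10, share_rate=0.5, fatigue_rate=0.1, steps=100)
    peak_step, peak = max(enumerate(h[1] for h in history), key=lambda p: p[1])
    print(f"Sharing peaks at step {peak_step} with about {peak:.0f} active sharers")
```

In this toy version, the meme takes off only when the share rate outpaces the fatigue rate; otherwise the number of active sharers shrinks from the start. Real analyses of online hate would need far richer data and models than this sketch assumes.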

Now that the internet has obviated the need for people to meet in person or communicate directly, recruiting and deploying members of violent groups is easier than ever before.

Before social media, as Finkelstein reminds us, organizing underground hate groups was harder. “You would have to have an interpersonal organization to train and cultivate those people,” he said. “With the advance of the internet, those people can find each other; what it lacks in depth it makes up in reach. The entire phenomenon can happen by itself without a trace of anyone being groomed.”

Memes generate hysteria in part by being outrageous enough for people, and even the mainstream media, to share. This is a concrete strategy called amplification. The extreme alt-right manages to reach mainstream audiences with racialist and supremacist memes, even as these messages are being denounced.

Content spreads virally whether those spreading it support the embedded ideology or not, making it difficult to intervene and prevent meme-incited violence.

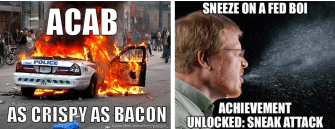

Some people may unwittingly amplify messaging designed to spread violence, mistaking memes like ACAB (all cops are bastards) for harmless if somewhat dark humor.

Credit: NCRI

So what can be done about the meme wars, and what onus is on law enforcement and Big Tech?

Finkelstein recommends a three-pronged approach to combating the violent outcome of subversive memes.

- Use technology to push for more stringent boundaries defining civility online.

- Educate courts, lawmakers and civil institutions about how extreme online communities operate and create better industry standards to regulate these groups.

- Bring the federal government, including the FBI and Homeland Security, up to speed on the new reality so they can intervene as necessary.

But not everyone thinks Big Tech or the law has much ground to stand on in combating viral hate. Tech companies haven’t gone the extra mile in truly enforcing stricter standards for online content, though some are closing down accounts associated with extremist leaders’ websites and their movements.

But this is largely tilting at windmills. The proliferation of memes online spreading ideology virally might be too big to combat without dramatically limiting freedom of speech online. Facebook and YouTube have banned thousands of profiles, but there’s no way of knowing how many remain – or are being added every day. This leaves the danger of meme radicalization and weaponization lurking beneath the surface even as we head into elections.

Boogaloo and other seditious groups have been empowered by recent crises, including the pandemic and the anti-racist protests sweeping the country. Greater numbers of these community members are showing up at anti-quarantine protests and are disrupting other gatherings, and the press they’re getting – this article included – is only bringing more attention to their violent messages.

Extremist memes continue to circulate as America heads to the polls amid a global pandemic and widespread civil unrest. It stands to reason that more blood will be spilled. Getting a handle on Twitter handles that spread hate and shutting down groups whose sole purpose is sowing the seeds of brutality is vital – the only question that remains is how.