Synthetic media: How AI-generated characters spread disinformation

- Synthetic media is a broad umbrella term that includes, among other things, the use of AI to generate “deepfake” photos.

- This technology has advanced rapidly. Malevolent actors are using it to spread extremely convincing propaganda.

- Governments and Big Tech companies are trying to fight back, but there will be unintended consequences.

In the last few years, many strategies and tactics have been used to generate and spread online misinformation. But a recent approach poses a serious and novel threat to our society: using artificial intelligence to create highly realistic photos of fictitious personas who purport to be journalists or field experts.

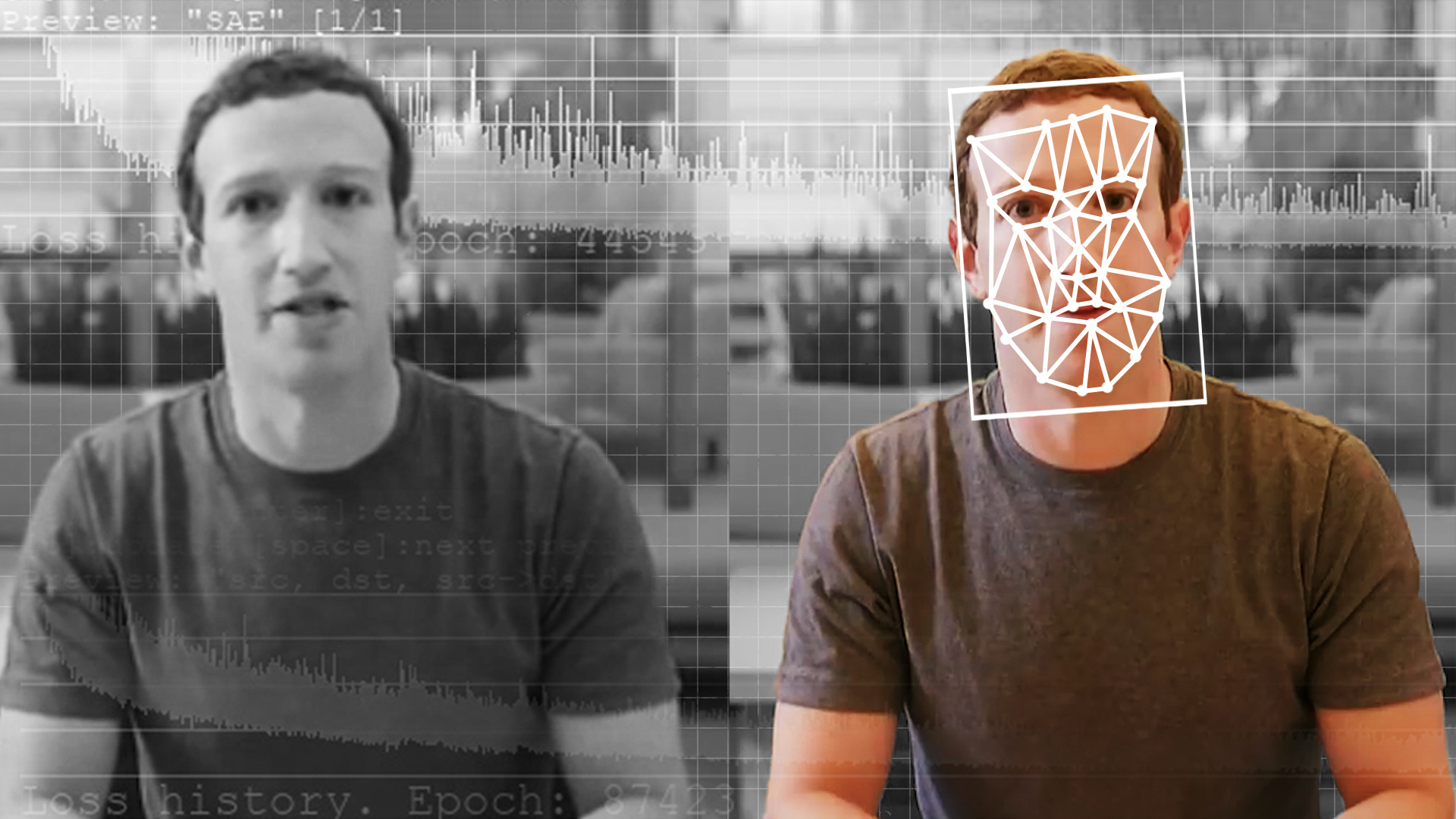

The AI-generated characters fall under a broad umbrella called synthetic media. Their photos are produced with a technique called a generative adversarial network (GAN), in which two neural networks compete: one generates photos, and the other checks whether each photo looks realistic or not. Many websites and applications are now available to generate these photos without the need for any technical background, and the results are incredibly convincing.
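To make the "two networks compete" idea concrete, here is a minimal sketch of the opposing objectives in the standard GAN setup. It is an illustration only, not the code behind any particular face-generation site. The function names `discriminator_loss` and `generator_loss` are hypothetical, and the scores stand in for the checking network's estimated probability (between 0 and 1) that a photo is real.

```python
import math

EPS = 1e-12  # keeps log() away from zero


def discriminator_loss(real_scores, fake_scores):
    """Loss for the checking network (the discriminator).

    It is low when real photos receive scores near 1
    and generated photos receive scores near 0.
    """
    real_term = sum(-math.log(s + EPS) for s in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - s + EPS) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Loss for the photo-creating network (the generator).

    It is low when the discriminator scores generated photos near 1,
    meaning the fakes are being mistaken for real photos.
    """
    return sum(-math.log(s + EPS) for s in fake_scores) / len(fake_scores)


# A sharp discriminator: real photos score high, fakes score low.
sharp = discriminator_loss([0.9, 0.9], [0.1, 0.1])
# A fooled discriminator: it cannot tell the two apart.
fooled = discriminator_loss([0.5, 0.5], [0.5, 0.5])
print(sharp < fooled)  # the sharp discriminator has the lower loss

# The generator's loss falls as its fakes become more convincing.
print(generator_loss([0.9]) < generator_loss([0.1]))
```

During training, each network is updated to lower its own loss, so any improvement in one pushes the other to improve. That tug-of-war is why the resulting photos become so hard to tell apart from real ones.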

Synthetic media and disinformation

In disinformation campaigns, AI-generated characters have been used in three main ways.

The first application is creating fabricated persons who claim to be experts or journalists in order to lend credibility to a particular message. For example, in July 2020, an investigative report revealed a network of fictitious authors who published op-ed pieces in conservative media outlets, such as the Washington Examiner and American Thinker. The network also published in Middle Eastern outlets like Al Arabiya and The Jerusalem Post, with topics ranging from praise for various Gulf countries to criticism of Iran.

In February 2022, Facebook removed a similar Russian network of fictitious personas who claimed to be news editors in Kyiv. The network published articles alleging that the West betrayed Ukraine and that Ukraine is a failed state.

A second application is the creation of fake accounts for more ordinary personas to spread fabricated content. In August 2021, a research center discovered a network of 350 fake social media accounts. The goal of this network, which also used AI-generated photos, was to delegitimize the West and endorse Chinese state propaganda. Another example was a Facebook page called “Zionist Spring,” which used fake accounts to spread disinformation in favor of then-Prime Minister Benjamin Netanyahu.

A third application is the targeting of specific individuals. For example, in July 2020, a news report detailed the story of a completely fabricated UK student who published an article accusing two activists of being “known terrorist sympathizers.”

A new front in the war on disinformation

Synthetic media technology has progressed rapidly. A recent study found that AI-generated faces are indistinguishable from real ones and are even considered more trustworthy.

How do we fight back? There are several possibilities. Various laws have been proposed, but some scholars are skeptical, suggesting that curbing use of the technology would not only be an unconstitutional violation of free speech but might also create more problems than it solves.

Tech companies might offer a better solution. Facebook, for instance, has been researching how to detect AI-generated photos through reverse engineering. The company also launched a deepfake detection challenge. Additionally, there are now applications (such as a Chrome extension) to detect fake profile photos. But even here, there may be an unintended consequence. One study showed that raising public awareness of deepfake technology among voters caused them to distrust all political videos, even legitimate ones.

Synthetic media appears likely to pose a substantial threat to societal stability in the coming years and decades.