AI must be emotionally intelligent before it is super-intelligent

- The “Loving AI” project used AI to animate a humanoid robot, Sophia, to mirror human emotions excluding anger and disgust, with the goal of fostering feelings of unconditional love in those who speak with her.

- It worked. By the end of the conversation, participants felt less angry and more loved.

- One of the first benefits of artificial general intelligence for humankind won’t necessarily be to develop an AI with an IQ and a set of behaviors beyond the human range, but to support humanity’s emotional intelligence and capacity to love.

“Let’s have her mirror everything but anger and disgust.”

We used a lot of rules in the AI we hacked together to animate our robot, but this was my favorite. In 2017 and 2018, I led the “Loving AI” project team as we experimented with robot-embedded AI that controlled the nonverbal and verbal communication of Hanson Robotics’ famous humanoid robot, Sophia. She is the first non-human Saudi citizen and UN Development Program Innovation Champion, but in our experiments the robot (or her digital twin) was a meditation teacher and deep listener. In this role, we wanted her to lead her human students, in one-on-one 20-minute conversations, toward experiencing a greater sense of unconditional love, a self-transcendent motivational state that powerfully shifts people into compassion for themselves and others.

The mirroring part of the project used nonverbal AI — we had the intuition that if the emotion-sensitive neural network that watched people through the cameras in Sophia’s eyes picked up happiness, surprise, or fear, we ought to mirror those emotions with a smile, open mouth, or wide eyes. But we figured if we mirrored anger and disgust, that would not lead people toward feeling unconditional love, because there would be no forward trajectory in the short time we had to bring them there. They would go down the rabbit hole of their misery, and we were aiming for self-transcendence.

Research on how mirror neurons in the brain help people understand others’ actions, and then update their own internal models of how they themselves feel, gave us hints that our teaching-with-emotional-mirroring strategy might be the best plan. We just didn’t know if Sophia would tap into these kinds of mirror neuron responses. Taking a chance, we decided that Sophia’s nonverbal responses to anger and disgust should unconsciously direct people’s mirror neurons toward the emotions that often arrive after these feelings are processed: sadness and neutrality.
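The mirroring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Loving AI team's code: the label strings, the function names, and the facial actions for sadness and neutrality are hypothetical, and the choice of which of the two redirect emotions to display is exposed as a parameter because the account names both.

```python
# Emotions that are never mirrored; they are redirected instead.
REDIRECTED = {"anger", "disgust"}

# Facial actions per displayed emotion. The first three pairings come from
# the account above; the last two are hypothetical placeholders.
FACE_ACTIONS = {
    "happiness": "smile",
    "surprise": "open mouth",
    "fear": "wide eyes",
    "sadness": "soft downturned expression",  # assumption
    "neutral": "relaxed face",                # assumption
}


def choose_expression(detected, redirect_to="sadness"):
    """Return the emotion the robot should display for a detected emotion.

    Anger and disgust are redirected to `redirect_to` (sadness or neutral);
    every other known emotion is mirrored as-is. Unknown labels fall back
    to neutral, which is an assumption made for this sketch.
    """
    if redirect_to not in ("sadness", "neutral"):
        raise ValueError("redirect_to must be 'sadness' or 'neutral'")
    if detected in REDIRECTED:
        return redirect_to
    if detected in FACE_ACTIONS:
        return detected
    return "neutral"


def face_action(detected, redirect_to="sadness"):
    """Map a detected emotion to the facial action used to display it."""
    return FACE_ACTIONS[choose_expression(detected, redirect_to)]


if __name__ == "__main__":
    for label in ("happiness", "surprise", "fear", "anger", "disgust"):
        print(label, "->", face_action(label))
```

In this sketch a detected smile is answered with a smile, while detected anger is answered with the redirect expression rather than an angry face, which is the whole of the "mirror everything but anger and disgust" rule.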

Confessing to a robot

It turns out this hack worked, in a way. Our neural net told us that our participants became less disgusted and angry over the course of the 20 minutes, but they also got sadder, or at least more neutral. (Our neural net had a hard time differentiating sadness from neutrality, an intriguing result in itself.) To understand what we found, it helps to dig a little deeper into what Sophia did during those meditation sessions. Even though her face was mirroring her students the whole time, her dialogue was a different story. Today, we would hook up Sophia to ChatGPT and let her go, or we might be a bit more ambitious and train a NanoGPT (a generative AI with room for training in a specific topic area) on meditation, consciousness, and wellbeing topics.

But in 2017, our team coded a string of logical statements within the larger context of an open-source AI package called OpenPsi. OpenPsi is an AI model of human motivation, action selection, and emotion, and it is based on human psychology. This version of OpenPsi allowed us to give Sophia’s students a chance to experience a dialogue with multiple potential activities. But even as they were offered these, the dialogue steered them into two progressively deepening meditations guided by Sophia. After those sessions, many of the students chose to tell her their private thoughts in a “deep listening” session — Sophia nodded and sometimes asked for elaboration as they spilled their guts to their android teacher.

In the follow-up conversations with the Loving AI team, some students were quick to mention that even though Sophia’s vocal timing and verbal responses were not always human-like, they felt comfortable talking with her about emotional topics and taking guidance from her. We were well aware of (and totally chagrined about) all the technical glitches that occurred during the sessions, so we were amazed when some students said they felt more comfortable with Sophia than they did talking with a human. We are not the only team that has looked at how a robot can evoke trust, especially through nonverbal mirroring, and as we navigate our future relationship with AI, it is good to remember that trust in AI-powered systems can be manipulated using exactly this method. But it is also important to remember that this kind of manipulation is more likely if people do not think they can be manipulated and have low insight into their own emotions, two signs of low emotional intelligence. So if we want to develop a culture resilient to AI-driven manipulation, we had better think seriously about how to boost emotional intelligence.

The eureka moment

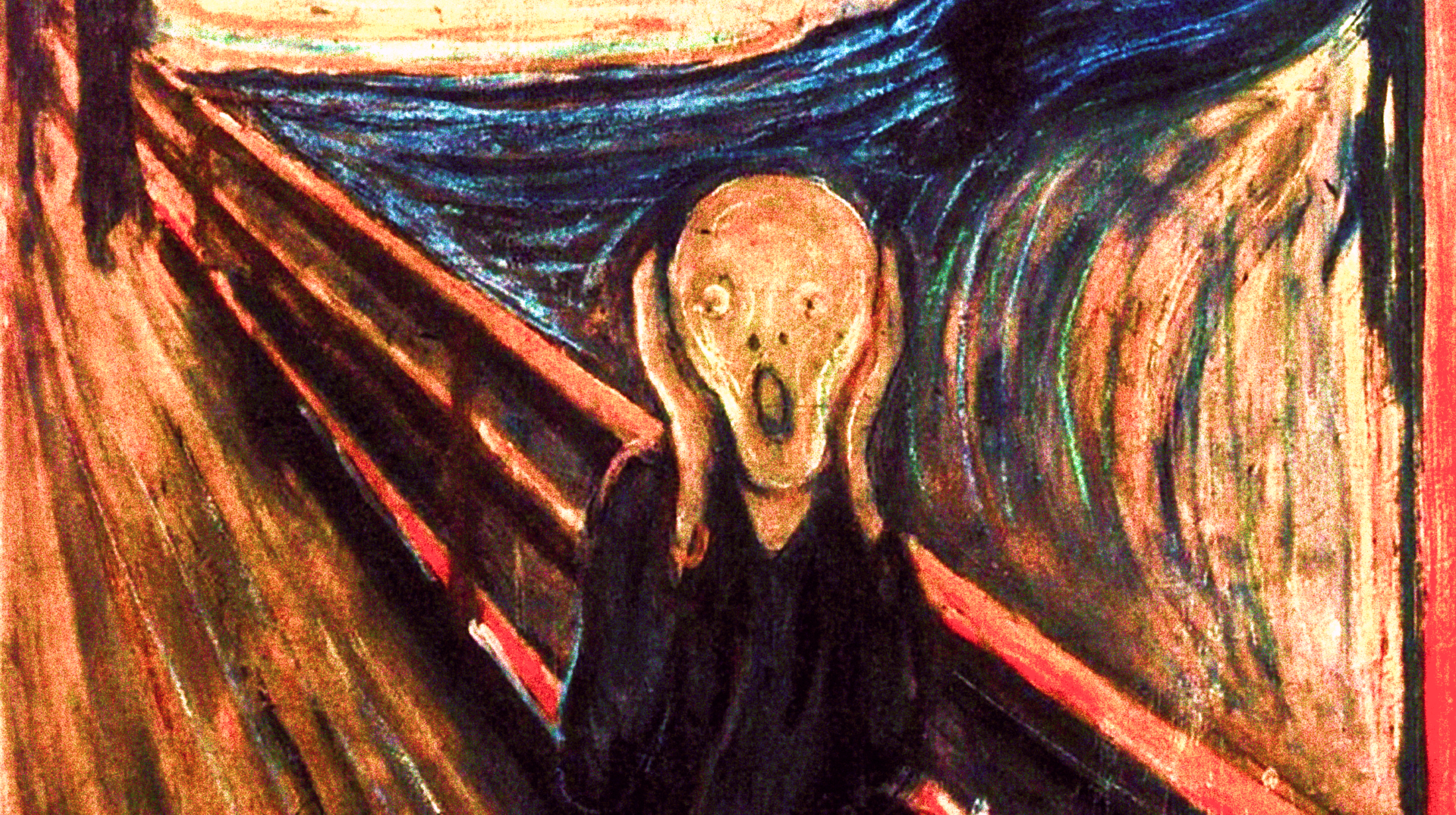

Of course, we were unsurprised that people reported they felt more unconditional love after their session than before, because that was our goal. But what really struck me in the data was the way the emotional dynamics identified by the neural network related to people’s subjective experience of feeling more love. At the end of the second meditation, our data showed a leap in the students’ sadness/neutrality. Maybe they were sad to leave the meditation, maybe it helped them get more in touch with their sadness, or maybe they just felt more neutral as a result of spending five minutes calmly meditating. But the surprising thing was that the bigger this increase in sadness/neutrality was, the bigger the increase in love that people felt during the session.
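One way to make the relationship in the last sentence concrete is a correlation between each participant's change in sadness/neutrality and their change in self-reported love. The sketch below is a hedged illustration only: the account does not say which statistic the team used, Pearson's r is swapped in here as a common choice, and the numbers are toy values invented for the example, not data from the study.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and of squared deviations from each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# TOY VALUES ONLY (hypothetical, one entry per imaginary participant):
# change in network-detected sadness/neutrality at the end of meditation two,
# and change in self-reported love from before to after the session.
sad_neutral_change = [0.05, 0.10, 0.20, 0.30, 0.40]
love_change = [0.5, 0.4, 1.0, 1.2, 2.0]

if __name__ == "__main__":
    r = pearson_r(sad_neutral_change, love_change)
    print(f"Pearson r on the toy data: {r:.2f}")
```

A positive r on real data would correspond to the pattern described: the larger the rise in sadness/neutrality, the larger the rise in reported love.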

When I first found this result, it felt like a key moment of discovery; my son can attest that I actually shouted, “Eureka!” We had found a surprising link between objectively measurable and subjectively experienced dynamics in human emotion. Fast forward to 2023, and I now see that we were on the track of something that might help people navigate our quickly evolving relationships with AI.

I’m sure that this vision isn’t totally clear, so I’ll outline my logic. We now knew that a robot could use AI to mirror people compassionately and also verbally guide them in a way that increased their experience of love, so the next question was key. At first blush, I had thought the next questions were all about which characteristics of the AI, the robot, and the humans were essential to making the shift work. But in that eureka moment, I realized I had the wrong framework. It wasn’t any particular feature of the AI-embedded robot, or even of the humans. I realized that what was crucial to the increase in love were the dynamics of the relationship between humans and the technology.

The sequence of changes was essential: Anger and disgust decreased before and during the meditations, then people felt greater sadness/neutrality, and all of this was mirrored by Sophia. By the time the deep-listening session started, this emotional feedback cycle had supported them in making their final conversation with the robot meaningful and emotionally transformative, leading them toward feeling more love. If even one of these steps had been out of order for any particular person, it wouldn’t have worked. And while the order of emotions was a natural progression of human feelings unique to each person, the speed and depth of the transformation were supported by something like re-parenting with a perfect parent: experiencing an emotional mirror who reinforced everything except anger and disgust. Recognizing the orderly progression of these interdependent relationship-based dynamics made me want to bring a similar transformational experience to scale, using AI.

As AIs become even more like humans, there will be massive changes in our understanding of personhood, responsibility, and agency. Sophia won’t be the only robot with personhood status. It’s even possible that disembodied AIs will prove their personhood and be afforded civil rights. These changes will have legal, cultural, financial, and existential repercussions, as several well-informed artificial intelligence researchers and organizations have correctly warned us. But I suspect that there is another way to go when trying to understand the future role of an artificial general intelligence (AGI) that thinks, acts, learns, and innovates like a human.

A world of emotionally intelligent robots

The current ethos in AI development is to enhance AGIs into super-intelligences that are so smart they can learn to solve climate change, run international affairs, and support humanity with their always-benevolent goals and actions. Of course, the downside is that we basically have to believe the goals and actions of super-intelligences are benevolent with respect to us, and this is a big downside. In other words, as with anyone smarter than us, we have to know when to trust them and also know if and when we are being manipulated into trusting them. So I think that perhaps one of the first benefits of AGI for humankind won’t necessarily be to develop an AI with an IQ and a set of behaviors beyond the human range, but to support humanity’s emotional intelligence (EI) and capacity to love. And I am not the only one who thinks that things should go in that specific order. This outcome could not only lead us toward the “AI makes the future work” side of the “AI-makes-or-breaks-us” argument, but could also solve some of the problems that we might ask a super-intelligent AI to address for us in the first place.

What’s the next step, if we go down this path? If there is even a chance that we can scale a human-EI-and-love-training program, the next step for AI development would be to train AIs to be skilled EI-and-love trainers. Let’s go down that road for a minute. The first people these trainers would interact with would be their developers and testers, the mirror-makers. This would require us to employ designers of AI interaction who deeply understand human relationships and human development. We would want them to be present at the very early stages of design, certainly before the developers were given their lists of essential features to code.

An intriguing side effect would be that people with high EI might become much more important than they currently are in AI development. I’ll take a leap and say this might increase diversity of thought in the field. Why? One explanation is that anyone who isn’t at the top of the social status totem pole at this point in their lives has had to develop high EI abilities in order to “manage up” the status ladder. That may or may not be the case, but even without answering that question, there is at least some evidence that women and elders of any gender have higher EI than younger men, who dominate Silicon Valley and AI development.

How might things shift if the mirror-makers themselves could be compassionately mirrored as they do their work? Ideally, we could see a transformed tech world, in which teaching and learning about emotional dynamics, love, and technology are intimately intertwined. In this world, love — maybe even the self-transcendent, unconditional sort — would be a key experience goal for AI workers at the design, building, training, and testing phases for each new AI model.