We’ve all tried to win an argument by laying down some strong statistics to prove that we’re right. But cognitive neuroscientist Tali Sharot discusses the limitations of information in changing people’s beliefs. In fact, intelligent people are likely to manipulate data to align with their pre-existing beliefs. That’s when your super smart statistics start to backfire.

In one experiment, providing more extreme data to both believers and skeptics resulted in increased polarization rather than consensus. Brain scans reveal that when two people disagree, the brain seems to “switch off,” not encoding the opposing views.

In a study at UCLA aiming to convince parents to vaccinate, directly refuting the autism link wasn’t effective. Instead, shifting the focus to the purpose of vaccines – protecting against deadly diseases like measles – was more persuasive. The key is identifying a shared objective or common motive, as seen with the mutual concern for children’s health, rather than emphasizing divisive points.

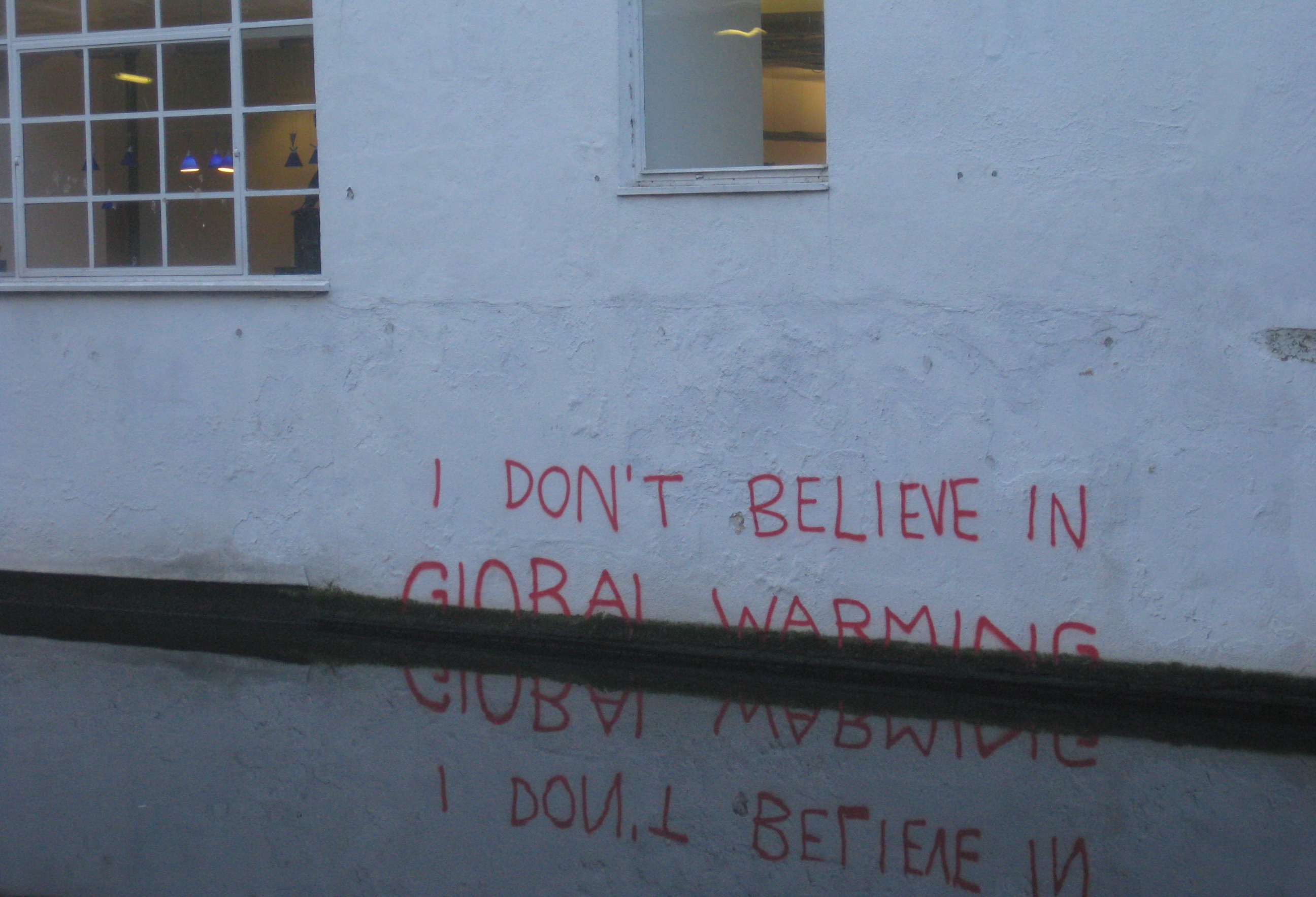

Tali Sharot: Most of us think that information is the best way to convince people of our truth, and, in fact, it doesn't work that well. We see that all the time, right? We see it with climate change, where there's tons of data suggesting that climate change is man-made, but about 50% of the population doesn't believe it, or with people arguing about things like how many people were at the presidential inauguration.

So, we have facts, but people decide which facts they wanna listen to, which facts they wanna take and change their opinions, and which they want to disregard. And one of the reasons for this is, when something doesn't conform to what I already believe, what people tend to do is either disregard it or rationalize it away, because information doesn't take into account what makes us human, which is our emotions, our desires, our motives, and our prior beliefs.

So, for example, in one study, my colleagues and I tried to see whether we could use science to change people's opinions about climate change. The first thing we did was ask people, "Do you believe in man-made climate change? Do you support the Paris Agreement?" And based on their answers, we divided them into the strong believers and the weak believers, and then we gave them information. For some people, we said that, "Scientists have reevaluated the data and now conclude that things are actually much worse than they thought before, that the temperature would rise by about 7 degrees to 10 degrees." For some people, we said, "The scientists have reevaluated the data and they now believe that, actually, the situation is not as bad as they thought. It's much better, and the rise in temperature would be quite small."

And what we found is that people who did not believe in climate change, when they heard that the scientists are saying, "Actually, it's not that bad," they changed their beliefs even more in that direction, so they became more extremist in that direction, but when they heard that the scientists think it's much worse, they didn't budge. And the people who already believed that climate change is man-made, when they heard that scientists are saying things are much worse than they thought before, they moved more in that direction, so they became more polarized, but when they heard that the scientists are saying, "It's not that bad," they didn't budge much. So, we gave people information, and, as a result, it caused polarization. It didn't cause people to come together.

So, the question is: What's happening inside our brain that causes this? And, in one study, my colleagues and I scanned brain activity of two people who were interacting, and what we found was, when those two people agreed on a question that we gave them, the brain was really encoding what the other person was saying, the details that they gave, but when the two people disagreed, it looked metaphorically as if the brain was switching off and not encoding what the other person was saying. And, as a result, when the two agreed, they became even more confident, but, when they disagreed, there wasn't as much of a change in their confidence in their own view.

What has been shown by Kahan and colleagues from Yale University is that, the more intelligent you are, the more likely you are, in fact, to change data at will. So, what they did is they first gave participants in their experiment analytical and math questions to solve, and then they gave them data about gun control: 'Is gun control actually reducing violence?' And they found that more intelligent people actually were more likely to twist data at will to make it conform to what they already believed.

So, it seems that people are using their intelligence not necessarily to find the truth, but to take in the information and change it to conform to what they already believe. So, that suggests that just giving people information, without considering first where they're coming from, may backfire on us. But we don't always need to go against someone's conviction in order to change their behavior, and let me give you an example. So, this is a study that was conducted at UCLA where what they wanted to do is convince parents to vaccinate their kids, and some of the parents didn't want to vaccinate their kids because they were afraid of the link with autism.

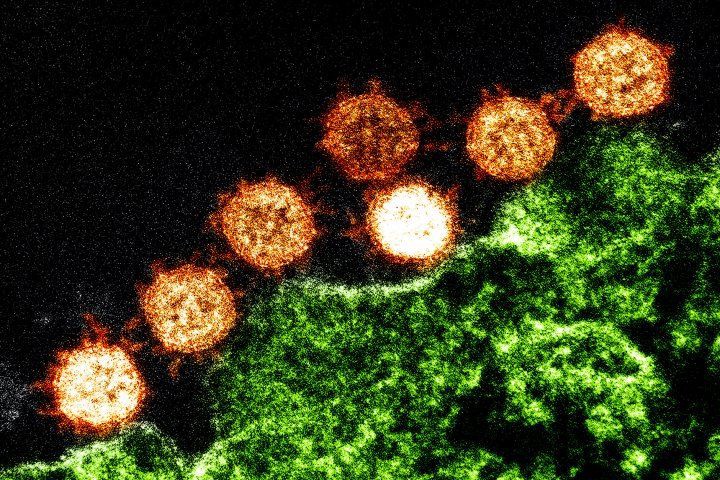

So they had two approaches. First, they said, "Well, the link with autism is actually not real. Here's all the data suggesting there isn't a link between vaccines and autism." And it didn't really work that well. So, instead of going that way, they used another approach, which is, "Let's not talk about autism. We don't necessarily need to talk about autism to convince you to vaccinate your kid." Instead, they said, "Well, look, these vaccines protect kids from deadly diseases, right? From the measles." And they showed them pictures of what the measles looks like, because, in this argument about vaccines, people actually forgot what the vaccines are for, what they are protecting us from. They highlighted that, and didn't necessarily go on to discuss autism, and that had a much better outcome. The parents were much more likely to say, "Yes, we are gonna vaccinate our kids." So, the lesson here is that we need to find the common motives. The common motive in this case was the health of the children, not necessarily going back to the thing that they were arguing about, that they disagreed about.