Ask Ethan: Could Measurement Inaccuracies Explain Our Cosmic Controversies?

If we want our conclusions to be meaningful, our data had better be robust.

When it comes to the Universe, there’s a whole lot that doesn’t add up. All the matter we observe and infer — from planets, stars, dust, gas, plasma, and exotic states and objects — can’t account for the gravitational effects we see. When we observe galaxies and measure both their distances and redshifts, it reveals the expanding Universe, and yet there are two recent surprises: observations that indicate the expansion is accelerating (attributed to dark energy), and the fact that different measurement methods lead to two different sets of expansion rates.

Are these problems actually real puzzles to be reckoned with, or could they be due to problems with the measurements themselves? That’s what Martin Step wants to know, as he writes in to ask:

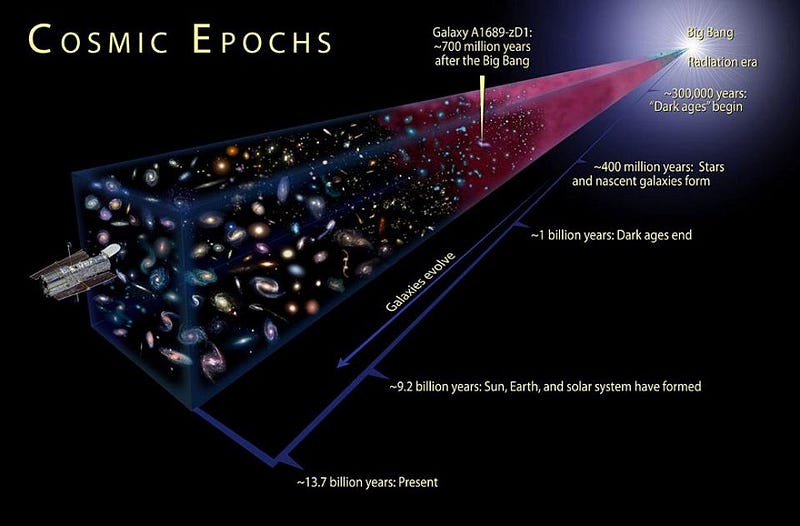

“I’ve read many times about astronomers looking at objects that are 13.7 or so billion light years away, so far away in space and time that these must have been objects that formed soon after the Big Bang… So, if we are just seeing the light from these objects now, and they are massively red-shifted, that implies that at the moment those photons were emitted these celestial objects were already a long distance away… it seems like at least some of the assumptions being made about these objects are wrong. Either they are not as far away in space or time as the red shift indicates, or the red shift theory is less and less accurate the farther away the object is, or something else.”

It’s so important to make sure we’re not fooling ourselves. Here’s why we think these problems are real.

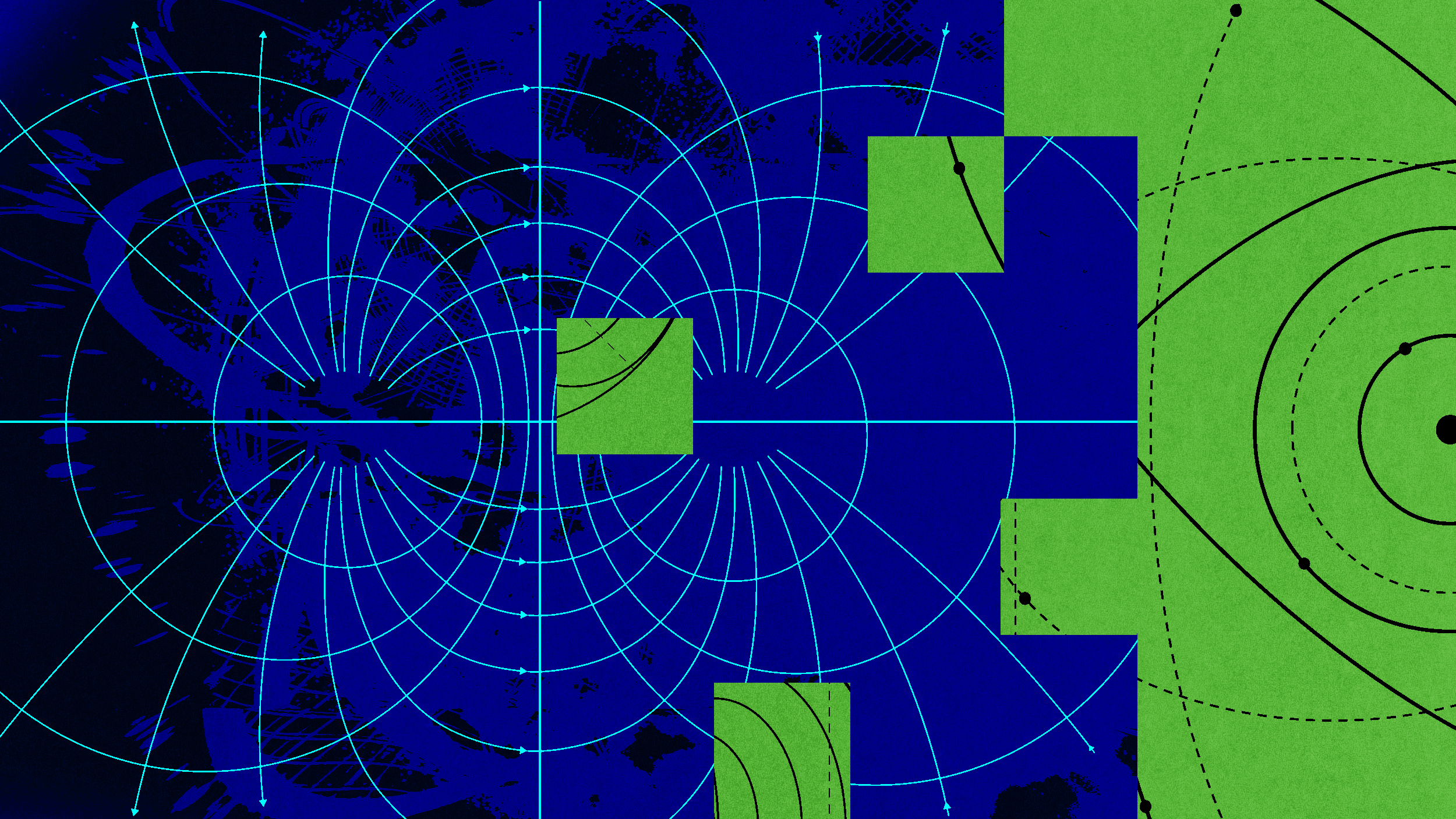

In general, whenever you do any sort of work, you want some independent way to check yourself. Some things won’t be checked, of course, as you have to have some set of starting points that everyone can agree on, so it’s important to realize the assumptions we’re making. (Even if they themselves are being or have been checked in other ways in the past.) For the expanding Universe, we typically assume the following:

- the laws of physics are the same, everywhere, for all observers at all times,

- that General Relativity, as put forth by Einstein, is our theory of gravitation,

- that the Universe is isotropic, homogeneous, and expanding,

- and that light obeys Maxwell’s laws of electromagnetism when it behaves classically, and the quantum rules that govern it (quantum electrodynamics) apply when it exhibits quantum behavior.

Those assumptions have been tested in a number of ways, but that’s what we consider the modern starting point for attempts to measure the Universe. After all, we need a framework to work in, and this one is not only powerful and useful, but it’s survived many cross-checks as well.

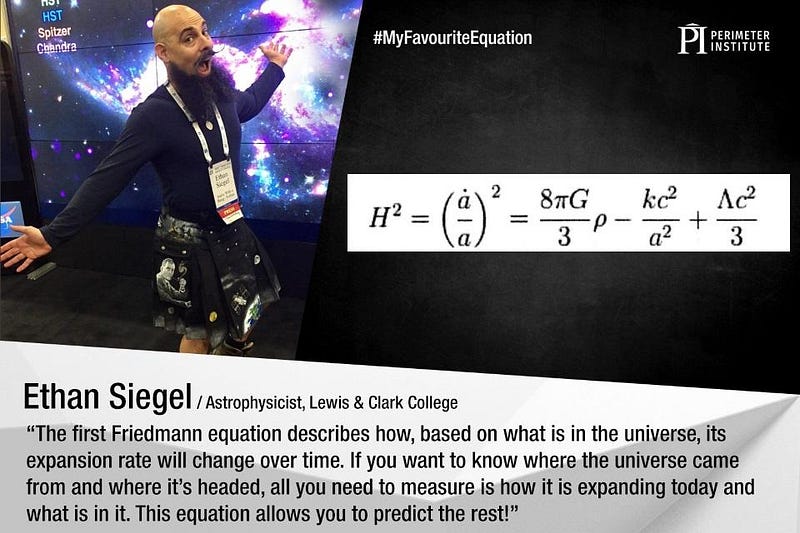

This is an incredibly powerful starting point, because it allows us to link up a number of properties of the Universe with the observables we can actually measure. The above equation — known as the first Friedmann equation — can be derived directly from General Relativity under the above assumptions. It tells you that if you can measure the expansion rate of the Universe today and at earlier times, you can determine exactly what’s in the Universe in terms of matter and energy. (Conversely, if you can instead measure the expansion rate today and the contents of the Universe, you can determine the expansion rate at all times in the past and future.)
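To make that link concrete, here is a minimal Python sketch of the first Friedmann equation written in terms of redshift. The function name `hubble_rate` and the default numbers (an expansion rate of 70 km/s/Mpc today, 30% matter, 70% dark energy) are illustrative assumptions, not values taken from this article.

```python
import math

def hubble_rate(z, h0=70.0, omega_m=0.3, omega_r=0.0, omega_lambda=0.7):
    """Expansion rate H(z) in km/s/Mpc from the first Friedmann equation.

    All default parameters are illustrative assumptions. Curvature is
    assigned whatever is left over so the densities sum to 1 today.
    """
    omega_k = 1.0 - omega_m - omega_r - omega_lambda
    e_squared = (
        omega_r * (1 + z) ** 4      # radiation dilutes as (1+z)^4
        + omega_m * (1 + z) ** 3    # matter dilutes as (1+z)^3
        + omega_k * (1 + z) ** 2    # spatial curvature term
        + omega_lambda              # dark energy stays constant
    )
    return h0 * math.sqrt(e_squared)

# Expansion rate today and when the Universe was half its present scale.
rate_today = hubble_rate(0.0)
rate_at_z1 = hubble_rate(1.0)
```

Changing the assumed contents changes the expansion history, which is why measuring one lets you infer the other.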

There are a number of ways to do this, but the oldest, most traditional method is as simple as it gets:

- you measure some quantity that’s related to either the observed size or the observed brightness of an object (like a star or galaxy),

- you infer — from some other measured quantity or from some known property of the object — how intrinsically large or bright the object actually is,

- and you also measure the redshift of the object, or how much the light has been shifted from its rest-frame wavelength.

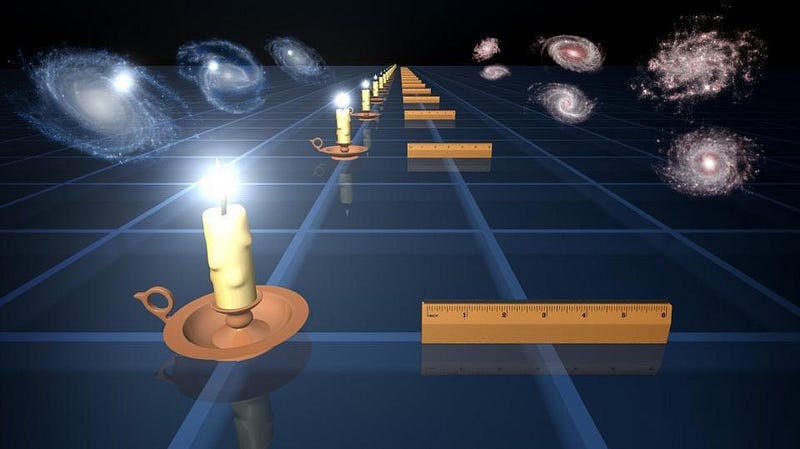

In astrophysics, these two general methods are known as standard candles (if they’re based on brightness) and standard rulers (if based on size), as they’re based on simple concepts.

If I take an object like a candle or a light bulb, and I place it a certain distance away, I’ll be able to see it with a particular brightness. In fact, for every candle or light bulb in the Universe, if we put it at that same distance, it would have a specific brightness that you’d see associated with it. That’s because each one has a property inherent to it that determines how luminous it is: an intrinsic brightness.

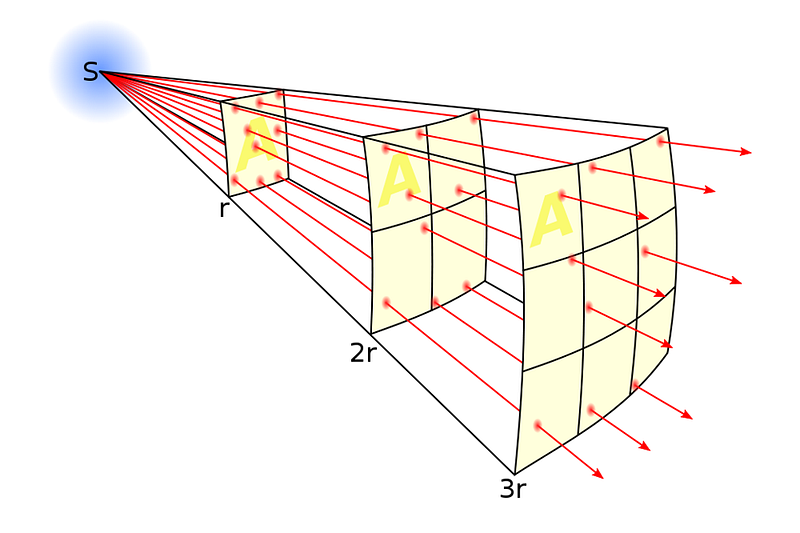

If I move it farther away, it will appear fainter: twice as far away means one-quarter the brightness; three times as far away means one-ninth the brightness; four times as far away means one-sixteenth the brightness, etc. Light emitted from a source spreads out in a spherical shape, and so the farther away you go, the less light you can see with the same amount of collecting area.
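The inverse-square behavior described above can be sketched in a few lines of Python. This assumes a static, flat space with no expansion or absorption, and the function names are hypothetical helpers chosen for illustration.

```python
import math

def apparent_flux(luminosity_watts, distance_m):
    """Flux (W/m^2) received from a source radiating equally in all directions."""
    # The light is spread over a sphere of area 4*pi*d^2.
    return luminosity_watts / (4 * math.pi * distance_m ** 2)

def distance_from_flux(luminosity_watts, flux_w_per_m2):
    """Invert the inverse-square law: known luminosity + measured flux -> distance."""
    return math.sqrt(luminosity_watts / (4 * math.pi * flux_w_per_m2))

# A 100 W bulb seen from 1 m and from 2 m (illustrative numbers).
flux_near = apparent_flux(100.0, 1.0)
flux_far = apparent_flux(100.0, 2.0)
ratio = flux_far / flux_near  # twice as far gives one-quarter the brightness
```

This is the whole idea behind a standard candle: if you know the intrinsic luminosity, the measured flux hands you the distance.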

A similar story happens for the sizes of objects: the farther away they are, the smaller they appear. The details of the story are slightly more complicated in the expanding Universe because the geometric properties of space change as time unfolds, but the same principle applies. If you can make a measurement that reveals the intrinsic brightness or size of an object, and you can measure the apparent brightness or size of an object, you can infer its distance from you.
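For the standard-ruler case, a rough sketch looks like this. The first function uses the small-angle approximation; the second applies the standard relation between the two kinds of distance in an expanding Universe, where the brightness-based distance exceeds the size-based distance by a factor of (1 + z)². The function names are hypothetical.

```python
def ruler_distance(physical_size, angular_size_rad):
    """Size-based (angular diameter) distance, in the same units as physical_size.

    Uses the small-angle approximation: angle ~ size / distance.
    """
    return physical_size / angular_size_rad

def luminosity_distance_from_angular(d_angular, z):
    """Convert a size-based distance into a brightness-based distance.

    In an expanding Universe the two differ by a factor of (1 + z)^2.
    """
    return d_angular * (1 + z) ** 2

# An object 1 unit across that spans 0.001 radians on the sky.
d_a = ruler_distance(1.0, 0.001)
# The same object at redshift 1 looks four times "farther" by brightness.
d_l = luminosity_distance_from_angular(100.0, 1.0)
```

At small redshifts the factor is close to 1 and the two distances agree, which is why the everyday intuition works nearby.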

These cosmic distances are important because knowing how far away the objects you’re viewing are allows you to determine how much the Universe has expanded between the time the light was emitted and the time it arrives at our eyes. If the laws of physics are the same everywhere, then the quantum transitions within atoms and molecules will be the same for all atoms and molecules everywhere in the Universe. If we can identify patterns of absorption and emission lines and match them up to atomic transitions, then we can measure how much that light has been redshifted.
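Measuring a redshift from an identified spectral line is simple arithmetic, sketched below. The hydrogen-alpha rest wavelength of about 656.28 nm is a well-known laboratory value; the observed wavelength is a made-up example, and the function names are hypothetical.

```python
H_ALPHA_REST_NM = 656.28  # laboratory wavelength of the hydrogen-alpha line

def redshift(observed_nm, rest_nm):
    """Redshift z: the fractional shift of a line from its rest wavelength."""
    return (observed_nm - rest_nm) / rest_nm

def stretch_factor(z):
    """Factor by which wavelengths have been stretched since emission."""
    return 1.0 + z

# Made-up observation: the line shows up at twice its rest wavelength.
z_example = redshift(1312.56, H_ALPHA_REST_NM)
stretch = stretch_factor(z_example)
```

A negative result would indicate a blueshift, meaning the line arrived at a shorter wavelength than it was emitted with.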

A small part of that redshift (or blueshift, if the object is moving towards us) will be due to the object’s own motion under the gravitational influence of all the other objects around it: what astronomers call “peculiar velocity.” The Universe is only isotropic (the same in all directions) and homogeneous (the same in all locations) on average: if you were to smooth it out by averaging over a rather large volume.

In reality, our Universe is clumped and clustered together, and the gravitational overdensities — like stars, galaxies, and clusters of galaxies — as well as the underdense regions, exert pushes and pulls on the objects within it, causing them to move around in a variety of directions. Typically, objects within a galaxy move around at tens-to-hundreds of km/s relative to one another because of these effects, while galaxies can move at hundreds or even thousands of km/s because of peculiar velocities.

But that effect is always superimposed on the expansion of the Universe, which is primarily responsible, especially at large distances, for the redshifts we observe.
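To see why peculiar velocities matter nearby but wash out far away, here is a small sketch comparing them with the recession speed from the linear Hubble law. The expansion rate of 70 km/s/Mpc and the 500 km/s peculiar velocity are illustrative assumptions, and the linear law itself is only a low-redshift approximation.

```python
def hubble_flow_velocity(distance_mpc, h0=70.0):
    """Recession speed (km/s) from the linear Hubble law, v = H0 * d."""
    return h0 * distance_mpc

def peculiar_fraction(distance_mpc, peculiar_kms, h0=70.0):
    """Size of the peculiar velocity relative to the expansion-driven speed."""
    return abs(peculiar_kms) / hubble_flow_velocity(distance_mpc, h0)

# The same 500 km/s peculiar motion at two assumed distances.
nearby = peculiar_fraction(10.0, 500.0)    # galaxy 10 Mpc away
distant = peculiar_fraction(500.0, 500.0)  # galaxy 500 Mpc away
```

With these numbers the peculiar motion is comparable to the expansion signal at 10 Mpc but only about 1% of it at 500 Mpc.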

This is why, if we want to be sure we aren’t fooling ourselves about the conclusions we’re drawing, it’s so important to ensure that our distance measurements are reliable. If they’re biased or systematically offset in any way, that could throw all of the conclusions we build upon these methods into doubt. In particular, there are three things we should worry about.

- If our distance estimates to any of these astronomical objects are biased nearby, we could be mis-calibrating the expansion rate today: the Hubble parameter (sometimes called the Hubble constant).

- If our distance estimates are biased at large distances, we could be fooling ourselves into thinking that dark energy is real, where it might be an artifact of our incorrect distance estimates.

- Or, if our distance estimates are incorrect in a way that translates equally (or proportionally) to all galaxies, we could get a different value for the Universe’s expansion by measuring individual objects as compared to measuring, say, the properties of the leftover glow from the Big Bang: the cosmic microwave background.

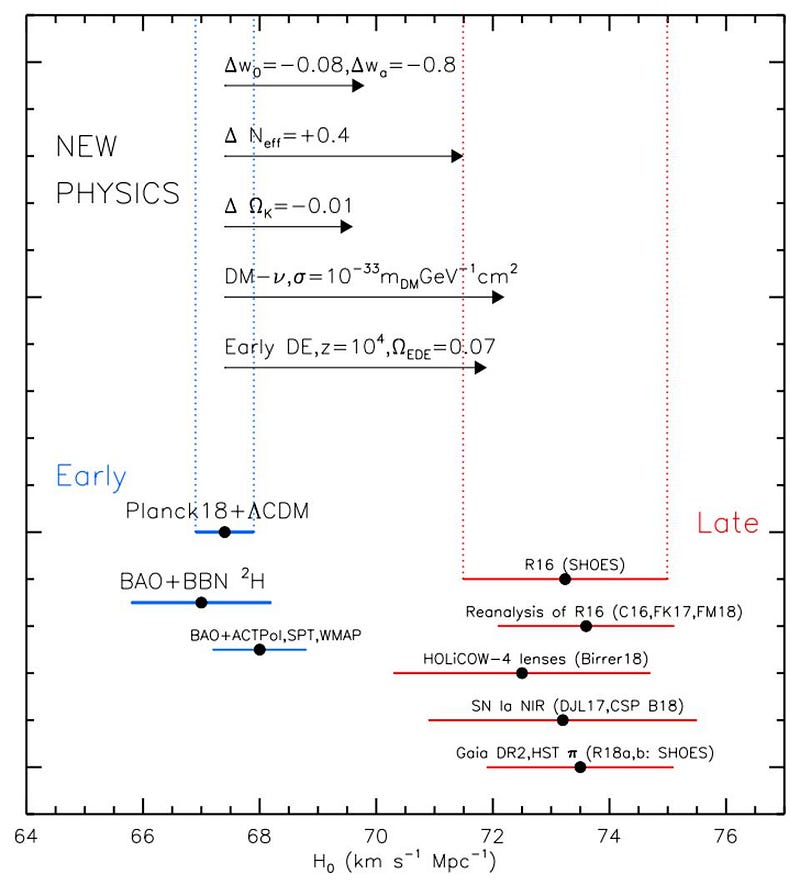

Because we see that different methods of measuring the Universe’s expansion rate actually do yield different values — with the cosmic microwave background and a few other “early relic” methods yielding a ~9% smaller value than all the other measurements — this is a legitimate concern. Perhaps it’s reasonable to worry that our distance measurements might be incorrect, and that’s an error that’s driving us to draw incorrect conclusions about the Universe, creating conundrums whose roots are our own mistakes.

Fortunately, this is something we can check. In general, there are a myriad of independent ways to measure distances to galaxies, as there are a total of a whopping 77 different “distance indicators” we can use. By measuring a specific property and applying a variety of techniques, we can infer something meaningful about the intrinsic properties of what we’re looking at. By comparing something intrinsic to something observed, we can immediately know, assuming we’ve got the rules of cosmology and astrophysics correct, how far away an object is.

The “check” we should be performing, then, is to look at numerous different, independent methods for measuring the distances to the same sets of objects, and to see whether these distances are consistent with one another. Only if the different methods all yield similar results for the same objects should we consider them trustworthy.
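One simple way to picture such a check is to combine several independent distance estimates for the same galaxy and ask whether their scatter is consistent with their quoted uncertainties. The sketch below uses an inverse-variance weighted mean and a reduced chi-square; it is not the method of any particular study, and the numbers are made up for illustration rather than drawn from a real catalog.

```python
def weighted_mean(estimates):
    """Inverse-variance weighted mean and its uncertainty.

    estimates: list of (distance, sigma) pairs from independent methods.
    """
    weights = [1.0 / sigma ** 2 for _, sigma in estimates]
    total = sum(weights)
    mean = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return mean, (1.0 / total) ** 0.5

def reduced_chi_square(estimates):
    """Scatter about the weighted mean, in units of the quoted errors.

    Values near or below 1 suggest the methods agree; values well above 1
    suggest at least one method is biased or its error is underestimated.
    """
    mean, _ = weighted_mean(estimates)
    chi2 = sum(((d - mean) / sigma) ** 2 for d, sigma in estimates)
    return chi2 / (len(estimates) - 1)

# Hypothetical distances (Mpc) to one galaxy from three different methods.
methods = [(7.5, 0.3), (7.6, 0.3), (7.4, 0.3)]
combined, combined_sigma = weighted_mean(methods)
agreement = reduced_chi_square(methods)
```

Note that the combined uncertainty is smaller than any single method’s, but only an unbiased set of measurements earns that improvement.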

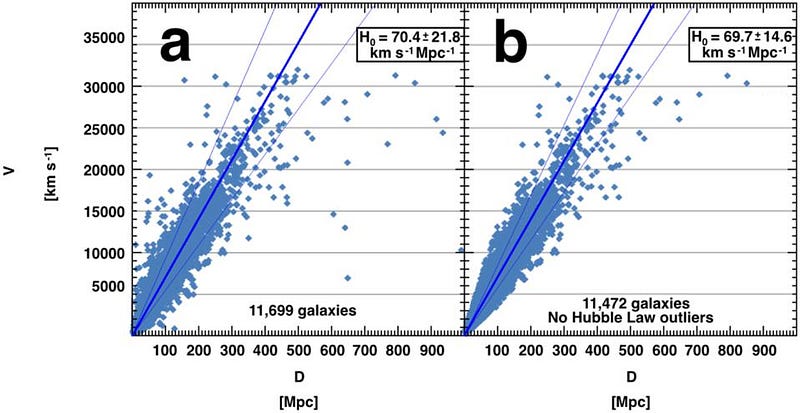

Earlier this month, exactly that test was performed, as astronomer Ian Steer leveraged the NASA/IPAC Extragalactic Database of Distances (NED-D) to tabulate multiple distances for 12,000 separate galaxies, using a total of six different methods. In particular, a couple of key galaxies frequently used as “anchor points” in constructing the cosmic distance ladder, like the Large Magellanic Cloud and Messier 106, were included. The results were spectacular: all six methods (spanning 77 various indicators) yielded consistent distances for each of the examined cases. It’s the largest independent test like this we’ve ever performed, and it shows that — to the limits of what we can tell — we don’t appear to be fooling ourselves about cosmic distances, after all.

As a result, we can confidently state that our understanding of the expanding Universe, our methods for measuring cosmic distances, the existence of dark energy, and the discrepancy between measurements of the Hubble constant using different methods are all robust results. Astronomy, like many scientific fields, often has arguments over which method is best or most reliable, and that’s why it’s so important to examine the full suite of available data. If all the methods we have yield consistent results with only negligible differences between them, our conclusions become a lot harder to ignore.

Individually, any one measurement will have large uncertainties associated with it, but a large and comprehensive data set should enable us to render those uncertainties irrelevant by providing sufficient statistics, so long as they’re unbiased. Remarkably, this study shows exactly that, enabling us to use these distance estimates for all sorts of scientific purposes — from extragalactic astronomy to cosmology to gravitational waves — with supreme confidence. As study author Ian Steer himself wrote in a heartwarming and affirmative message,

“The finding supports the idea that inclusiveness and respect for diverse data and methods results in better, more viable and more valid data than the normal approach that excludes most data, and takes only the most pristine, cherry picked data. Extragalactic distances data, like the life forms gathering it, are stronger together than expected and work better together than apart.”

Send in your Ask Ethan questions to startswithabang at gmail dot com!

Starts With A Bang is written by Ethan Siegel, Ph.D., author of Beyond The Galaxy, and Treknology: The Science of Star Trek from Tricorders to Warp Drive.