Big think: Will AI ever achieve true understanding?

Credit: GABRIEL BOUYS via Getty Images

- The Chinese Room thought experiment is designed to show that understanding something cannot be reduced to an “input-process-output” model.

- Artificial intelligence today is becoming increasingly sophisticated thanks to learning algorithms but still fails to demonstrate true understanding.

- We all demonstrate computational habits when we first learn a new skill, until this somehow becomes understanding.

It’s your first day at work, and a new colleague, Kendall, catches you over coffee.

“You watch the game last night?” she says. You’re desperate to make friends, but you hate football.

“Sure, I can’t believe that result,” you say, vaguely, and it works. She nods happily and talks at you for a while. Every day after that, you live a lie. You listen to a football podcast on the weekend and then regurgitate whatever it is you hear. You have no idea what you’re saying, but it seems to impress Kendall. You somehow manage to come across as an expert, and soon she won’t stop talking football with you.

The question is: do you actually know about football, or are you imitating knowledge? And what’s the difference? Welcome to philosopher John Searle’s “Chinese Room.”

The Chinese Room

Searle’s argument was designed as a critique of what’s called a “functionalist” view of mind. This is the philosophy that argues that our mind can be explained fully by what role it plays, or in other words, what it does or what “function” it has.

One form of functionalism sees the human mind as following an “input-process-output” model. We have the input of our senses, the process of our brains, and a behavioral output. Searle thought this was at best an oversimplification, and his Chinese Room thought experiment goes to show how human minds are not simply biological computers. It goes like this:

Imagine a room, and inside is John, who can’t speak a word of Chinese. Outside the room, a Chinese person sends a message into the room in Chinese. Luckily, John has an “if-then” book for Chinese characters. For instance, if he gets one particular string of characters, the book tells him exactly which string is the proper reply. All John has to do is follow his instruction book.

The Chinese speaker outside of the room thinks they’re talking to someone inside who knows Chinese. But in reality, it’s just John with his fancy book.
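For readers who think in code, John’s book can be caricatured as a lookup table. This is only a minimal sketch: the two entries, the `RULE_BOOK` name, and the `john_replies` function are invented for illustration and do not come from Searle’s paper.

```python
from typing import Optional

# Hypothetical rule book: each incoming string maps to a prescribed reply.
# The phrases are placeholders chosen for this sketch.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",      # "How are you?" -> "I am fine, thanks."
    "你会说中文吗？": "会，当然。",    # "Do you speak Chinese?" -> "Yes, of course."
}


def john_replies(message: str) -> Optional[str]:
    """Return the reply the book prescribes, or None if there is no rule.

    The function only compares symbols. Nothing in it depends on what
    the symbols mean.
    """
    return RULE_BOOK.get(message)


print(john_replies("你好吗？"))
print(john_replies("今天天气怎么样？"))  # no rule for this message, so None
```

From the outside, the first exchange looks like conversation. Inside, it is string matching, and a message the book never anticipated gets no reply at all.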

What is understanding?

Does John understand Chinese? The Chinese Room is, by all accounts, a computational view of the mind, yet it seems that something is missing. Truly understanding something is not an “if-then” automated response. John is missing that sinking-in feeling, the absorption, the bit of understanding that’s so hard to express. Understanding a language doesn’t work like this. Humans are not Google Translate.

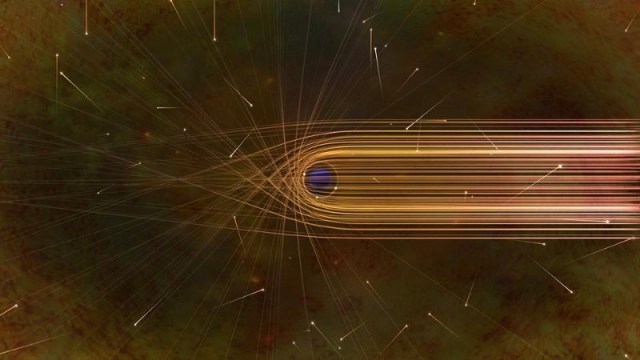

And yet, this is how AIs are programmed. A computer system is programmed to provide a certain output based on a finite list of certain inputs. If I double click the mouse, I open a file. If you type a letter, your monitor displays tiny black squiggles. If we press the right buttons in order, we win at Mario Kart. Input — Process — Output.

But AIs don’t know what they’re doing, and Google Translate doesn’t really understand what it’s saying, does it? They’re just following a programmer’s orders. If I say, “Will it rain tomorrow?” Siri can look up the weather. But if I ask, “Will water fall from the clouds tomorrow?” it’ll be stumped. A human would not (although they might look at you oddly).

A fun way to test just how little an AI understands us is to ask your maps app to find “restaurants that aren’t McDonald’s.” Unsurprisingly, you won’t get what you want.
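Both stumbles can be imitated with a toy keyword matcher. To be clear, this is a deliberately naive sketch and not how Siri or any maps app is actually built; the `toy_assistant` function and its trigger words are assumptions made up for the example.

```python
def toy_assistant(query: str) -> str:
    """Respond by spotting trigger words; a caricature, not a real assistant."""
    # Normalise case and apostrophes, drop trailing punctuation, split into words.
    words = query.lower().replace("’", "'").rstrip("?.!").split()

    if "rain" in words:
        return "look up the weather forecast"

    if "restaurants" in words:
        # The negation is thrown away with the other filler words,
        # so the brand name survives as something to search FOR.
        filler = {"restaurants", "that", "aren't", "are", "not"}
        terms = [w for w in words if w not in filler]
        return "search restaurants for: " + " ".join(terms)

    return "sorry, I didn't get that"


print(toy_assistant("Will it rain tomorrow?"))
print(toy_assistant("Will water fall from the clouds tomorrow?"))
print(toy_assistant("restaurants that aren't McDonald's"))
```

The first question hits a trigger word, the paraphrase hits none, and the third query returns a search for the very thing you asked to avoid.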

The Future of AI

To be fair, the field of artificial intelligence is just getting started. Yes, it’s easy right now to trick our voice assistant apps, and search engines can be frustratingly unhelpful at times. But that doesn’t mean AI will always be like that. It might be that the problem is only one of complexity and sophistication, rather than anything else. It might be that the “if-then” rule book just needs work. Things like “the McDonald’s test” or AI’s inability to respond to original questions reveal only a limitation in programming. Given that language and the list of possible questions are finite, it’s quite possible that AI will be able to (at the very least) perfectly mimic a human response in the not-too-distant future.

What’s more, AIs today have increasingly advanced learning capabilities. Algorithms are no longer simply input-process-output but rather allow systems to search for information and adapt anew to what they receive.

A notorious example of this occurred when a Microsoft chatbot started spouting bigotry and racism after “learning” from what it read on Twitter. (Although this might just say more about Twitter than about AI.) Or, more sinister perhaps, two Facebook chatbots were shut down after it was discovered that they were not only talking to each other but were doing so in an invented language. Did they understand what they were doing? Who’s to say that, with enough learning and enough practice, an AI “Chinese Room” might not reach understanding?

Can imitation become understanding?

We’ve all been a “Chinese Room” at times, whether talking about sports at work, cramming for an exam, using a word we didn’t entirely know the meaning of, or calculating math problems. We can all mimic understanding, but this raises the question: can imitation become so fluid or competent that it is understanding?

The old adage “fake it till you make it” has been proven true over and over. If you repeat an action enough times, it becomes easy and habitual. For instance, when you practice a language, a musical instrument, or a math calculation, it becomes second nature after a while. Our brain changes with repetition.

So, it might just be that we all start off as Chinese Rooms when we learn something new, but this still leaves us with a pertinent question: when, and how, does John actually come to understand Chinese? More importantly, will Siri or Alexa ever understand you?

Jonny Thomson teaches philosophy in Oxford. He runs a popular Instagram account called Mini Philosophy (@philosophyminis). His first book is Mini Philosophy: A Small Book of Big Ideas.