We need a new social contract for the coming golden age of robotics

- Artificial general intelligence (AGI) is the ability of machines to match or surpass human intelligence.

- Humanity needs a new social contract that governs the relationship between people and the emerging generation of AGI machines.

- This social contract would consist of a set of rules defining what intelligent machines can and cannot do — and how people should use them.

Now that artificial general intelligence (AGI), the ability of machines to match or even surpass human intelligence, seems to be within our grasp, we must encode ethics models within our future intelligent machines to ensure they contribute to the betterment of humanity and fulfill the new social contract we need.

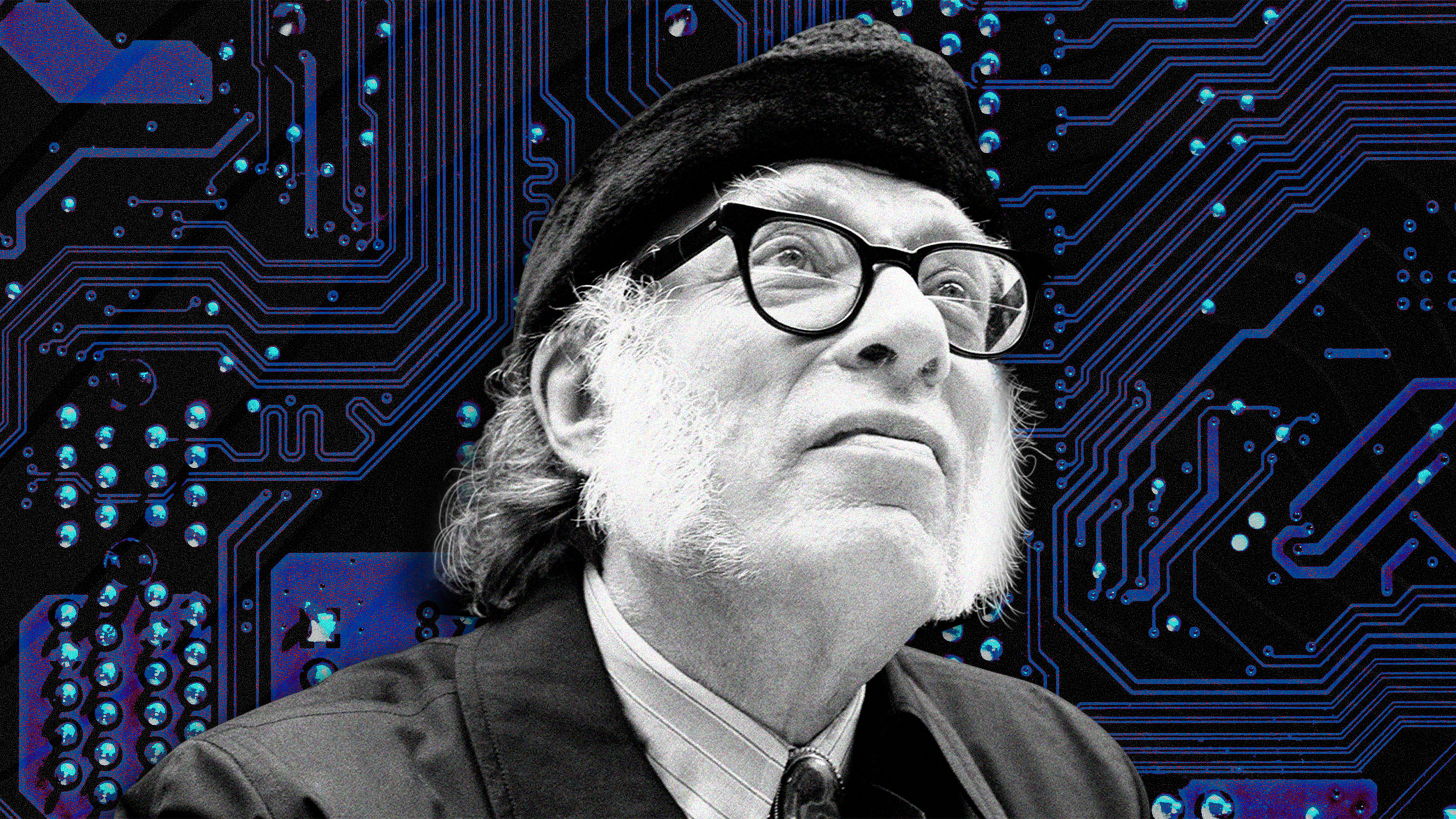

While growing up in suburban Michigan, I was the nerdy sort of kid who loved math and science and, not surprisingly, became the AV guy who ran the projector at school. I loved tinkering with electronic gadgets. My other great love as a teenager was science fiction, particularly the work of Isaac Asimov. I read hundreds of his novels and short stories during my teens and twenties. It was not until recently that I began thinking more deeply about the ethical and moral implications of intelligent machines. I looked back at what Asimov had written about the relationship between people and robots. In addition to featuring robots prominently in his novels and stories, he wrote dozens of essays about them. Asimov concluded they should be respected, not feared or controlled. He developed rules for how they should interact with humanity. Asimov was an optimist and a realist.

I am an optimist and a realist, too. To achieve a future where artificial intelligence benefits humanity, we need a new social contract that governs the relationship between people and the emerging generation of AGI machines.

The concept of a social contract emerged in the Age of Enlightenment, the period of rigorous scientific, political, and philosophical questing that spanned the 18th century in Europe. Traditionally, a social contract is an implicit or explicit deal between a country's government and its people, in which individuals surrender some of their freedoms and follow the rules laid out by the government in exchange for other benefits and the maintenance of social order. Because social orders are under constant stress, the values, laws, and regulations that embody social contracts require reexamination and modification when new factors come into play.

The new social contract I have in mind would govern the relationship between people and smart machines — assuring that people are safe and the newly emerging AGI entities align with our values and interests. It would consist of a set of rules agreed to by the world’s governments, businesses, and other institutions defining what intelligent machines can and cannot do and how people can and cannot use them.

When I look ahead, I see amazing things coming. We are at the beginning of an intelligence revolution, a moment similar to the 1850s during the Industrial Revolution.

The computer science journey began in earnest in the 1940s and 1950s. It was not until the 1960s that universities began offering computer science degrees. Then, the PC, the web, the smartphone, big data, the cloud, foundation models, and tremendous advances in artificial intelligence came in rapid succession. The science and industry that emerged from the IT revolution are still changing rapidly but are also maturing. Technology is transforming our world, our businesses, and our personal lives.

Now we are on the verge of another great lift. By harnessing AI and other techniques to master the explosion of data we see today, we can understand how the world works much more accurately and comprehensively. We can make better decisions and use Earth’s resources more responsibly. Throughout my career, I have focused on bringing technology to bear to help businesses succeed. As computer technology developed, its potential for both good and harm increased. I am hopeful these coming technological advances will improve the well-being of our species and the sustainability of life on this planet.

Over the past 80 years of the computing revolution, intelligent machines have matched or bested one human capability after another. First, we created machines with expertise in a single domain. With the arrival of foundation models, the depth of AI systems’ knowledge, the speed with which they react or predict, and the accuracy of their predictions are pretty darned impressive. They already demonstrate a recall of knowledge far beyond human capabilities. Now, we are creating machines with expertise in multiple domains. These large-scale machine learning models will dramatically lower the cost of intelligence, enabling new smarts and capabilities in applications and services of all types.

I see the 2030s and beyond as the golden era of robotics. Today’s robots that make cars on assembly lines and clean up nuclear power plants after meltdowns are impressive. But the robots of the future will impress on another order of magnitude. They will be largely autonomous — because they cannot always take orders from computing systems located in the cloud. To make machines capable of autonomy, we will have to provide them with AI capabilities that are miniaturized and localized. Initially, these machines will serve single purposes like cleaning our floors, delivering packages, driving vehicles, and flying us around. Over time, more general purpose robots that take on human characteristics and form will emerge.

I believe machines will possess artificial general intelligence within the next decade. It is only a matter of when. These prospects do not frighten me, but they do concern me. What will the societal impact be in a world where smart machines are general purpose, matching the capabilities of people and exceeding them in many ways? What ethics and rules will control these machines? In the future, it seems likely that robots will be capable of performing most physical tasks, and intelligent models within them will be capable of performing most intellectual tasks. What will people do if machines and AI systems do all that work?

I am not an expert in AI, and I am not an ethicist. I am an engineer and a businessman. I do not have a clear answer to these issues. These questions will likely be among society’s most critical policy issues in the decades ahead. Computer scientists, business leaders, government officials, academics, ethicists, and theologians must work together.

I believe people will develop solutions to the profound ethical issues raised by tomorrow’s robots and intelligent machines, but I think the process will be messy. Throughout history, every major technological advance has been used for both good and bad. Ultimately, though, common sense prevails, and society establishes laws and regulations that oversee the use of technology. This governance applies to everything from electricity to nuclear technology, and I believe the same will happen with intelligent machines.

We can and will overcome these challenges, and the rising tide can lift all boats. But these issues will not solve themselves. We must think deeply about them and design solutions before the disruptions take full force.